Chapter 13

Integrating Data Science into Business Operations

"Building a data-driven culture is about more than just tools and technology; it’s about fostering a mindset where data is at the core of every decision." — Jim Tyo

This chapter examines how organizations can strategically embed data science into their business operations to drive lasting value. It begins by exploring the cultural and procedural shifts needed to become truly data-driven – highlighting the role of leadership in fostering a mindset where decisions are routinely guided by data rather than gut feel. Next, it emphasizes effective communication and collaboration between analytics teams and frontline staff, showing how translators, shared language, and feedback loops bridge the gap between data insights and real-world execution. The chapter then discusses the benefits of flexible internal structures like Analytics Centers of Excellence and hybrid team models that balance centralized expertise with decentralized agility. It also underscores the importance of ongoing training and support, explaining how data literacy programs and in-house academies build a workforce capable of leveraging analytics at all levels. Finally, the chapter outlines best practices for adoption – from aligning projects with business goals and starting with quick-win pilots to scaling successes and instituting governance – all illustrated with case studies in finance, retail, healthcare, FMCG, distribution, and logistics. In sum, the overview provides a high-level roadmap for infusing data-driven thinking into an organization’s DNA, supported by real examples of companies that achieved transformative outcomes through these approaches.

13.1. Cultural and Procedural Shifts

A thriving data science initiative does not start with a fancy machine learning algorithm or a massive analytics software suite. It begins with a firm-wide acknowledgment that decisions will be based on evidence, not gut feel or the phrase “we’ve always done it this way.” Leadership must champion this cultural shift from the top, ensuring that management structures, incentives, and daily practices align with data-driven goals. It is often said that “culture eats strategy for breakfast,” and nowhere is this truer than in data science. Many organizations can procure sophisticated tools but remain stuck using them as superficial add-ons because executives never embed data-driven thinking into their core business processes. When senior leaders model evidence-based decisions, it sends a clear message that rigorous analysis is more than a trendy initiative—facts actually trump hierarchy (Public Sector Network) (How Business Leaders can Promote a Data-Driven Culture) (Nesta).

Embracing data science also means accepting that experimentation, and with it occasional failure, is inevitable. Executives who tolerate only success stifle innovation and cripple their own data teams. Conversely, leaders who create a safe environment for trial and error, free of blame, encourage creative uses of analytics to solve business challenges (Nesta). This mindset promotes learning from whatever the data uncovers, whether it confirms the initial hypothesis or smashes it to bits. In the best data-driven organizations, senior management regularly showcases these “lessons learned” and treats them as stepping stones to more refined strategies.

Transforming culture takes more than inspirational slogans. It requires overhauling everyday routines so that data checks, analysis reviews, and model-driven insights become standard elements of decision-making. Organizations must integrate data checkpoints into each stage of project development, from initial business case proposals to final sign-off on large-scale investments. If that sounds like a bureaucratic nightmare, consider the alternative: major strategic moves guided by personal hunches. Intuition occasionally leads to big wins, but the evidence suggests that systematically leveraging analytics is a far safer bet. Data-driven firms report significant performance improvements, including cost reductions of around 10% and profit increases of around 8% (How Business Leaders can Promote a Data-Driven Culture). They are also reported to be 23 times more likely to acquire new customers, with markedly better retention (How Business Leaders can Promote a Data-Driven Culture).

Such gains do not emerge magically from a new department of data scientists hidden away in a corner. They arise when organizations publicize data-driven victories and celebrate the employees or teams that achieve them. Far too many companies push for analytics adoption in theory, then forget to reward the people who champion these transformations in practice. By instituting formal recognition programs, such as an annual “Data-Driven Excellence” prize, management demonstrates that innovative uses of data are not merely permissible but are business imperatives. Over time, these behaviors become embedded in corporate DNA. Yet make no mistake: shifting to a data-centered culture typically requires change management strategies, well-orchestrated internal communication, and consistent training to ensure employees at all levels understand why data is a prized strategic asset (173-2010).

To solidify cultural changes, organizations must establish robust governance mechanisms and support structures. This often means creating an analytics center of excellence or a dedicated cross-functional team responsible for guiding data projects to align with broader commercial objectives (173-2010) (Elder Research). These committees or forums offer more than ceremonial oversight. They set standards for data quality and accessibility, prioritize analytics initiatives based on strategic impact, and define clear accountability for project outcomes. When senior executives participate, it reinforces the principle that data science is not a backroom experiment but a key driver of value creation across all business units.

Governance also extends to how data is collected, cleaned, and stored. No amount of modeling wizardry will overcome sloppy, incomplete, or biased datasets. Indeed, the unglamorous tasks of data cleaning and stewardship consume a surprisingly large share of a data scientist’s time, with frequently cited estimates running as high as 80%. Forward-thinking organizations invest in the infrastructure, tools, and policies needed to ensure data integrity from the outset. They also recognize that great analytics teams include data engineers, domain experts, and business translators, roles that are essential for bridging the gap between complex algorithms and commercial imperatives.

While building a data-driven culture is crucial, it is equally important for leaders to understand the core steps in the data science lifecycle so they can spot opportunities and challenges. The process begins with data collection, which sounds straightforward but is often a messy affair involving multiple sources—transactional systems, social media platforms, IoT devices, or even “old-fashioned” spreadsheets saved on someone’s laptop. Once relevant data is gathered, the next stage is data cleaning, where duplicates, missing entries, and other anomalies are resolved. Many organizations underestimate how critical this step can be. Half-baked datasets undermine model performance and can result in embarrassingly incorrect insights that tarnish trust in analytics altogether.
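To make the cleaning step concrete, here is a minimal Python sketch using only the standard library. The records, the field names (`order_id`, `region`, `amount`), and the assumption that `order_id` uniquely identifies a row are hypothetical, chosen for illustration rather than taken from any particular system. The sketch removes duplicates, normalizes inconsistent text, and routes missing or implausible values to a review list instead of silently guessing at them.

```python
from typing import Optional

# Hypothetical raw records, as they might arrive from several source systems.
RAW_RECORDS = [
    {"order_id": "A-1", "region": "North", "amount": "120.50"},
    {"order_id": "A-1", "region": "North", "amount": "120.50"},  # exact duplicate
    {"order_id": "A-2", "region": " south ", "amount": "80"},    # messy casing/whitespace
    {"order_id": "A-3", "region": "East", "amount": ""},         # missing amount
    {"order_id": "A-4", "region": None, "amount": "-15"},        # missing region, negative amount
]


def parse_amount(value: Optional[str]) -> Optional[float]:
    """Convert an amount string to float; return None if blank or unparseable."""
    if value is None or not value.strip():
        return None
    try:
        return float(value)
    except ValueError:
        return None


def clean_records(records):
    """Deduplicate on order_id (assumed unique), normalize fields, split off anomalies."""
    seen = set()
    clean, rejected = [], []
    for rec in records:
        key = rec["order_id"]
        if key in seen:
            continue  # keep only the first occurrence of each order_id
        seen.add(key)
        region = (rec.get("region") or "").strip().title() or None
        amount = parse_amount(rec.get("amount"))
        row = {"order_id": key, "region": region, "amount": amount}
        # Flag rather than silently fix: missing values and negative amounts go to review.
        if region is None or amount is None or amount < 0:
            rejected.append(row)
        else:
            clean.append(row)
    return clean, rejected


clean, rejected = clean_records(RAW_RECORDS)
print(f"{len(clean)} clean, {len(rejected)} for review")  # prints: 2 clean, 2 for review
```

Flagging anomalies instead of auto-filling them is a deliberate choice in this sketch: questionable records stay visible to the people who own the data, which helps preserve trust in the resulting analysis.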

After data is prepared, teams conduct exploratory data analysis. This is where a certain degree of healthy suspicion is applied: does the data hold any unexpected patterns, outliers, or contradictions? Can we visualize relationships between variables that might inform hypothesis generation? From there, model building commences, often using statistical techniques or machine learning algorithms ranging from linear regression to neural networks. Choosing the “right” model is a balancing act, requiring domain context (is it a forecasting problem, classification, or recommendation?) and technical skill. Once a viable model is trained, it must be rigorously evaluated using test data or cross-validation to verify its predictive accuracy and generalizability.
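The evaluation step can be illustrated with k-fold cross-validation. The Python sketch below uses only the standard library: it fits a one-variable least-squares line on each training split and reports the mean absolute error on the held-out fold. The synthetic ad-spend and sales data, the five folds, and the function names are assumptions for illustration; in practice, teams would typically rely on an established library rather than hand-rolling this.

```python
import random
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x with a single feature."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = y_bar - b * x_bar
    return a, b


def k_fold_indices(n, k, seed=0):
    """Shuffle row indices once, then deal them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validate(xs, ys, k=5, seed=0):
    """Train on k-1 folds, score on the held-out fold; return MAE per fold."""
    scores = []
    for test_idx in k_fold_indices(len(xs), k, seed):
        test_set = set(test_idx)
        train = [i for i in range(len(xs)) if i not in test_set]
        a, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        errors = [abs(ys[i] - (a + b * xs[i])) for i in test_idx]
        scores.append(mean(errors))
    return scores


# Hypothetical data: weekly ad spend (x) vs. sales (y) with seeded random noise.
rng = random.Random(42)
xs = [float(i) for i in range(50)]
ys = [3.0 + 2.0 * x + rng.gauss(0, 1.0) for x in xs]

scores = cross_validate(xs, ys, k=5)
print("MAE per fold:", [round(s, 2) for s in scores], "mean:", round(mean(scores), 2))
```

Broadly similar error across folds suggests the model generalizes; a fold with much larger error is a prompt to look for subsets of the data that the model handles poorly.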

Despite what some glossy vendor pitches might suggest, the job does not end at a high accuracy score. Deploying models into production—integrating them with enterprise systems, real-time dashboards, or even customer-facing applications—can be the trickiest step of all. This phase requires robust software engineering practices, ongoing monitoring of model performance, and a plan for continuous retraining if data patterns shift. Neglecting post-deployment maintenance is a surefire way to degrade results over time. Models are like living organisms that can “drift” if market conditions, consumer behavior, or internal business processes evolve.
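One common way to watch for drift is to compare the distribution of a model input or score in production against the distribution seen at training time. The sketch below computes the Population Stability Index (PSI) in plain Python. The decile binning, the simulated scores, and the 0.1 and 0.25 alert thresholds (a rule of thumb often quoted in credit-risk practice) are assumptions for illustration, not universal standards.

```python
import bisect
import math
import random
from statistics import quantiles


def psi(baseline, current, n_bins=10, eps=1e-6):
    """Population Stability Index of `current` relative to `baseline`.

    Bin edges are the baseline's quantiles, so each bin holds roughly
    1/n_bins of the baseline rows.
    """
    edges = quantiles(baseline, n=n_bins)  # n_bins - 1 interior cut points

    def shares(values):
        counts = [0] * n_bins
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    expected, actual = shares(baseline), shares(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_status(value, watch=0.1, alert=0.25):
    """Map a PSI value to a rule-of-thumb label (thresholds are assumptions)."""
    if value > alert:
        return "significant shift: review or retrain"
    if value > watch:
        return "moderate shift: monitor closely"
    return "stable"


# Simulated model scores: training baseline vs. two later production months.
rng = random.Random(7)
training_scores = [rng.gauss(50, 10) for _ in range(5000)]
stable_month = [rng.gauss(50, 10) for _ in range(5000)]
shifted_month = [rng.gauss(60, 12) for _ in range(5000)]

for name, sample in [("stable", stable_month), ("shifted", shifted_month)]:
    value = psi(training_scores, sample)
    print(f"{name}: PSI={value:.3f} ({drift_status(value)})")
```

Running a check like this on a schedule, and routing "significant shift" results to the team that owns the model, is one simple way to turn the retraining plan described above into a routine rather than an afterthought.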

Data science initiatives in the commercial realm increasingly leverage advanced techniques such as deep learning, reinforcement learning, and real-time analytics to gain a competitive edge. Deep learning models excel at image recognition, natural language processing, and complex pattern discovery. Real-time analytics pipelines can power instantaneous recommendations or fraud detection, enabling companies to respond to changes in milliseconds or seconds rather than hours or days. These sophisticated systems are no longer reserved for tech giants; even mid-sized firms can harness them through cloud-based platforms and open-source libraries.
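As a small illustration of the real-time idea, the sketch below flags unusually large transactions as they stream in, using Welford's online algorithm to maintain a running mean and variance in constant memory. This is a simple statistical rule, not a production fraud model; the class name `StreamingZScore`, the 4-standard-deviation threshold, the 30-observation warm-up, and the simulated amounts are all hypothetical choices.

```python
import math
import random


class StreamingZScore:
    """Flag values far from the running mean, using Welford's online algorithm.

    Each update is O(1) in time and memory, so no history is stored.
    """

    def __init__(self, threshold=4.0, warmup=30):
        self.threshold = threshold  # standard deviations that count as unusual
        self.warmup = warmup        # observations required before flagging
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations

    def update(self, x):
        """Score x against the history so far, then fold x into the statistics."""
        flagged = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                flagged = True
        # Welford's update of the running mean and sum of squared deviations
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return flagged


# Simulated card transactions with one injected, hypothetical suspicious amount.
rng = random.Random(1)
amounts = [rng.gauss(60, 15) for _ in range(200)]
amounts[150] = 2500.0

detector = StreamingZScore()
alerts = [i for i, amount in enumerate(amounts) if detector.update(amount)]
print("flagged transaction indices:", alerts)
```

Scoring each value before folding it into the statistics keeps an outlier from masking itself. The trade-off is that a single extreme value inflates the variance afterward, which is one reason real systems often prefer windowed or robust statistics.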

However, a rush toward the latest algorithm must be tempered by rigorous ethical and legal considerations. Bias in training data can lead to discriminatory outcomes, while opaque model decisions can undermine stakeholder trust. Executives should demand transparent, explainable approaches where possible and ensure that data governance policies extend to privacy and compliance regulations. In an era of heightened public scrutiny and rapidly evolving laws, failing to address AI ethics is a risky gamble. Indeed, cynics might say that ignoring ethical concerns today is akin to willfully ignoring environmental regulations decades ago—it may work in the short term, but it rarely ends well.

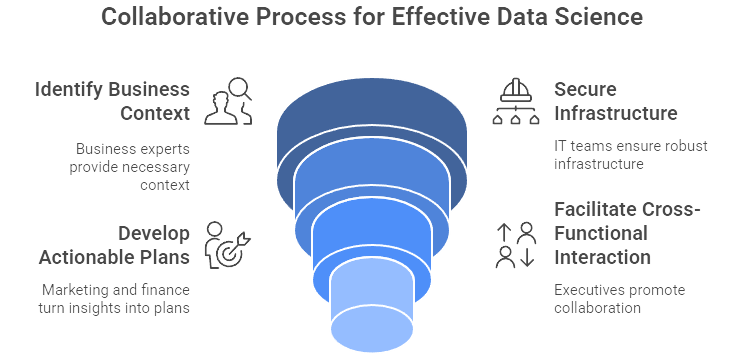

Figure 1: Illustration of the collaborative journey to effective data science, starting with business context and culminating in cross-functional collaboration for actionable plans. (Image by Napkin).

Robust data science programs flourish in organizations that value collaboration across departments. Data scientists rarely succeed in isolation, toiling away on “secret sauce” algorithms that nobody understands. They need business experts to provide context for the data and shape the right questions. They rely on IT teams to secure the necessary infrastructure, from scalable compute resources to data pipelines. They benefit from marketing and finance professionals who can turn analytical insights into actionable business plans. Executives who facilitate this cross-functional interaction help break down silos that often hinder analytics progress. The blunt truth is that no high-level model can fix an entrenched organizational silo or a power struggle. Collaboration remains the bedrock of any meaningful analytics outcome (Davenport, 2018).

One of the most common mistakes in executive circles is viewing data science as a miraculous cure-all. It is not. Even the most cutting-edge model cannot salvage a poorly conceived business strategy or contradictory corporate objectives. Another pitfall is failing to invest in the “last mile” of analytics—the translation of insights into decisions and measurable actions. Plenty of slick presentations end up shelved when leaders do not understand how to operationalize the results.

Executives should also watch out for what might be called “pilot paralysis.” Organizations spin up multiple proofs of concept but never transition them into production or measure ROI. It is far too easy to get stuck in an endless loop of trials without cementing value delivery. The solution lies in disciplined project management, clear success metrics, and a willingness to pivot quickly if early signs show a project is off track. Finally, it pays to remember that data science is a journey, not a short campaign. Continuous learning, model refinement, and iterative improvements distinguish companies that truly embed analytics in their DNA from those that merely dabble and drift away.

Building a data-driven culture is about aligning technology, people, and processes under the unifying principle that better decisions emerge from better analysis. Yes, it involves acquiring advanced tools, but more importantly, it requires fostering an environment where data is trusted, champions are recognized, and failures are seen as learning opportunities rather than career blunders. This transformation demands proactive leadership that relentlessly promotes and enforces data-based thinking. It calls for updated governance structures, a clear roadmap for analytics initiatives, and cross-functional collaboration to ensure the right expertise converges on the right problems (Public Sector Network) (How Business Leaders can Promote a Data-Driven Culture) (Nesta) (173-2010) (Elder Research).

Far from being the exclusive domain of tech-savvy companies, data science offers profound benefits to any commercial organization willing to confront the cultural and procedural shifts needed for success. Decision-makers who grasp the nuances of data collection, cleaning, model building, deployment, and maintenance will be equipped to steer their enterprises beyond superficial analytics showpieces and into a sustainable, insight-driven future. By weaving data literacy into the corporate fabric—and occasionally laughing at the hype along the way—executives can position their firms to leverage the real power of data science in an increasingly competitive market.

13.2. Effective Communication and Collaboration

One of the greatest ironies in modern organizations is that despite spending millions on advanced analytics solutions, many executives still wonder why they see so little impact on the ground. Often, the culprit is a breakdown in communication between data scientists—who live and breathe models, algorithms, and statistics—and frontline staff, who are pressed to deliver results in customer service, operations, or sales. This disconnect can manifest in myriad ways, from dashboards that no one understands to predictive tools that might as well speak another language. To bridge this gap, savvy companies are formalizing a new role often referred to as an “analytics translator” (McKinsey). The translator sits between technical and operational teams, ensuring that data solutions respond to real business problems and that the people implementing those solutions can easily understand the insights being generated.

Translators typically bring deep domain knowledge, whether in retail, manufacturing, public service, or healthcare, and enough analytics fluency to hold their own in conversations with data experts. Their mission is not to overwhelm end users with graphs and equations but to pinpoint precisely where analytics can solve operational pain points, guide the modeling process accordingly, and champion the adoption of data-driven outputs. In organizations that are early in their analytics journey, translators often make the difference between a brilliant model that gathers dust on a server and a well-integrated solution that frontline teams actually use.

Even with translators in place, the broader organization must build a shared language around data. While this might sound like a “nice to have,” in reality it is foundational for any successful analytics endeavor. Without consistent terminology for key metrics, methodologies, or performance indicators, cross-functional collaboration can devolve into confusion. A common vocabulary allows the C-suite, analysts, and frontline employees to interpret insights uniformly. Some companies invest in an internal “analytics academy” that aligns executives, data teams, and business units on how data will be collected, how metrics are defined, and how reports should be read (McKinsey). This type of in-house education is not just for the technicians; it is equally vital for leaders who must make informed decisions based on analytics outputs.

Still, introducing new terms and concepts can create friction among employees who worry that analytics is poised to replace their judgment. One counterintuitive but effective strategy is to avoid or downplay the word “data” when addressing certain teams. Instead of saying, “We need to incorporate more data-driven insights,” some organizations prefer phrases like “useful knowledge” or “practical insights” (Nesta). This shift in language might sound trivial, but it can significantly reduce staff resistance, because it reframes analytics not as an alien technology but as a helpful extension of existing expertise.

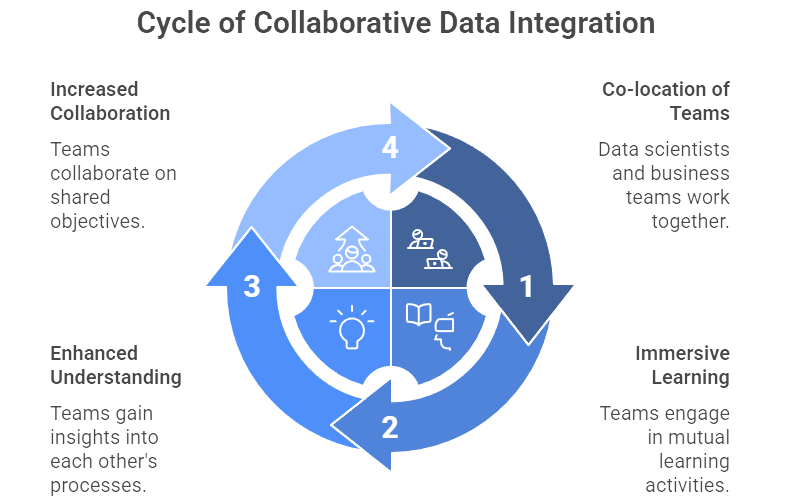

Figure 2: Illustration of how teams evolve from shared learning experiences to achieve increased collaboration through co-location and mutual understanding. (Image by Napkin).

Real cultural change happens at ground level, where data scientists and frontline employees work side by side rather than occupying separate universes. A popular approach involves co-location or secondments, where data analysts temporarily “live” in a business unit such as operations or sales. Far from an academic exercise, this immersive tactic fosters authentic mutual learning. When a data scientist sits beside sales reps and sees how they pitch to customers, the analytics team gains a nuanced understanding of the metrics and insights that actually matter in those conversations. In turn, the business staff becomes more comfortable with the data scientist, seeing them not as a mysterious figure behind a curtain of statistics but as a collaborator committed to shared objectives.

In one real-world case, an analyst was seconded to a social services department for several months (Nesta). By participating in staff meetings and observing daily challenges, the analyst was able to refine early predictive tools to better account for on-the-ground realities. This direct feedback loop improved the tools’ accuracy and built trust in the data. The social workers involved came to regard analytics not as an external imposition but as a practical resource that adapted to their needs. Role rotations and joint project teams of this kind help organizations avoid the common pitfall of data teams working in isolation and producing outputs that lack actionable relevance for frontline units.

Executives often ask how to guarantee that the brilliant analyses coming out of a data team actually make it into day-to-day decision-making. The short answer is: storytelling. Analytics experts sometimes forget that raw numbers rarely excite or persuade. Frontline teams want to know what the insights mean for their work, whether that is cutting response times, closing more sales, or improving patient outcomes. To drive home the benefits, data must be communicated as relatable narratives, enriched by clear visuals that highlight trends or anomalies in ways that are quick to grasp and act upon.

Organizations making strides in analytics adoption usually have a “single source of truth” in the form of accessible dashboards or real-time digital tools (How to Solve Data Quality Problems at the Source). These interfaces deliver tailored insights directly to the relevant teams, whether it is a store manager reviewing daily product recommendations or a logistics coordinator monitoring inventory predictions. However, the dashboards themselves should not be the only communication channel. A well-defined support network—sometimes a simple helpdesk or a designated “data champion” within each department—ensures that frontline employees know where to turn if they have questions. This two-way dialogue is vital for refining analytical models over time. If a recommended strategy starts producing odd outcomes, the frontline staff should be able to flag it immediately, allowing the data team to investigate and adjust accordingly.

There is a brutal truth that many companies learn the hard way: even the most advanced machine learning models or robust data pipelines will fail to deliver tangible ROI if frontline staff either distrust or do not understand them. Worse yet, trying to mandate compliance from the top without clear explanations often breeds underground resistance—teams simply refuse to use the analytics tools because they see them as irrelevant or bureaucratic. The difference between successful and stalled analytics deployments often comes down to whether leaders invest in the human aspects of collaboration, knowledge-sharing, and communication.

A common misconception among executives is that once the budget is approved for a big-name analytics platform, the rest will “just happen.” This is about as realistic as buying a world-class soccer team’s jersey and expecting to play like a pro. Effective analytics is a team sport. It requires not just the data scientists and the fancy software but also domain experts, operational champions, and a culture that rewards curiosity and continuous learning. Translators bridge the gap, but the entire organization must cultivate the habit of listening to analytics insights, asking tough questions, and providing feedback that sharpens model performance.

Embedding analytics across an organization is a long-term journey that relies heavily on communication and collaboration. Rather than chasing quick wins that look flashy in a quarterly report, executives should prioritize sustainable partnerships between data teams and frontline staff. This means securing leadership buy-in for secondments and cross-functional projects, formalizing training academies so everyone speaks the same language about metrics, and rewarding employees—whether they are data scientists or sales reps—who champion evidence-based decisions.

It may sound obvious, but nothing kills momentum faster than leadership indifference. When frontline staff see that insights are not acted upon or that data initiatives die quietly after an initial pilot, trust erodes and cynicism creeps in. Conversely, when executives highlight successful projects, give credit to cross-functional teams, and provide the tools and resources needed for ongoing collaboration, data science can permeate the very DNA of the organization. That is when analytics stops being a trendy experiment and becomes a true competitive advantage.

As analytics maturity grows, companies often formalize these communication strategies into standard operating procedures. A frequent approach is to maintain open feedback loops, where data scientists review real-world outcomes with frontline staff on a regular basis, fine-tuning models in response to new information. Another tactic is to schedule “analytics showcases” where diverse teams present ongoing projects and lessons learned, fostering internal cross-pollination of ideas. Companies that excel at communication and collaboration in data science typically see a virtuous cycle develop: more employees gain familiarity with analytical outputs, more trust is built, and more business challenges are proactively tackled with data-driven methods.

Ultimately, communication and collaboration are the unsung heroes of any data science initiative. While advanced algorithms and big data platforms command attention, they cannot thrive in an environment that lacks shared language, mutual trust, and clear channels for feedback and support. By actively bridging the gap between analytics teams and frontline staff, organizations set the stage for meaningful, lasting impact—where data science informs strategic decisions at every level and becomes a catalyst for sustained commercial success.

13.3. Centers of Excellence and Beyond

In the quest to establish a data-driven organization, many enterprises begin by creating a centralized Analytics Center of Excellence (CoE). On paper, a CoE sounds simple: a specialized team dedicated to driving analytical innovation across the company. In practice, however, it can be a game-changer—or a bureaucratic maze—depending on how it is structured and supported by leadership. A CoE is often defined as an internal group focused on promoting analytics to achieve business objectives, with responsibilities that include providing a common data platform, setting standards for data quality and governance, and offering expert consulting services to various business units (173-2010). Because it is typically chartered at the executive level, a CoE sends a strong signal of top management’s commitment to data-driven decision-making (173-2010).

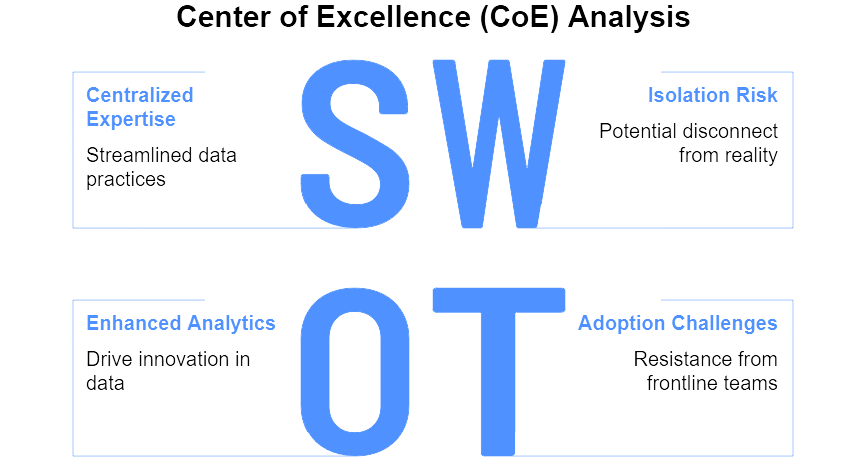

Figure 3: Illustration of the strategic considerations for a CoE, weighing the advantages of streamlined data and enhanced analytics against the challenges of potential disconnect and adoption hurdles. (Image by Napkin).

At its best, a CoE becomes the backbone of an organization’s data strategy. It concentrates a critical mass of analytics talent, ensuring consistent methodology, clear standards, and ready access to expertise (173-2010). Rather than forcing every department to reinvent the wheel on data collection, cleaning, and modeling practices, the CoE can serve as a repository of best practices. It can also become the go-to place for advanced projects, such as deep learning initiatives or the exploration of real-time analytics pipelines. By pooling talent in one place, companies can tackle ambitious, high-impact challenges that individual departments might avoid for lack of skills or budget.

However, one must be brutally honest about the potential drawbacks: a CoE risks becoming an isolated “ivory tower” that churns out sophisticated models disconnected from frontline realities. Left unchecked, it may create friction between the centralized data team and the rest of the business, turning analytics into a top-down mandate that struggles for on-the-ground adoption.

A Center of Excellence is ideally positioned to assist in every stage of the data science lifecycle: from data collection and cleaning to exploratory analysis, model building, evaluation, deployment, and maintenance. In the data collection phase, the CoE can establish unified protocols that streamline the ingestion of information from multiple sources—transactional databases, web applications, social media channels, and beyond. In cleaning and preparation, the CoE’s specialists set enterprise-wide standards that reduce the risk of inconsistent or incomplete datasets. These efforts minimize errors that could later compromise model accuracy.

When it comes to model building and evaluation, the CoE typically offers a shared environment equipped with advanced tools, scalable computing resources, and specialized expertise. This helps business units avoid duplicating expensive technology investments or learning the same lessons multiple times. After model validation, the CoE can facilitate deployment by providing best practices for integrating predictive engines into existing systems—such as CRM platforms or e-commerce websites. Finally, it can serve as a guardian of ongoing maintenance, keeping an eye on model performance and retraining needs as market conditions evolve or data drifts. In short, a well-functioning CoE drives the adoption of robust end-to-end practices that enhance reliability and confidence in analytics across the organization.

While centralization brings clear benefits—such as consistency in data governance—some organizations discover the pitfalls of concentrating all analytics expertise in a single location. If the CoE’s staff barely interacts with business units, the insights they produce may not reflect real operational challenges. Recognizing this, many companies have adopted a hybrid or “hub-and-spoke” structure (TDWI). The hub, or central analytics team, sets enterprise standards, stewards major strategic projects, and ensures alignment with corporate priorities. Meanwhile, the spokes—smaller analytics or data science squads embedded within individual departments—handle day-to-day tasks that require deep domain knowledge (Elder Research).

Take, for instance, a consumer goods multinational that wants to personalize marketing campaigns globally while also tailoring local promotions to regional cultures. Its central CoE might define the overarching data architecture and maintain a suite of machine learning libraries, while each country’s embedded analytics team fine-tunes models for local preferences and regulatory nuances. This arrangement capitalizes on the synergy between standardization and customization. The central hub ensures that everyone speaks the same “data language,” while the departmental spokes ensure that insights align with market-specific realities. In practice, this structure fosters a culture of continuous collaboration: the spokes receive state-of-the-art technical support from the hub, and the hub, in turn, stays grounded by real feedback from local markets (Elder Research).

Some businesses opt for a fully decentralized model, especially if they already possess strong data maturity in multiple divisions. In this approach, data scientists sit directly within each department—marketing, finance, operations, HR—and report to local managers who understand the immediate commercial or operational pressures. Proponents of this approach argue that it ensures lightning-fast response times and deep contextual awareness. After all, who understands the subtleties of a marketing campaign better than the marketing department itself?

However, decentralization can come with a heavy price tag if not managed carefully. Without a unifying framework for data quality, technology standards, and knowledge-sharing, each unit may develop its own processes and tools. Over time, this can breed inefficiencies, duplicate efforts, and hamper the company’s ability to integrate data across departments. Many organizations that start off decentralized eventually create a “virtual CoE” or community of practice to align coding standards, define key metrics consistently, and encourage regular cross-pollination of insights. Others reverse the order: they centralize first to build strong fundamentals and then loosen the reins, embedding newly minted data experts into each department. The most effective solution typically emerges through experimentation, guided by the organization’s culture, regulatory constraints, and market pressures.

Regardless of the model—centralized CoE, hub-and-spoke, or embedded—one constant remains: organizational structures must stay flexible as analytics needs evolve. Today’s “perfect” solution could become tomorrow’s roadblock if it calcifies into a rigid hierarchy. Rapid changes in technology, competitive landscapes, and even consumer preferences can quickly render a once-ideal setup obsolete (TDWI). Executive leaders should be prepared to revisit how their data teams are organized every few years, if not more frequently. This might mean loosening or tightening central control, shifting certain teams from the hub to the spokes, or even spinning off new CoEs specializing in emerging areas such as AI ethics or real-time streaming analytics.

A prime illustration of how a CoE can accelerate digital transformation comes from banking giant BBVA (BBVA). In 2014, BBVA launched a Data Science Center of Excellence that operated with a degree of autonomy—both physically and structurally—separate from the traditional bank hierarchy. This bold move allowed BBVA to attract specialized data talent and foster a culture of innovation free from legacy processes. The CoE tackled forward-looking initiatives that might have languished under the day-to-day pressures of business-as-usual. Over time, these successes prompted the formation of a formal Data Office reporting directly to the CEO, signaling that data was no longer a side project but a core corporate asset. Such cases demonstrate how a well-supported CoE can evolve from a niche innovation hub into a central pillar of organizational strategy.

Executives often ask how to determine the “best” model for their organization. The honest answer is that there is no one-size-fits-all solution. A smaller firm might find that a simple, centralized team is enough to handle its analytical workload and maintain consistent standards. A sprawling conglomerate, on the other hand, could need a carefully balanced hub-and-spoke model to adapt analytics solutions to radically different divisions. The key is to remain pragmatically flexible, allowing the structure to adapt over time as the company’s analytics maturity grows and as new business challenges emerge.

It is equally crucial to ensure that any organizational structure supports end-to-end data science processes. The leadership team must provide clear mandates and resources so that data collection is standardized across departments, data cleaning protocols are established, and rigorous model validation practices are shared widely. Without coherent leadership oversight, departmental silos can lead to incomplete or contradictory insights. A CoE or similar coordinating mechanism can help prevent duplication of efforts and promote the adoption of advanced techniques—be they deep learning, natural language processing, or real-time analytics—across the enterprise.

Ultimately, structure is only half the battle; culture and leadership are the true catalysts that determine whether data science flourishes or fizzles out. Even a perfectly designed CoE or hub-and-spoke network will flounder if senior executives do not consistently champion data-driven thinking. Leaders must be willing to back analytics teams with the budget, talent, and tools they need to solve real commercial problems. They must also celebrate data-driven wins and treat failures as learning opportunities rather than reasons to sideline analytics projects.

Moreover, as AI ethics and regulatory scrutiny intensify, organizations may find themselves needing specialized teams devoted to transparency, bias mitigation, and compliance. These evolving demands suggest that the ideal “structure” for data science is one that can shift and grow. In some cases, that means spinning up dedicated CoEs for hot-button topics like responsible AI, while in others, it means embedding ethical oversight into the main data science function. Either way, success depends on building an organizational blueprint that can be reconfigured in response to technological leaps and market changes.

Establishing a Center of Excellence, adopting a hybrid hub-and-spoke model, or embedding data teams directly within each division are all valid pathways to harnessing analytics for commercial gain. There is no universal formula, just as there is no universal definition of “analytics success.” Each organization’s journey will be shaped by its culture, industry, and strategic ambitions. Yet certain guiding principles hold true across contexts: standardize critical processes, enable knowledge-sharing, maintain close ties between data teams and frontline staff, and never let your structure become so rigid that it stifles innovation.

In a world where data competes with intuition and politics for influence, the organizational framework you choose can tip the balance decisively in favor of evidence-based decision-making. By thoughtfully designing (and continually refining) how data science is positioned within the firm, executives can set the stage for transformative change—leading to more accurate forecasts, more personalized customer experiences, and ultimately, a resilient competitive edge in an unpredictable market.

13.4. Ongoing Education & Support

The reality of data science is that it never sleeps. New technologies, modeling techniques, and software platforms emerge almost daily. Meanwhile, the demands on data teams intensify as organizations scale up their analytics capabilities. This constant state of flux means that integrating data science is not a one-time project or a fleeting strategic priority; it is an ongoing journey. The workforce must adapt continuously, acquiring new skills and refining old ones to keep pace with evolving business needs. Companies that neglect sustained training eventually face the dreaded “skills gap,” where the workforce simply cannot leverage data insights effectively. Such organizations then tend to blame analytics for a “lack of ROI,” when the real culprit is often underinvestment in people’s skills.

In the early days of an analytics initiative, many executives take the path of least resistance, hiring a handful of experts and hoping their brilliance will “trickle down” to the rest of the company. While hiring top-tier data scientists or sending select leaders to high-priced courses might generate short-lived enthusiasm, it usually fails to transform an entire organization (McKinsey). A more enduring approach is to build broad-based capability. Rather than bringing in data-savvy hires to do all the heavy lifting, forward-looking firms reskill their existing workforce so that employees at all levels can function comfortably with data (McKinsey). This process typically includes training business managers to interpret analytics output with enough savvy to challenge assumptions, teaching analysts new tools for advanced modeling, and training frontline staff to employ data when making everyday decisions.

Survey research links widespread data literacy to tangible commercial benefits (DataCamp). One study found that enterprises with strong data literacy could increase their overall value by up to 5% relative to peers (DataCamp). Another found that 74% of data leaders believe teams with robust data skills consistently outperform those without (DataCamp). Skeptics rightly note that correlation does not imply causation: firms that invest in data literacy may also be better managed or better funded. Still, when data-savvy teams outperform their competition this consistently, the pattern is hard to dismiss as mere coincidence.

A best practice that has gained traction among analytics-savvy organizations is to develop in-house training programs or “analytics academies.” While it might be tempting to outsource all training needs to external vendors, these generic courses often fail to address an organization’s specific tools, data infrastructure, and business context. By contrast, an internal academy can customize the curriculum around practical use cases and ensure participants learn within the organization’s own technology ecosystem (McKinsey).

Executives often worry about the cost and effort required to launch an internal academy. Yet the payoff can be substantial. These academies typically offer targeted tracks: one for executives needing to interpret data on strategic dashboards, another for managers seeking to integrate analytics into project planning, and a more advanced track for data professionals honing new modeling techniques. Google and Airbnb are famous for their internal “data universities,” but banks, manufacturing giants, and healthcare systems are increasingly catching on. In truth, building an internal academy is not just about teaching code. It is also a cultural initiative that helps employees shed any lingering fears about data (“I’m not a math person”) and embrace an evidence-based mindset that can spread organically across the company.

Training employees to press the right buttons in a dashboard is only step one. True data literacy goes further, instilling in the workforce a natural inclination to ask, “What do the numbers tell us?” at every turn. Executives who wish to see this culture take hold should push beyond the standard workshops and e-learning modules. The goal is to make data-driven discussion as routine as financial reviews or project updates.

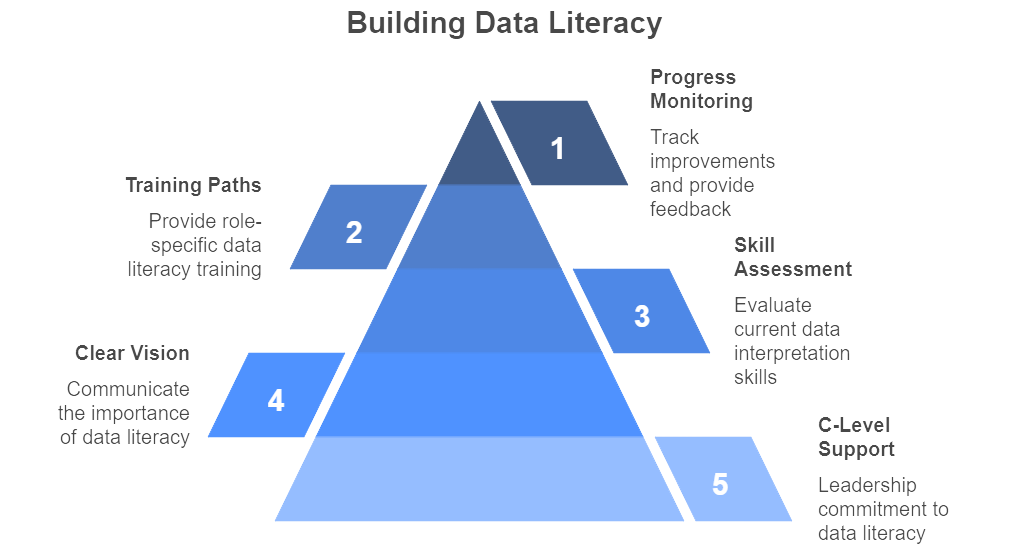

Figure 4: Illustration of the progressive journey of building data literacy, showcasing how each component, from foundational support to ongoing evaluation, contributes to a data-driven culture. (Image by Napkin).

Experts recommend a multi-step approach for instilling data literacy throughout an organization (The Data Literacy Project). First, secure C-level support to ensure the entire effort is taken seriously; nothing derails a training program faster than leaders who ignore or contradict its lessons. Next, articulate a clear vision of why data literacy matters. If employees sense that it is just another corporate fad, they will tune out. Companies can then assess current skill levels (yes, that might mean asking some pointed questions about who actually knows how to interpret a histogram) and provide targeted training paths for different roles. The process does not end there, however: monitoring progress, celebrating small wins, and creating feedback channels all help embed data literacy as a core competence.

An underappreciated aspect of data literacy is teaching the cultural norms that accompany evidence-based work. This includes encouraging employees to question assumptions, share data openly across departments, and accept that data analysis sometimes disproves entrenched beliefs. Admittedly, it can be uncomfortable for managers to see their favorite strategy contradicted by a bar chart. But in a mature, data-literate culture, these moments are no longer seen as threats; they are embraced as opportunities to learn and refine.

Of course, running a few workshops will not solve every data skill gap for the next decade. Once training is complete, employees need ongoing support to maintain and develop their skills. Mentoring programs are particularly effective for bridging the divide between novices and experts. Pairing an experienced data practitioner with an enthusiastic learner can accelerate real-world skill development, especially if the mentor helps apply analytics directly to the learner’s day-to-day tasks. Another strategy is to maintain an internal helpdesk or digital platform where employees can seek assistance on everything from interpreting regression outputs to building complex data visualizations (DataCamp).

Organizations that treat data training as an ongoing affair often see improvements in employee engagement and retention. Staff recognize that the company values their professional growth, and they see a clear path for advancing their capabilities. According to some reports, robust corporate data training programs correlate with higher talent retention (DataCamp). While that might sound a bit too rosy, the logic is straightforward: employees who feel more empowered to do their jobs well—and who are upskilling in high-demand areas like AI and data science—are less likely to jump ship.

A compelling example comes from Mass General Brigham, a major U.S. healthcare provider. After investing heavily in a new data warehouse, leadership discovered that physicians and administrators were barely using it. Instead of simply blaming “user resistance,” they recognized that many staff lacked the analytical background needed to harness the platform’s capabilities (Driving Strategic Advantage Through Widespread Analytics Adoption). The organization launched a multi-pronged program involving formal classroom training on data tools, an internal user community for shared troubleshooting, and designated analytics coaches who made rounds with medical staff to address questions in real time. In the period that followed, usage of the data warehouse soared, and clinical teams began to rely on analytics for everything from patient throughput optimization to targeted care interventions. This reinforced the notion that the best technology in the world is worthless if employees do not know how, or why, to use it.

Some executives—perhaps those with a dash of cynicism—may wonder why we keep hammering on culture and mindset. The blunt truth is that an analytics strategy fails the moment the workforce reverts to old habits. If a manager trusts a “gut feel” approach and ignores an advanced forecasting model, the investment in data science is squandered. Continuous training and data literacy initiatives serve as a bulwark against backsliding into the pre-analytics dark ages.

It is equally vital that organizations celebrate data-driven decision-making. Company-wide recognition of successful analytics initiatives (say, a manager whose data-backed pilot project reduced operational costs) reinforces the notion that data is not just an afterthought but a central pillar of strategic thinking. These cultural cues, combined with robust training and support, help form an environment in which data-informed decisions become second nature.

Ultimately, continuous training and data literacy programs enable all employees to speak the same language when they encounter metrics, predictive models, or strategic dashboards. When these skills are widespread, data scientists no longer need to act as full-time translators between advanced analytics and an uninitiated workforce. Instead, the conversation shifts to “How do we use these insights most effectively?” That is when data science transcends hype and becomes a genuine competitive advantage.

For leaders, the takeaways are clear: do not rely on quick fixes or glamorous hires alone. Make a sustained commitment to skill development across the entire organization. Formalize that commitment through an in-house academy or targeted training programs tailored to different roles. Reinforce these lessons with accessible support structures, from mentoring to user communities. Maintain an unwavering focus on cultural transformation, ensuring that employees do not merely acquire new tools but learn to trust data as a fundamental component of decision-making. When done right, the benefits extend beyond improved performance metrics. Employees gain new competencies, the organization gains a deeper talent pool, and the resulting data culture ultimately drives innovation and growth.

13.5. Ensuring Long-Term Value Realization

Adopting data science in any organization without a clear strategic vision is like driving a Ferrari with no destination in mind: it might be exhilarating for a while, but eventually someone will ask, “Where are we actually going?” Successful data integration must begin with an unambiguous understanding of how analytics will advance key corporate objectives. This is not just about slapping a few models onto existing processes; it is about ensuring every data initiative has a direct line of sight to business priorities. Early in the planning phase, senior leaders should ask: “Which parts of our operation stand to gain the most from data-driven insights? Is it customer experience, risk mitigation, supply chain optimization, or some other focal point?” By targeting high-impact areas, an organization can concentrate resources where they will yield the greatest return (Gartner).

Once those high-value targets are identified, it becomes crucial to articulate a vision that resonates enterprise-wide. That vision should connect the dots between data science capabilities and tangible outcomes like increased revenue, reduced costs, or improved stakeholder experiences (Gartner). Make no mistake: this is not an exercise in management jargon. If the workforce at large does not understand how analytics initiatives serve the larger mission, enthusiasm quickly wanes. By collaborating with multiple departments—marketing, operations, finance—executives can craft a data strategy that harmonizes with core business strategies. When everyone knows why data science matters, not just that it is “the next big thing,” sustained support and funding become far easier to secure.

A timeless adage in analytics circles is: “Think big, start small, scale fast.” Organizations that attempt a massive, multi-year analytics overhaul right out of the gate often discover that complexity and bureaucracy can derail even the best-laid plans. Instead, savvy leaders identify a handful of discrete, highly visible problems that data can solve in a relatively short timeframe. These pilot projects or proofs-of-concept (POCs) allow the team to build technical expertise, demonstrate impact, and refine processes before rolling out a broader transformation (Nesta).

Imagine a retail chain grappling with markdown optimization. Rather than applying a cutting-edge machine learning algorithm to every store in the country, it might pick just two or three regions as a testing ground. If the pilot shows a five percent uplift in margins, the CFO will be far more receptive to budget requests for scaling the initiative nationwide. Likewise, a financial services firm interested in churn prediction might focus on a specific product line before extending the model across all lines of business. By delivering quick wins, the data science team builds credibility and generates real-world examples that highlight the “why” and “how” of analytics. These early success stories can then be trumpeted internally, fueling momentum for further investment.
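To make the pilot logic concrete, here is a minimal Python sketch of how a team might estimate the uplift from such a regional test using a difference-in-differences comparison. The chapter does not prescribe an evaluation method; the function name `margin_uplift` and all margin figures are hypothetical. The idea is to subtract the change seen in comparable control regions, so that market-wide effects (a holiday season, a competitor's price war) are not credited to the model.

```python
from statistics import mean


def margin_uplift(pilot_before, pilot_after, control_before, control_after):
    """Estimate a pilot's effect on gross margin with difference-in-differences.

    Each argument is a list of gross-margin percentages (for example, weekly
    averages) for one group of stores in one period. Returns the absolute
    effect in percentage points and the effect relative to the pilot
    group's baseline margin.
    """
    pilot_change = mean(pilot_after) - mean(pilot_before)
    # The control group's change captures trends that would have hit every store.
    control_change = mean(control_after) - mean(control_before)
    effect_points = pilot_change - control_change
    relative_uplift = effect_points / mean(pilot_before)
    return effect_points, relative_uplift


# Hypothetical weekly gross margins (%) for pilot and control regions.
pilot_before = [30.0, 31.0, 29.0]
pilot_after = [32.0, 33.0, 31.0]
control_before = [30.0, 30.5, 29.5]
control_after = [30.5, 30.5, 30.5]

points, uplift = margin_uplift(pilot_before, pilot_after, control_before, control_after)
print(f"Effect: {points:.1f} margin points ({uplift:.1%} relative uplift)")
# prints "Effect: 1.5 margin points (5.0% relative uplift)"
```

Here the pilot regions gained 2.0 points, but control regions also gained 0.5 points on their own, so only 1.5 points (5% of the 30% baseline) is attributed to the pilot. A real evaluation would also use more weeks of data and a significance test before the figure reaches the CFO.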

Far too many organizations treat data science like a “one-and-done” affair: launch a model, pop the champagne, and assume it will generate returns indefinitely. But the most effective analytics solutions behave more like living organisms than static machines. Over time, the underlying data changes, user behavior evolves, and external market conditions shift. Models that once accurately forecast demand or identified fraud may gradually lose relevance if they are not regularly retrained and refined.

This calls for continuous monitoring and iterative improvement (DigitalDefynd, 2025). A predictive maintenance algorithm used in logistics might require updated sensor data, fresh external variables (such as weather patterns), or a new way of flagging anomalies to keep it razor-sharp. In parallel, frontline staff need channels for providing feedback: without them, staff who find a model’s recommendations unhelpful in real-world scenarios will usually just ignore them, because that is easier than pushing for improvements. By creating a feedback loop of “deploy → measure → learn → refine,” organizations can adapt analytics solutions to shifting realities. Moreover, metrics such as user adoption rates, ROI, and overall impact on key performance indicators should be tracked with the same rigor used when justifying the project in the first place. If the model is underperforming or adoption is lagging, that is not a reason to abandon analytics; it is a sign to revisit assumptions, refine inputs, or upskill users.
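Monitoring can start simply. The Python sketch below computes the Population Stability Index (PSI), a widely used measure of how far the distribution of a model input or score has drifted from its training-time baseline. The equal-width binning, the small `eps` floor for empty bins, and the 0.1 and 0.25 thresholds are common industry rules of thumb, not something the sources cited in this chapter specify; `psi` and `monitoring_status` are illustrative names.

```python
import math


def psi(baseline, recent, bins=10, eps=1e-6):
    """Population Stability Index: sum over bins of (a - e) * ln(a / e).

    `baseline` holds values seen when the model was built (proportions e),
    `recent` holds values from production (proportions a). Bins are
    equal-width over the baseline range; recent values outside that range
    are clamped into the edge bins.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays finite.
        return [max(c / len(values), eps) for c in counts]

    e = proportions(baseline)
    a = proportions(recent)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def monitoring_status(score):
    """Map a PSI value to an action using rule-of-thumb thresholds."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "investigate"
    return "retrain"


# Hypothetical model inputs: a baseline sample and two production samples.
baseline = [float(i % 100) for i in range(1000)]
unchanged = list(baseline)
shifted = [v + 50 for v in baseline]

print(monitoring_status(psi(baseline, unchanged)))  # stable
print(monitoring_status(psi(baseline, shifted)))    # retrain
```

A drift score like this only says that the inputs have changed; whether accuracy has actually suffered still has to be checked against real outcomes, and adoption still needs its own tracking.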

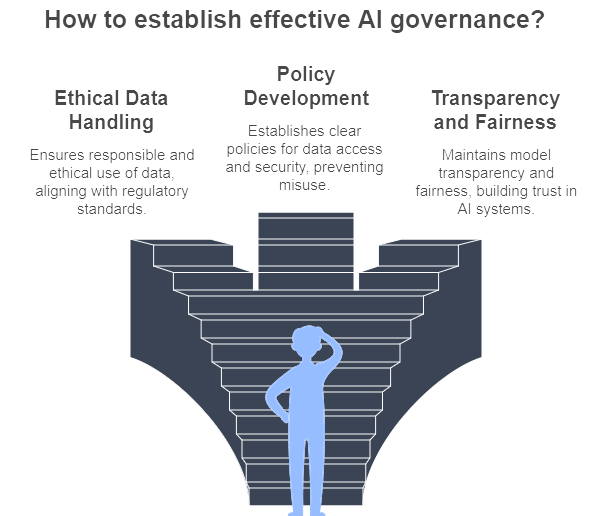

Figure 5: Illustration of the journey towards effective AI governance, where ethical data use and clear policies pave the way for transparent and fair AI applications. (Image by Napkin).

No matter how advanced an AI model is, the initiative can still come crashing down if the enterprise fails to set up proper governance frameworks and change management practices (Elder Research). Governance addresses the pressing questions: Are we handling data responsibly and ethically? Are there policies for data access and security that align with regulations? Is there a process to maintain model transparency and fairness? A governance body, be it a data council or the oft-mentioned Center of Excellence, ensures that analytics projects do not get launched in silos with conflicting standards or practices.

Meanwhile, change management speaks to the human side of the data journey. One of the most common complaints from executives is that staff refuse to adopt new analytics tools. The more brutal truth: many employees see these changes as extra work (or even a threat to their expertise) if they are not properly onboarded. To circumvent this resistance, organizations should communicate candidly about how data science enhances, rather than replaces, human decision-making. They can designate “analytics champions” in each department to serve as evangelists and support peers in understanding the new workflows. High-profile success stories should be showcased to demonstrate that analytics yields tangible benefits—like faster approvals, targeted customer solutions, or cost savings. Over time, as these outcomes accumulate, a cultural shift can occur: analytics goes from being an optional add-on to an integral part of how the company operates.

A perennial challenge in data science adoption is scaling initial successes across different regions, product lines, or departments. Companies that fail to plan for scalability from the outset often find themselves juggling a hodgepodge of bespoke solutions that cannot talk to each other or be replicated cost-effectively. Hence, designing for expansion is non-negotiable if the aim is long-term value realization. This includes deploying robust, cloud-based infrastructures that can accommodate surges in data volume or compute demand. It also involves standardizing processes: if one region has perfected a predictive modeling workflow, that blueprint should be documented in detail so other teams can replicate it with minimal guesswork.

Documentation might not be the most glamorous aspect of data science, but it forms the bedrock of institutional memory. Without it, every new team ends up reinventing the wheel—or, worse, repeating past mistakes. Conducting post-project reviews (or “after-action reviews”) ensures that insights from each initiative are compiled into a central knowledge repository. Over time, these lessons accumulate, creating a living playbook of best practices. It is equally important to remain technologically and strategically agile. Five years ago, many executives had barely heard of deep learning or real-time analytics. Today, these approaches are increasingly ubiquitous. Companies that cling too rigidly to outdated models or platforms risk squandering their competitive edge.

Consider a global logistics company looking to improve on-time delivery rates. After a handful of successful pilots using route optimization algorithms, the company decided to scale the solution worldwide. Yet they quickly discovered that in some regions, infrastructure data was inconsistent, and local staff lacked sufficient analytics training. By addressing these issues up front—investing in data quality, setting up robust data governance, and running targeted training sessions—the firm ultimately created a unified “Delivery Optimization Playbook.” Thanks to this structured approach, the solution is now used by dozens of regional branches, each customizing routes based on local conditions but adhering to the same core methodology. Over several years, incremental improvements to the underlying models have led to measurable gains in efficiency and customer satisfaction. The key? Treating analytics as an evolving product, not a static milestone.

Long-term value realization in data science demands equal parts vision, discipline, and adaptability. It calls for a leadership team that is unequivocally committed to using data as a strategic asset, rather than viewing analytics as a one-off project for next quarter’s earnings call. It also involves empowering all levels of the organization—frontline staff, middle managers, and executives—with the skills and mindsets needed to embrace continuous refinement.

Those who succeed discover that data science can do more than generate quick cost savings or incremental revenue. It can reshape how employees think about problems and opportunities. It can drive innovation and experimentation, spurring the creation of new products, services, or operational strategies. At the same time, it can instill a sense of shared purpose, as each department sees how analytics ties into the company’s larger strategic goals.

Yet none of this endures unless the enterprise systematically embeds analytics into its daily operations and ensures that solutions are scalable, governed by robust policies, and continually improved. In short, real success in data science adoption is not measured by how flashy your first project is, but by whether the entire organization is still deriving value from analytics solutions ten years down the road.

13.6. Real-World Success Stories

The financial sector often stands at the forefront of data science adoption, in part because of the high stakes and heavy regulations that define this industry. Banks that once relied on outdated models for credit decisions or “gut feel” risk assessments have now embraced advanced analytics to detect fraud, optimize marketing campaigns, and refine compliance protocols. One illustrative example is BBVA, a global bank that completely reimagined its operations by launching a dedicated Data & Analytics unit (BBVA). This unit developed cutting-edge solutions—ranging from fraud detection systems to customer retention algorithms—and more importantly, changed how the entire bank valued data. Eventually, data matured into a core executive-level competency, powering everything from strategic decisions to everyday processes.

Capital One offers another well-known story: the company built its business around an “Information-Based Strategy,” treating customer data as a goldmine rather than a nice-to-have (MIT). Data analysis drove every significant decision, especially in credit marketing and product design, helping Capital One outpace competitors who still relied on generic mass mailings or simplistic risk models. Unsurprisingly, banks that follow this path of data-centric thinking often report faster decision cycles, improved credit performance, and better compliance outcomes (Harvard Business Review Analytic Services, 2021) (How Business Leaders can Promote a Data-Driven Culture). Finance might be heavily regulated and occasionally risk-averse, but these success stories show that with a strong top-down vision, even large institutions can pivot and become nimble, data-savvy powerhouses.

The retail sector has experienced massive disruption—and massive opportunities—through the strategic use of data science. Companies like Walmart leverage real-time data from RFID chips, point-of-sale systems, and IoT sensors to precisely forecast demand and optimize inventory (Retail Prowess). The result is fewer empty shelves and less overstock, allowing Walmart to maintain a reputation for reliability while reducing costly excess inventory (Retail Prowess). Crucially, Walmart’s data-oriented approach extends beyond its own stores. By sharing sales data with suppliers through a vendor-managed inventory system, Walmart effectively co-opts partners into a broader data ecosystem, pushing the entire supply chain to operate more efficiently.
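The link between better forecasts and fewer empty shelves can be seen in the textbook reorder-point calculation sketched below in Python. This is not Walmart's method, which the sources do not describe; it is a standard inventory formula that assumes roughly normal, day-to-day independent demand and a fixed supplier lead time. The reorder point is expected demand over the lead time plus a safety stock of z × σ × √L, where z comes from the target service level. The demand history and the name `reorder_point` are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist, mean, stdev


def reorder_point(daily_demand, lead_time_days, service_level=0.95):
    """Return (reorder point in units, safety stock in units).

    Assumes independent, roughly normal daily demand and a fixed lead time,
    so demand variability over the lead time scales with sqrt(lead_time_days).
    """
    avg = mean(daily_demand)
    sd = stdev(daily_demand)
    z = NormalDist().inv_cdf(service_level)  # about 1.645 for a 95% service level
    safety_stock = z * sd * sqrt(lead_time_days)
    # Round up to whole units so the buffer is never understated.
    return ceil(avg * lead_time_days + safety_stock), safety_stock


# Hypothetical unit sales for one product at one store over a week.
history = [20, 22, 18, 25, 15, 20, 20]
rop, buffer = reorder_point(history, lead_time_days=4)
print(rop, round(buffer, 1))  # 91 10.2
```

In practice, σ is often taken from forecast errors rather than raw demand, so a sharper forecast directly shrinks the safety stock needed for the same service level: less overstock without more stockouts.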

On the marketing side, Target famously stirred public debate—and media attention—by predicting major life events like pregnancy using purchase patterns (Davenport, 2013). While some people found the personalization eerily accurate, it demonstrated Target’s ability to harness customer data to refine its product recommendations and coupon strategies. Meanwhile, Amazon’s dynamic pricing and product recommendation engines, fueled by machine learning and real-time analytics, continually adapt to individual shopper behavior. This approach has revolutionized e-commerce, setting consumer expectations for hyper-personalized experiences. Beneath these flashy examples, retail’s real success often hinges on data literacy at the store and warehouse levels. A store manager who can interpret real-time dashboards about stock levels or local buying trends becomes a potent force for on-the-ground optimization.

Healthcare provides some of the most compelling stories of data science in action, as improvements directly affect patient well-being. Mass General Brigham (formerly Partners HealthCare) offers a telling example (Driving Strategic Advantage Through Widespread Analytics Adoption). The organization rolled out an enterprise data warehouse and analytics platform that clinicians and administrators could easily access. Rather than waiting weeks for ad hoc reports, doctors could pull up relevant data with a few clicks, accelerating diagnoses and treatment decisions. Over a two-year period, usage of this analytics platform shot up by 243%, a testament to how quickly medical staff embraced the new technology once they saw the impact on patient care.

Elsewhere, UCSF Health implemented real-time predictive analytics in its ICUs, aiming to identify early signs of sepsis or other complications (DigitalDefynd, 2025). By continuously scanning patient vitals, lab results, and electronic health records, the system raised alerts when it detected patterns indicative of rapid patient deterioration. Early intervention lowered ICU mortality rates and shortened average patient stays—life-saving proof that data can be more than a cost-cutting tool. Meanwhile, Massachusetts General Hospital used predictive models to optimize patient flow, staffing, and resource allocation, reducing unnecessary wait times and alleviating overburdened departments (DigitalDefynd, 2025). These examples underscore a recurring theme in healthcare: when organizations invest in data literacy for clinicians and operational staff, analytics quickly evolves into an indispensable asset that not only cuts costs but can literally save lives.

In the world of fast-moving consumer goods, high volume meets razor-thin margins, making efficiency paramount. Many FMCG players are discovering that data science can drive breakthroughs in demand forecasting, marketing optimization, and even new product development. One anonymized case from Elder Research details how a leading consumer goods firm started with disjointed analytics projects and no coherent strategy (Elder Research). Over time, the company formed an executive steering committee and a hybrid Analytics Center of Excellence, aligning marketing, sales, R&D, and operations on common data protocols.

In its first year, the firm piloted over 30 analytics proofs-of-concept. Within three years, 23 of those had become full-scale implementations, generating a multi-million-dollar net present value (Elder Research). Some initiatives tackled pricing optimization by analyzing real-time sales and competitor data. Others dove into consumer engagement analytics to refine marketing campaigns. The secret sauce, according to executives, was a combination of strong leadership sponsorship and quickly publicized wins—there is nothing like a big headline ROI figure to galvanize other departments to jump on board. Unilever took a similar approach, creating a “People Data Centre” to unify social media analytics, sales figures, and market research. By feeding these insights directly into product teams, Unilever accelerated feedback loops, avoiding costly missteps on new launches and amplifying successful marketing campaigns.

In distribution and logistics, data science often manifests as advanced optimization algorithms, real-time routing systems, and predictive maintenance. One flagship example is UPS and its ORION platform, which recalculates delivery routes on the fly based on traffic conditions, parcel volumes, and driver locations (UPS). By systematically optimizing each driver’s stop sequence, which for a typical route has an astronomically large number of possible orderings, UPS saves around 100 million miles and 10 million gallons of fuel annually. This is not a back-office experiment: drivers see optimized routes on their handheld devices, and managers monitor progress in near real-time. It is a textbook illustration of how data science can weave into daily operations to produce significant financial and environmental gains.
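ORION itself is proprietary, and the sources cited here do not describe its algorithms. As a rough illustration of the underlying problem, the Python sketch below builds a delivery loop with a greedy nearest-neighbor heuristic and then improves it with 2-opt, which repeatedly reverses a segment of the route whenever doing so shortens the total distance. Straight-line distances, a single vehicle, the stop coordinates, and the function names are all simplifying assumptions; real routing systems also handle time windows, traffic, and vehicle capacity.

```python
import math


def route_length(route, points):
    """Total straight-line distance along a sequence of point indices."""
    return sum(math.dist(points[a], points[b]) for a, b in zip(route, route[1:]))


def nearest_neighbor(points, depot=0):
    """Greedy starting route: always drive to the closest unvisited stop."""
    unvisited = set(range(len(points))) - {depot}
    route = [depot]
    while unvisited:
        here = points[route[-1]]
        nxt = min(unvisited, key=lambda j: math.dist(here, points[j]))
        route.append(nxt)
        unvisited.remove(nxt)
    return route + [depot]  # return to the depot at the end of the shift


def two_opt(route, points):
    """Reverse segments between the fixed depot endpoints while that shortens the loop."""
    best = list(route)
    improved = True
    while improved:
        improved = False
        for i in range(1, len(best) - 2):
            for k in range(i + 1, len(best) - 1):
                candidate = best[:i] + best[i:k + 1][::-1] + best[k + 1:]
                if route_length(candidate, points) < route_length(best, points) - 1e-9:
                    best = candidate
                    improved = True
    return best


# Hypothetical stop coordinates (in km); index 0 is the depot.
stops = [(0, 0), (4, 0), (1, 1), (4, 3), (0, 3), (2, 2), (3, 1)]
greedy = nearest_neighbor(stops)
optimized = two_opt(greedy, stops)
print(f"greedy: {route_length(greedy, stops):.2f} km, "
      f"after 2-opt: {route_length(optimized, stops):.2f} km")
```

Neither heuristic guarantees the shortest possible route; both trade optimality for speed, which matters when routes must be recomputed for many drivers at once.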

DHL Supply Chain, meanwhile, introduced machine learning to predict warehouse workload fluctuations. These forecasts inform the scheduling of staff and the allocation of automated picking robots, minimizing idle time and bottlenecks (DHL, 2018). In some warehouses, augmented reality (AR) tools guide workers to the correct items, raising picking accuracy and speed by up to 25%. On the high seas, Maersk streams real-time data from cargo ship engines into predictive models. By anticipating maintenance needs and pinpointing potential failures, Maersk avoids expensive vessel downtime that could disrupt global supply chains. The recurring motif in these success stories is straightforward: data science solutions thrive when they align tightly with operational goals and when frontline staff are fully trained to trust—and act on—algorithmic recommendations.

Although these cases span very different sectors, they share certain principles. First, each company that succeeded with data science had buy-in from top executives who championed data-driven thinking, sometimes even rewriting job descriptions or departmental structures to accommodate analytics teams. Second, they aligned analytics projects with strategic or operational pain points, whether credit risk, stockouts, patient safety, or route optimization. Third, they invested heavily in frontline adoption and data literacy, ensuring that staff beyond the analytics department understood and trusted the solutions. Finally, most of these organizations adopted a continuous improvement mindset, regularly refining models, updating data pipelines, and adapting to changing market or clinical conditions.

It can be tempting to read these stories and assume that success requires either near-infinite budgets or a staff of PhD data scientists. The truth is more nuanced. While having robust resources helps, many of these organizations started with modest pilot projects that proved the concept before expanding. The key is to integrate analytics into the core of the business—whether that business is diagnosing patients, shipping goods, or selling shampoo—so that data science becomes not a novelty but a routine tool that employees rely on every day.

13.7. Conclusion and Further Learning

Integrating data science into business operations is more than a technical endeavor – it is a transformational journey for the entire organization. This chapter has shown that building a data-driven enterprise requires simultaneous attention to culture, structure, skills, and strategy. Culturally, companies must cultivate an environment where data is trusted and routinely used, with leaders setting the tone and employees at all levels embracing the shift in mindset. Structurally, organizations benefit from flexible models (such as Centers of Excellence, federated teams, or hybrid approaches) that institutionalize analytics capabilities while remaining responsive to frontline needs. Equally important is investing in people: continuous training, upskilling, and data literacy efforts empower staff to use insights effectively and confidently. Through effective communication channels and roles such as analytics translators, the persistent gap between data teams and business units can be bridged, so that analytical findings translate into action on the ground.

The long-term adoption of data science is sustained by strategic alignment and iterative improvement. Companies should anchor their analytics initiatives to clear business objectives and demonstrate early wins to build momentum. Governance mechanisms and change management practices then help embed the new data-driven processes into daily operations. The case studies across industries show that when these elements come together – a supportive culture, cross-functional collaboration, adaptable structures, skilled people, and focused strategy – organizations achieve marked improvements in efficiency, decision quality, and innovation. Integrating data science is therefore an ongoing commitment, but one that creates a virtuous cycle: data-driven insights lead to better decisions and outcomes, which in turn strengthen the organization’s conviction in, and capability for, further use of data. By internalizing the lessons and best practices outlined in this chapter, business leaders can steer their companies beyond merely implementing analytics, toward becoming data-driven enterprises that thrive on continuous learning and evidence-based action.

Because the field of data science in business is vast and constantly evolving, further exploration is essential to mastering how these concepts apply in real-world scenarios. The following prompts are designed to provoke deeper thinking and research, reinforcing your understanding and revealing new dimensions of integrating data science into business operations. Working through them will help you adapt the chapter’s lessons to diverse contexts, critically analyze success factors, and anticipate challenges. Use the prompts to delve into case studies, reflect on your organization’s situation, or spark discussions with peers – each is an opportunity to translate theory into practical insight.

Assessing Data Culture: How would you evaluate an organization’s current data culture and readiness for data science integration? Consider what signs indicate a truly data-driven mindset versus mere lip service, and propose methods (surveys, interviews, metrics) to gauge cultural maturity.

Leadership’s Role: In what specific ways can top executives demonstrate their commitment to a data-driven approach? Outline at least three actions (e.g. in communication, policy, resource allocation) a CEO or senior leader should take to champion analytics and influence organizational culture.

Overcoming Resistance: Identify common reasons why frontline employees might resist using data or analytics tools. How would you address each of these concerns? Formulate a change management plan that transforms skepticism into advocacy among staff (hint: think about trust, simplicity of tools, and WIIFM – “What’s in it for me”).

Data Translator Skills: What competencies make an effective “analytics translator” and why are they critical in bridging technical teams and business units? Devise a development program to cultivate these skills in either existing staff or new hires, and discuss how their impact could be measured.

Improving Communication: Examine a scenario where a data science team’s analysis was not acted upon by the business. What communication gaps might have caused this? Propose strategies to improve data storytelling and dialogue so that insights lead to decisions (consider visualization, language choice, frequency of interaction).

Designing a CoE: If you were to establish an Analytics Center of Excellence in a mid-sized company, what would its charter, structure, and key responsibilities be? Outline a charter document, including how the CoE will interface with other departments and how success will be evaluated in its first year.

Centralized vs Decentralized: Debate the pros and cons of centralized analytics teams versus decentralized (embedded) analytics professionals in business units. In what situations might a hybrid model be preferable? Support your argument with examples of organizational context (such as company size, industry, or analytics maturity).

Case Study Analysis: Choose one of the industry case studies mentioned (e.g. Walmart’s inventory analytics or Mass General’s data adoption). Investigate further and identify the critical success factors in that case. What challenges did they face, and how were those overcome? Present your findings as a brief case analysis, noting lessons that could apply to other sectors.

Data Literacy Programs: Imagine you are tasked with improving data literacy in a global organization. What key components would you include in a data literacy program? Describe steps like assessing baseline skills, tailoring curriculum for different roles, and incentivizing participation. How would you measure the program’s impact over time?

Continuous Learning Culture: Why is fostering a culture of continuous learning important for analytics integration? Provide examples of practices that encourage ongoing skill development (e.g. hackathons, certifications, mentorship). How can an organization balance employees’ learning time with their regular duties effectively?

Technology vs People: Many companies invest heavily in analytics technology. Discuss the statement: “Technology implementation is futile without the right people and processes.” Use evidence or readings to assess how much outcomes depend on cultural adoption versus the software itself. Can you find an example of an analytics initiative that failed because of non-technical issues?

Ethical Considerations: As data science is embedded into operations, ethical considerations (like data privacy, algorithmic bias) become crucial. How should an organization integrate ethical checkpoints into its data-driven processes? Describe a framework for ethical governance that involves both data scientists and business leaders in oversight.

Scaling Analytics Projects: What are the biggest challenges when scaling a successful pilot analytics project to an enterprise-wide solution? Consider technical challenges (data integration, performance at scale) as well as human factors (change management across units). How would you mitigate these to ensure the scaled solution delivers the anticipated value?

Measuring ROI of Data Science: Develop a set of metrics or KPIs to assess the return on investment from data science initiatives. How would you attribute business outcomes (like revenue uplift, cost savings, customer satisfaction) directly or indirectly to analytics efforts? Discuss the time horizon for these returns and the importance of quick wins vs long-term gains.

Role of Middle Management: Middle managers can either be champions or bottlenecks in analytics adoption. What specific role should they play in integrating data science into daily operations? Propose ways to engage and educate middle management so they become facilitators (for example, by using data in their own KPIs or team meetings).

Cross-Functional Teams: Design a cross-functional project team for an analytics initiative (for instance, deploying predictive maintenance in a manufacturing plant). Who should be on this team (data scientists, engineers, IT, operators, etc.), and what would each role contribute? How do you ensure effective collaboration and knowledge sharing within such a diverse team?

Sustaining Executive Support: Initial executive buy-in can wane if results are slow. How would you keep executives engaged in and supportive of data science programs over the long run? Outline a communication and governance cadence – e.g. quarterly executive dashboards of analytics impact, leadership participation in data demos – that maintains visibility of the analytics program’s value.

Adapting to Industry Differences: Compare how data science integration might differ between two industries (say, healthcare vs retail). What unique challenges or opportunities does each sector present (consider regulations, talent availability, type of data, culture)? How should strategies adapt to these differences? For example, discuss the extra importance of data governance in healthcare, or the fast iteration cycles in retail.

Future Trends: Explore an emerging trend (like AutoML, real-time analytics, or data mesh architecture) and discuss how it might further influence the integration of data science into business operations. For instance, how could democratizing data through a data mesh affect the role of a central CoE, or how might real-time streaming analytics open new use cases on the frontline? Consider how organizations can stay ahead by integrating such trends.

Self-Reflection – Your Organization: If you apply the concepts from this chapter to your own organization (or one you are familiar with), what would be the first three steps you’d take to embed data-driven thinking more deeply? Identify current gaps (cultural, skills, process) and draft an action plan to address them. What outcome do you envision in one to two years if these steps are successful?

By grappling with these prompts, you will deepen your comprehension and uncover nuanced insights beyond the chapter’s scope. Each question is an invitation to apply critical thinking, seek out additional resources, and connect theory with practice. Embrace the opportunity to explore – the more you investigate and reflect, the more adept you’ll become at leading and supporting data science integration in any business context. Remember that becoming truly data-driven is a journey of continuous learning, and your curiosity is one of the best tools to propel you forward.

The following practical assignments are designed to reinforce the concepts from this chapter through hands-on application. These strategy-oriented exercises encourage you to put ideas into action in a simulated or real organizational setting. Each assignment includes a clear objective and a set of tasks, guiding you to develop tangible outputs, from cultural assessments to implementation roadmaps. By completing these assignments, you can translate knowledge into skills, honing your ability to drive data science initiatives and overcome real-world challenges. These exercises will not only solidify your understanding but also help you build a toolkit of techniques for integrating data science into business operations effectively.

🛠️ Assignments

📝 Assignment 1: Data Culture Assessment and Plan

🎯 Objective:

Evaluate the current state of data-driven culture in an organization (real or hypothetical) and design a plan to strengthen it.

📋 Tasks:

Conduct a structured assessment by gathering information on decision-making processes, employee attitudes toward data, leadership support, and existing analytics usage. This could involve interviewing 5–10 stakeholders across levels or running a survey with questions targeting cultural indicators (e.g. “How often do you use data to support your decisions?”). Compare your findings against the best practices discussed in the chapter (such as leadership signaling and tolerance for experimentation). Identify key cultural gaps: for example, frontline employees may rely on gut instinct, or staff may fear punishment for mistakes. Document 3–5 major findings. Then, for each finding, propose specific interventions. For instance, if you discover low trust in data, you might run demonstration sessions that show how accurate the data is, or introduce a no-blame pilot period to encourage experimentation. Finally, develop a brief action plan (covering 6–12 months) that outlines how to implement these interventions, who will lead them, and how to measure progress (for example, improved survey scores or increased use of analytics in decisions).

💡 Guidance:

Leverage frameworks from the chapter (like leadership communication strategies and incentive tweaks) in your plan. This assignment builds skill in diagnosing cultural readiness and prescribing changes – a critical first step in any data science integration.
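The survey portion of Assignment 1 can be scored with a few lines of code. The following is a minimal Python sketch rather than a prescribed method. It rests on several assumptions:

- a 1–5 Likert scale;
- each survey question has already been mapped to a single cultural dimension;
- the dimension names, the sample responses, and the gap threshold of 3.0 are all hypothetical.

The sketch averages responses per dimension and flags the weakest dimensions as candidate gaps. It also compares a baseline survey with a follow-up so you can track progress.

```python
from statistics import mean

# Hypothetical sample data: each dict holds one respondent's 1-5 rating
# for each cultural dimension. Dimension names are illustrative only.
BASELINE = [
    {"leadership_support": 4, "data_usage": 2, "experimentation": 3, "trust_in_data": 2},
    {"leadership_support": 5, "data_usage": 3, "experimentation": 3, "trust_in_data": 2},
    {"leadership_support": 3, "data_usage": 2, "experimentation": 2, "trust_in_data": 1},
]

GAP_THRESHOLD = 3.0  # assumed cut-off: scores below the scale midpoint count as gaps


def dimension_scores(responses):
    """Average score for each dimension across all respondents."""
    dimensions = responses[0].keys()
    return {d: round(mean(r[d] for r in responses), 2) for d in dimensions}


def find_gaps(scores, threshold=GAP_THRESHOLD):
    """Dimensions scoring below the threshold, weakest first."""
    gaps = [d for d, s in scores.items() if s < threshold]
    return sorted(gaps, key=lambda d: scores[d])


def progress(baseline_scores, follow_up_scores):
    """Change per dimension between two survey rounds (positive = improvement)."""
    return {
        d: round(follow_up_scores[d] - baseline_scores[d], 2)
        for d in baseline_scores
    }


if __name__ == "__main__":
    scores = dimension_scores(BASELINE)
    print(scores)
    print(find_gaps(scores))  # → ['trust_in_data', 'data_usage', 'experimentation']
```

Averages hide spread, so in practice you might also break scores down by level or department. This shows, for instance, whether frontline staff and managers perceive the culture differently. Rerunning `dimension_scores` on a follow-up survey and passing both results to `progress` gives the "improved survey scores" measure mentioned in the tasks.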

📝 Assignment 2: Communication Strategy between Data Teams and Frontline

🎯 Objective:

Create a communication and collaboration strategy to enhance the partnership between an analytics team and a specific business unit’s frontline staff.

📋 Tasks: