Chapter 14

Managing Risk and Ethical Considerations in Data Science

"I think with not just ChatGPT, AI, you have to think about the up and the downside. Trust is built by the drop, withdrawn in buckets." — Ginni Rometty

In today’s data-driven business environment, executives must balance innovation with responsibility. This chapter explores how unchecked data science initiatives can expose organizations to significant risks – from privacy breaches to biased AI decisions – and erode stakeholder trust. It provides a strategic overview of the risk landscape in data science, outlines core ethical principles (such as fairness, accountability, and transparency), and presents frameworks for risk management and governance. With real-world case insights and best practices, business leaders will learn to embed ethics into data projects, ensure compliance with evolving regulations, and ultimately align AI and analytics with corporate values and societal expectations for sustainable success.

14.1. The Strategic Importance of Risk & Ethics in Data Science

Data science has undoubtedly unlocked new frontiers of innovation, efficiency, and competitive advantage for businesses worldwide. Yet this newfound power comes with a warning label: it can backfire spectacularly if misused or mismanaged (McKinsey, Year). From inadvertent privacy breaches to algorithmic biases that trigger public outrage, data-related missteps can spiral into financial penalties, lawsuits, and irreparable brand damage. Some executives still cling to the fantasy that these are purely technical issues that magically resolve themselves if you hire enough data engineers. In reality, failing to address the ethical and reputational dimensions of data-driven initiatives is akin to letting people handle nitroglycerin without safety gear.

In the era of hyper-transparency, even minor ethical lapses can morph into massive reputational crises overnight. A single scandal involving discriminatory AI or misuse of personal data can nullify years of carefully curated brand equity (McKinsey, Year). That is the double-edged sword of data science: done right, it offers unparalleled insights and strategic gains; done poorly, it invites a world of legal troubles and public distrust.

No longer can executives delegate data ethics to the IT department and hope for the best. As consumer data streams expand and algorithms become more complex, board members and C-suite leaders face growing personal and corporate liability for lapses. It is not hyperbole to say that regulatory agencies and courts are beginning to hold top decision-makers responsible when data misuse occurs under their watch (McKinsey, Year). After all, if the CEO brags about a new AI-driven initiative on quarterly earnings calls, it becomes difficult to argue later that he or she had “no idea” how personal data was being collected or analyzed.

The message is clear: if you are in senior leadership, you are on the hook for both the successes and the failures of your organization’s data-driven efforts. Whether it is a breach of customer privacy, a machine learning model that inadvertently discriminates based on race or gender, or a compliance meltdown under regulations like GDPR, the blame can—and likely will—land on the C-suite. This shift in accountability may sound intimidating, but it also highlights an emerging source of strategic advantage: executives who proactively champion ethics and responsible data use can position their organizations ahead of the regulatory and reputational curves.

In the digital era, corporate trust has become as valuable as any tangible asset on your balance sheet. Customer goodwill, built up over years of consistent service, can disintegrate in a flash if the public believes you are playing fast and loose with their personal data. Conversely, companies known for stringent ethical standards and transparent data practices can command loyalty and even justify premium pricing (Deloitte, Year). Many consumers are growing more conscious of how businesses handle their data, and regulatory bodies are catching up too.

One might argue that “trust is built by the drop and lost by the bucket.” This rings painfully true for organizations that fail to address AI fairness or data privacy. Once the public perceives a breach of integrity, regaining lost trust can be an uphill battle. This is why leading companies increasingly treat data ethics not as a moral bonus but as a strategic pillar that underpins brand differentiation, customer retention, and shareholder confidence.

Skeptics might scoff at the notion that ethical considerations do anything but slow down the pursuit of revenue. Yet real-world evidence suggests the opposite (Deloitte, Year). Businesses that incorporate ethical safeguards from the start stand a much better chance of avoiding costly fallout—regulatory fines, class-action lawsuits, and PR nightmares. Moreover, when an organization is seen as a responsible steward of data, it tends to enjoy smoother adoption of new technologies by consumers, partners, and regulators alike.

Consider the growing pressure around generative AI tools that can produce everything from synthetic images to customer service chat responses. While the hype is enormous, so are potential risks: intellectual property infringement, disinformation, and algorithmic biases, to name a few. Executives who integrate robust ethical frameworks early can seize the upside—rapid product innovation, streamlined customer interactions—while minimizing liabilities. It is not about inhibiting innovation but channeling it responsibly to maximize returns over the long run.

Despite the compelling evidence that ethics and governance are indispensable, many organizations remain dangerously behind the curve in establishing formal standards (Deloitte, Year). Companies race to deploy machine learning models and AI-driven services, only to discover too late that they have no policies in place to handle unintended biases or data privacy breaches. A recent survey found that over half of participating executives admitted they were uncertain whether their organizations even had AI ethics standards—an admission that should ring alarm bells in every boardroom.

The lightning-fast advancement of analytics and AI capabilities is not going to slow down for organizations to catch up. Firms that fail to implement clear guidelines, oversight committees, and education programs for responsible data use are rolling the dice with their brand reputation. Regulatory crackdowns and public blowback are only a matter of time for those that blindly chase innovation without planning for its ethical and legal ramifications.

A central theme of this book has been that data science initiatives cannot thrive in isolation. The same holds true for risk management and ethics. Leaders must design a governance structure—often involving a data or AI ethics committee—that sets clear parameters around data collection, usage rights, algorithmic transparency, and accountability. This committee works best when it includes stakeholders from legal, IT, data science, operations, and perhaps most importantly, the executive suite. A siloed approach to ethics—like delegating it all to the Chief Risk Officer—practically guarantees blind spots.

Beyond governance, continuous training in ethical and regulatory considerations is crucial for data scientists, analysts, and decision-makers. As an analogy, you would never let a pilot take off without extensive training on safety protocols. Why, then, are some companies content to let newly minted “citizen data scientists” operate with zero awareness of how quickly a well-intentioned model can become discriminatory or intrusive?

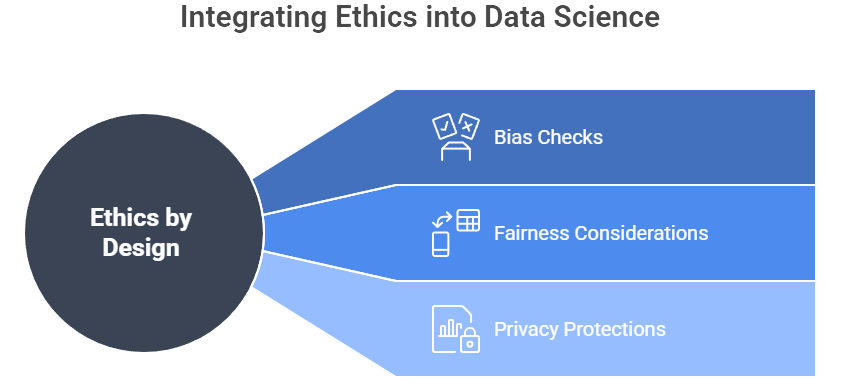

Figure 1: Illustration of the foundational principles for ethical data science, highlighting how bias checks, fairness, and privacy are integral to responsible AI development. (Image by Napkin).

Finally, organizations should integrate “ethics by design” into their data science development cycles. This means building in checks for bias, fairness, and privacy from the outset, rather than slapping on disclaimers after deployment. Whether one is developing a recommendation algorithm, an underwriting model for insurance, or a chatbot for customer service, early ethical considerations can avoid much bigger headaches down the line.

Ethics and risk management in data science are not about adding bureaucratic hoops or stifling creativity. On the contrary, they enable sustainable innovation by helping companies avoid catastrophic missteps that can derail progress. Executives who fail to see data ethics as a board-level priority risk learning the hard way when regulators or the court of public opinion come knocking. Those who proactively implement robust ethical frameworks, however, build the kind of institutional trust that can amplify every other data science investment.

Skeptical executives might wonder if all this talk of ethical AI and responsible data stewardship is just corporate window dressing. The simplest answer: wait and see what happens to the next company caught in a data scandal. The costs—legal, financial, and reputational—are measured in the billions, and recovery is never guaranteed. Meanwhile, data-forward organizations that conduct themselves responsibly reap tangible rewards such as better customer loyalty, regulatory goodwill, and a deeper reservoir of trust to tap into when launching new data-driven products or services.

14.2. Understanding the Data Science Risk Landscape

One of the most glaring risks in the modern data-driven era is the mishandling—or outright exploitation—of personal information. For all the business value that aggregated user data can provide, it only takes a single high-profile breach to bring an organization to its knees. When the Facebook–Cambridge Analytica scandal erupted, it was not a simple case of technical hacking: data from millions of users had been “legally” collected through an app but then repurposed in ways that violated user expectations and arguably their consent (Allstate Identity Protection, Year). The public furor over that misuse led to multibillion-dollar fines, damaged user trust, and gave regulators around the world a rallying cry to tighten data privacy laws (IAPP, Year).

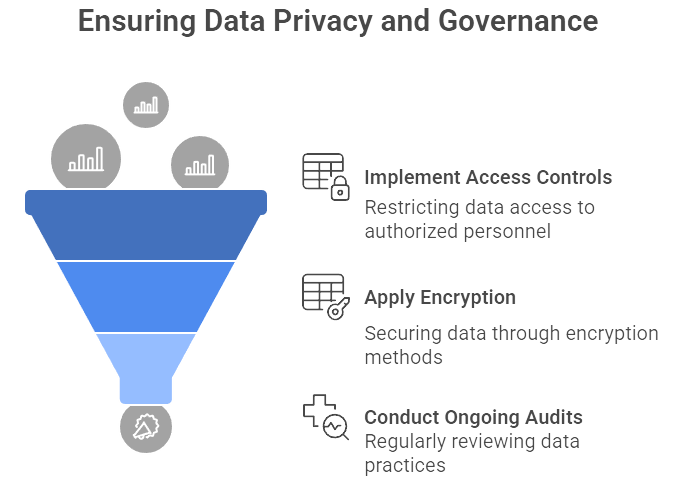

Figure 2: Illustration of the progressive layers of data protection, showcasing how access controls, encryption, and audits work together to ensure privacy and governance. (Image by Napkin).

Organizations hoping to avoid such catastrophes must implement robust data governance policies. This /includes adopting the principle of data minimization—collecting only what is strictly needed—along with stringent access controls, encryption, and ongoing audits. If the marketing team complains that fewer data points limit their ability to profile customers, remind them that a short-lived advantage can quickly become a reputational nightmare if private information lands in the wrong hands. In short, forging a corporate culture that respects user privacy is not just about tiptoeing around legislation like GDPR or CCPA. It is about preserving brand equity, customer loyalty, and executive careers.

Building an AI model is not an exercise in generating abstract math equations. Every dataset and algorithmic rule reflect certain assumptions, often unintentionally. When those assumptions are skewed—due to incomplete data, historical prejudices, or oversimplified features—bias is baked into the system. The now-infamous Amazon recruiting tool provides a cautionary tale: its AI learned from past hiring data that overwhelmingly favored male applicants, so it effectively downgraded female candidates (Reuters, Year). The result? A hasty project shutdown, negative headlines, and internal soul-searching about just how “smart” these models really were.

Addressing bias is not a feel-good side project. It is a moral imperative and a bottom-line necessity. Leaders who fail to invest in bias detection and mitigation risk reputational damage, legal challenges, and the potential unraveling of expensive AI initiatives. The solutions range from thorough data audits and more inclusive training sets to algorithmic fairness techniques designed to spot and correct skewed outcomes (5 Ethical Considerations of AI in Business, Year). Whichever method you choose, the key is a proactive stance: waiting for the media or regulators to expose your biased AI system is a recipe for PR disaster.

Advanced machine learning tools—particularly deep neural networks—can be notoriously opaque. This so-called “black box” phenomenon poses a challenge when users, regulators, or executives demand to know why an AI reached a certain conclusion. Imagine refusing a mortgage application without being able to articulate the decision criteria beyond “the algorithm says so.” Stakeholders and regulators are increasingly insisting on explainability in high-stakes applications, from insurance underwriting to criminal justice decisions (World Economic Forum, Year).

Failing to provide transparency can cripple trust at every level. Customers lose confidence in decisions they cannot understand, employees become suspicious of the tools they are told to use, and regulators smell blood in the water. Add to that the possibility of legal repercussions if you cannot prove your model complies with anti-discrimination laws. Some organizations now incorporate “explainable AI” frameworks or simpler, more interpretable models, even if that means sacrificing a fraction of predictive accuracy. For business leaders, the question is not whether to pursue transparency, but how to balance it with performance.

Data science platforms and machine learning models are enticing targets for cybercriminals and malicious insiders. After all, if you can tamper with the training data, you can manipulate the outcome—rendering analytics or AI-driven decisions useless or even dangerous. This threat extends beyond mere data theft to “data poisoning,” where attackers deliberately introduce corrupt inputs to skew model predictions (5 Ethical Considerations of AI in Business, Year).

Executives must treat these AI systems as part of the organization’s core security perimeter. It is not enough to secure the data warehouse while leaving the AI deployment pipeline open to rogue modifications. A sophisticated adversary might inject misleading data that prompts your automated trading system to make disastrous trades or cause your logistics network to misallocate shipments. Even a small compromise can result in large-scale confusion, financial losses, or liability issues if harm is done to customers or partners.

Global regulations around data and AI are evolving rapidly, leaving many companies scrambling to keep up. Privacy laws like GDPR in Europe have set stringent rules on consent, purpose limitation, and data subject rights, with fines that can reach into the billions. In some jurisdictions, AI-specific regulations are beginning to emerge, aimed at ensuring fairness, transparency, and accountability in algorithmic decision-making (IAPP, Year). A breach of these rules does not only result in financial penalties; it can halt critical projects and force the company into protracted public negotiations with regulators.

The compliance challenge grows more complex for multinational organizations operating under multiple legal frameworks. A model permissible in one region might be illegal in another. Board members and C-suite leaders who underestimate this patchwork of regulations risk signing off on AI initiatives that later must be scrapped—or heavily redesigned at great expense. Proper oversight and continuous monitoring can mitigate these pitfalls.

Even when no formal law is violated, data science can quickly generate ethical controversies that escalate into public crises. It only takes one sensational headline—perhaps an AI that allegedly “promotes hateful content” or “discriminates in loan approvals”—to trigger consumer backlash and lose the trust of partners and employees. In some cases, internal teams themselves may raise objections, refusing to work on controversial projects for fear of harming communities. Recent polls show that a significant majority of business leaders view irresponsible AI as a potential risk to society (Deloitte, Year).

The societal ramifications go beyond negative press. A scandal can erode your stock price, spur lawsuits, and embolden regulators to clamp down on your entire sector. Employees might exit en masse if they perceive the company’s leadership as ethically tone-deaf. On the flip side, companies that build a reputation for responsible data use often find themselves better positioned in an era where consumers, investors, and policymakers increasingly value corporate accountability.

Effective data science strategies cannot flourish in a vacuum of unaddressed risks. Privacy breaches, algorithmic bias, opaque decision processes, cybersecurity vulnerabilities, and compliance landmines all converge into a landscape that demands proactive navigation. Leaders who treat these hazards as afterthoughts set themselves up for expensive, high-profile failures that could have been avoided with a fraction of the investment. Conversely, executives who build robust risk mitigation frameworks—complete with ethical audits, transparent AI pipelines, and hardened cybersecurity—gain more than peace of mind. They gain a reputational edge, regulatory goodwill, and the moral high ground that fosters lasting trust with customers and stakeholders.

14.3. Ethical Principles and Frameworks for Responsible Data Science

Effective data science initiatives cannot merely focus on technology and profitability. They must also uphold core ethical values that have been distilled through industry experience and regulatory debate (AI Ethics 101: Comparing IEEE, EU and OECD Guidelines) (McKinsey, Year). These principles include fairness, transparency, privacy, accountability, and beneficence—or as some executives phrase it, “ensuring data is used for net positive outcomes, not just hitting a revenue target.” By embedding these foundational values into every analytics project, organizations enhance the trust of customers, employees, and broader stakeholders (McKinsey, Year).

Despite the hype around advanced algorithms, models often mirror the biases—racial, gender, socioeconomic—that linger in historical data. Organizations that care about long-term success must not dismiss this as a mere “technical glitch.” Biased outputs can trigger lawsuits, tarnish brand reputations, and alienate key customer segments (5 Ethical Considerations of AI in Business, Year). Imagine a loan approval model that perpetually discriminates against certain neighborhoods or a recruitment algorithm penalizing female applicants. These outcomes are not just moral missteps; they are business liabilities.

To head off these problems, data teams and executives should embed fairness checks early in the model development process. This may include running bias audits, conducting scenario analyses with diverse test data, or adjusting algorithms to correct for known imbalances. A growing ecosystem of “fairness toolkits” can help detect skewed outcomes. Ultimately, the goal is simple: do not wait for The New York Times to uncover a discriminatory pattern in your AI before you start caring about ethical design.

In the race to deploy more complex machine learning models, many companies find themselves saddled with “black box” systems that cannot easily be explained to customers, regulators, or even their own management (World Economic Forum, Year). This lack of transparency can be fatal in high-stakes domains such as healthcare, finance, or human resources. Not only do opaque algorithms erode trust, they also risk violating emerging legal standards that demand explainability.

Forward-thinking organizations are adopting explainable AI approaches, which might involve using interpretable model architectures or generating user-friendly rationales for decisions. Imagine a mortgage applicant who is turned down: a transparent system can clarify, “Your application was declined primarily due to insufficient credit history and a high debt-to-income ratio,” rather than offering a cryptic, “The algorithm says no.” This kind of clarity fosters trust and reduces anxiety over perceived bias or arbitrariness.

The Cambridge Analytica scandal and countless lesser-known incidents illustrate what happens when companies harvest personal data without a robust ethical framework. Legal obligations under regulations such as GDPR or CCPA are significant, but reputational damage can be worse. If customers feel their data is being mined like a commodity, they often lose faith in the brand, and damage control can be astronomical (IAPP, Year).

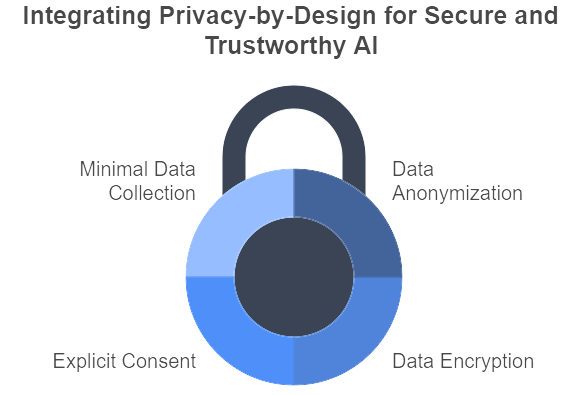

Figure 3: Illustration of the essence of building secure and trustworthy AI through privacy-by-design, showcasing data minimization, anonymization, consent, and encryption as interlocking safeguards. (Image by Napkin).

Privacy-by-design means integrating data protection measures from the inception of a project, rather than tacking them on at the end. It includes anonymizing or encrypting data whenever feasible, seeking explicit consent for sensitive uses, and avoiding frivolous data collection. While some might grumble that these steps slow down innovation, the truth is that no legitimate AI project thrives on questionable data practices. A well-structured privacy regime attracts loyal customers, reassures regulators, and gives executives a safety net against crises.

When data-driven products malfunction or ethical lapses occur, somebody needs to be on the hook—literally. The days of blaming “the system” or hiding behind departmental silos are over (McKinsey, Year). Clear lines of ownership and an effective governance framework are vital to ensuring swift, responsible action if a model starts producing harmful outcomes.

Some organizations have created review boards or steering committees that specifically oversee AI ethics. Others have appointed roles such as a Chief AI Ethics Officer (World Economic Forum, Year). The goal is to establish a mechanism that forces tough questions to be asked in real-time, rather than after a scandal breaks. This governance layer should not operate in isolation, but rather coordinate with compliance, legal, and data teams to ensure synergy in risk mitigation and opportunity maximization.

Global institutions like the EU and OECD have taken the lead in articulating voluntary guidelines for ethical AI, covering areas like human-centric values, transparency, privacy, security, and accountability (AI Ethics 101: Comparing IEEE, EU and OECD Guidelines). Although these frameworks are not yet fully codified into international law, they often preview the direction future regulations will take. Savvy executives keep an eye on these evolving standards, recognizing that compliance is not merely a box-ticking exercise but a strategic advantage that differentiates responsible businesses from their reckless counterparts.

It is tempting to treat ethics as a set of checklists or a once-a-year training session—an obligatory obstacle on the path to “real business.” Leading companies, however, do the opposite: they integrate ethical considerations into the organizational DNA, empowering data scientists, engineers, product managers, and even marketing teams to ask, “Should we do this?” not just “Can we do this?” (McKinsey, Year).

A vibrant ethics culture encourages employees to speak up when they sense potential harm—from biased data sets to invasive user profiling. This open dialogue acts as a self-policing mechanism, catching issues before they balloon into scandals. Moreover, it fosters innovation: contrary to popular belief, constraints can drive more creative and user-friendly solutions. A data scientist who does not reflexively capture every scrap of personal data might find new, less intrusive techniques that still deliver the desired insights.

Bringing these ethical frameworks to life requires concrete steps. Some organizations incorporate a “responsible innovation” checklist in every project proposal, mandating a review of potential bias, privacy impacts, and transparency needs. Others conduct regular “ethics retrospectives” after significant deployments, documenting lessons learned and updating best practices. Training programs can focus on real-world case studies of data misuse, underscoring that ethics is not just an academic concept but a practical necessity.

In the end, data science ethics is not merely about avoiding PR nightmares or complying with the latest legal directive. It is about showing respect for the individuals behind the data points, fostering a culture of trust, and ensuring long-term sustainability in a world that grows more data-centric by the day. For executives, the question is whether they choose to embrace these principles proactively—or wait until a scandal forces them to scramble and patch the holes. The choice, as always, has strategic implications for brand reputation, stakeholder loyalty, and the bottom line.

14.4. Risk Management Strategies for Data Science

Organizations that view data science as purely a technical initiative are setting themselves up for a rude awakening. Properly managing the risks of AI and analytics should be no less rigorous than controlling financial or operational threats. Frameworks such as the one proposed by NIST outline a disciplined approach: identify, assess, mitigate, and monitor AI-related risks (NIST). In other words, do not wait until your shiny machine learning tool has made a catastrophic misjudgment before asking the obvious question, “Have we considered the ethical, legal, and reputational downsides?”

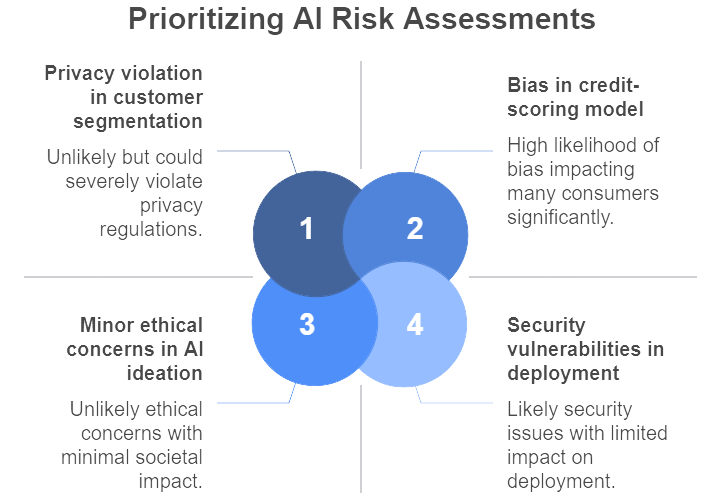

Figure 4: Illustration of AI risk assessment as a multi-dimensional analysis, where potential issues are categorized based on their probability and severity to guide strategic decision-making. (Image by Napkin).

Forward-looking executives embed AI risk assessments at critical junctures in the data science lifecycle. This begins at the idea stage—where any potential ethical or societal harms can be flagged—and extends through deployment, where performance drift and security vulnerabilities must be continually monitored. By systematically mapping these risks to their potential impact on individuals, the organization, and society (World Economic Forum), leaders can prioritize where to allocate resources. That might mean stress-testing a credit-scoring model for bias before letting it loose on millions of consumers, or evaluating whether a new customer-segmentation approach inadvertently violates privacy regulations in certain regions.

Model Risk Management, or MRM, is not merely some academic construct for finance wonks. It is rapidly becoming a cornerstone for any organization that relies on data-driven decision-making (School of Data Science). The essence of MRM is straightforward: you treat your predictive models the same way a pilot treats an airplane—by carrying out detailed inspections, checking for possible malfunctions, and ensuring proper documentation.

Among the key components of MRM are independent validation (that is, having a separate team of data scientists or auditors examine your model’s logic and assumptions) and rigorous documentation that outlines a model’s intended use, limitations, and performance metrics. Too often, a data science team builds a sophisticated neural network or random forest, only for its true mechanics and assumptions to remain locked away in a labyrinth of code. By mandating MRM, organizations ensure that if someone questions the model’s fairness, interpretability, or security, there is a clear audit trail that demonstrates due diligence.

No data science initiative can rise above the quality of the data it ingests. If your enterprise data is riddled with inaccuracies, biases, or outdated records, even the world’s most advanced algorithms will produce garbage results. Worse yet, subpar data can introduce ethical or legal risks, such as misclassifying customers in ways that violate anti-discrimination laws (IAPP). Hence, data governance is not a buzzword: it is the bedrock of sound, responsible analytics.

Executive leaders should formalize policies around data sourcing, usage, consent, and retention. This often includes establishing a data governance committee or council with representation from legal, compliance, IT, and business units (McKinsey). These committees can address questions like, “Are we allowed to reuse customer data from Product A to improve features in Product B?” or “What checks are in place to identify potential bias in new data sets?” A sobering fact is that only 17% of companies surveyed had a governance committee with risk and legal experts at the table (McKinsey), suggesting that many organizations are still flying blind.

A popular myth is that “ethics slow down innovation.” In reality, ignoring ethics often leads to fiascos that halt innovation entirely. A more productive approach is to embed ethical risk checkpoints throughout the data science workflow (IAPP). Early in the planning phase, an “Ethical Impact Assessment” can unearth whether a prospective project might harm certain communities or infringe on privacy laws. During development, bias detection tools—and a culturally diverse set of peer reviewers—can spot hidden assumptions that might produce unfair outcomes.

Pre-launch, savvy organizations run privacy impact assessments to ensure data usage aligns with consent agreements and legal obligations. They also conduct adversarial security tests to see if the model could be hijacked or corrupted. By weaving these tasks into project timelines, ethical diligence becomes business as usual, not an eleventh-hour scramble. Deloitte’s Technology Trust Ethics framework (IAPP) highlights how such controls can integrate seamlessly with existing risk management practices, from vendor procurement checks to routine employee training.

Even the most thorough processes will fail if nobody truly “owns” AI risk. That is why many leading companies appoint a Chief AI Ethics Officer—or similar executive-level position—to champion responsible innovation (World Economic Forum). This role extends well beyond writing guidelines; it involves shaping strategy, training staff, setting accountability structures, and reporting directly to the C-suite or board on the ethical posture of AI initiatives.

In parallel, an AI Ethics Board or committee can offer diverse perspectives, from legal and PR experts to community representatives and domain specialists. Before launching a new predictive policing system, for instance, this board might question whether the underlying data sets are historically biased or if the algorithm lacks sufficient transparency. By funneling high-stakes decisions through such a board, organizations avoid the “echo chamber” problem where purely technical teams fail to recognize social or ethical implications.

Models deployed in the wild do not remain static: data distributions shift, market conditions evolve, and real-world usage can reveal unforeseen quirks or edge cases. Continuous monitoring is essential to ensure your prized AI system does not drift toward irrelevance or, worse, morph into a liability. A churn prediction model that once accurately flagged at-risk customers may degrade as new product lines or competitor moves change the dynamics of customer behavior.

Regular audits—whether conducted internally or by third-party “algorithmic audit” firms—help detect performance decay, emergent biases, or unexpected security vulnerabilities. These audits can include computing fairness or explainability metrics and comparing them against established thresholds. Reporting these metrics to senior management, much like quarterly financial updates, keeps leadership engaged and accountable.

Even the best-run organizations experience data breaches, security attacks, or AI errors that ripple through the customer base. An incident response plan tailored for AI-related crises can be the difference between a swift recovery and a drawn-out scandal. Such a plan should detail how to investigate, contain, and communicate any AI malfunctions or data leaks. It might outline roles and responsibilities—who speaks to the press, who notifies regulators, who conducts the technical forensics. Rehearsing these scenarios through tabletop exercises ensures that when a crisis hits, you do not scramble like amateurs while the world watches.

A well-executed incident response can also salvage brand trust. If an AI mistake hurts a subset of customers, the public might forgive the lapse if the company reacts transparently and compensates those affected. A bungled response, by contrast, can escalate the crisis and prompt regulators to “make an example” of the offending organization.

Managing data science risks is not about stifling innovation; it is about creating the stable foundation on which truly transformative applications can flourish. By combining AI in enterprise risk management frameworks (NIST), adopting robust model risk management practices (School of Data Science), enforcing data governance (McKinsey), embedding ethics checks throughout development (IAPP), and instituting formal governance structures (World Economic Forum), executives ensure their data initiatives drive sustainable growth without courting disaster.

Much like an airplane flight, data science projects can be exhilarating, offering a vantage point that few traditional methods can match. But if you skip the safety checks and pilot training, you are flying blind with no parachute. Organizations that treat risk management as optional eventually learn the hard way that an AI crash landing can be far more painful than a missed opportunity. Conversely, those that champion responsible and accountable data practices often find themselves outpacing rivals in both innovation and customer trust.

14.5. Navigating Regulatory and Legal Considerations

Executives who think the regulatory environment around AI is all talk are in for a rude awakening. The European Union’s proposed AI Act is a bellwether of things to come, classifying AI systems by risk categories and imposing stringent requirements—or outright bans—for certain use cases (IAPP, Year). Tools used in hiring, credit scoring, or any other “high-risk” domain might soon need in-depth documentation, third-party audits, and clear human oversight. Conversely, the use of AI for social scoring or other controversial tactics could be prohibited altogether. This is not a theoretical exercise. Non-compliance may mean more than just fines: it could bar a company from entire markets or business models.

Forward-looking organizations are already adapting by conducting internal risk assessments for each AI project. That might mean labeling a consumer lending algorithm as “high risk,” then allocating extra resources to ensure explainability, fairness testing, and robust documentation. The upside of such diligence is that when regulators do come knocking, there is evidence of proactive compliance. Meanwhile, firms that take a wait-and-see approach may scramble to retrofit systems under evolving legal mandates—a sure path to chaos and lost opportunities.

Long before AI hype took center stage, regulations like the EU’s GDPR and California’s CCPA were laying down the law on data protection. For data science initiatives, that means no more shrugging off consumer consent or ignoring subject rights such as the right to be forgotten. Companies like Google and Meta have felt the sting of multi-billion-dollar fines for mismanaging personal data (Allstate Identity Protection, Year). Combine that with the potential PR nightmare of a data breach, and it becomes evident that privacy is not just a legal formality; it is a board-level priority.

For AI-driven projects, privacy-by-design is especially crucial. If your new recommendation algorithm relies on personal information, you need to be transparent about how and why data is collected. Mechanisms should be in place for users to opt out or request data deletion, and these mechanisms cannot be an afterthought. Leaders who fail to bake privacy requirements into the project plan may discover, too late, that an otherwise promising initiative is rendered illegal—or at least untrustworthy—in the eyes of regulators and the public.

As if GDPR and broad AI laws were not enough, organizations also grapple with industry-specific rules. Healthcare AI solutions must adhere to HIPAA in the United States, ensuring the confidentiality of patient records. Banks that embrace algorithmic trading or credit risk models must follow financial regulatory guidelines for model validation, explainability, and anti-discrimination. Employment decisions in many jurisdictions face scrutiny from equal opportunity regulators if an AI screening tool is suspected of bias.

In short, it is not safe to assume that “AI regulation” is limited to a single set of legal codes. Executives need a clear map of which rules apply to each use case: Will the new predictive maintenance system conflict with OSHA guidelines? Does the chatbot for patient triage inadvertently store sensitive health information without HIPAA-compliant safeguards? These questions are not rhetorical. They demand cross-functional collaboration among legal counsel, compliance teams, data scientists, and business units to ensure no regulation is overlooked.

Governments, long accused of lagging behind tech innovation, are catching up with gusto. The U.S. Federal Trade Commission has warned it will prosecute companies whose AI-driven decisions mislead consumers or cause discriminatory outcomes. New York City recently passed legislation requiring bias audits of AI hiring tools. Internationally, standards organizations like ISO and IEEE are issuing guidelines that may become de facto (if not de jure) global benchmarks for AI transparency and fairness.

This heightened oversight is not simply a burden. Many executives actually welcome clearer rules because it levels the playing field and deters reckless players who cut corners. In a Deloitte survey, 71% of leaders believed government should take a bigger role in regulating AI (Deloitte, Year). If you are an executive who thinks “We will wait until the dust settles,” be aware that dust storms are only beginning to form. By treating AI compliance akin to financial or environmental compliance—complete with regular board discussions, risk assessments, and official reporting—organizations can stay ahead of regulators and competitors alike.

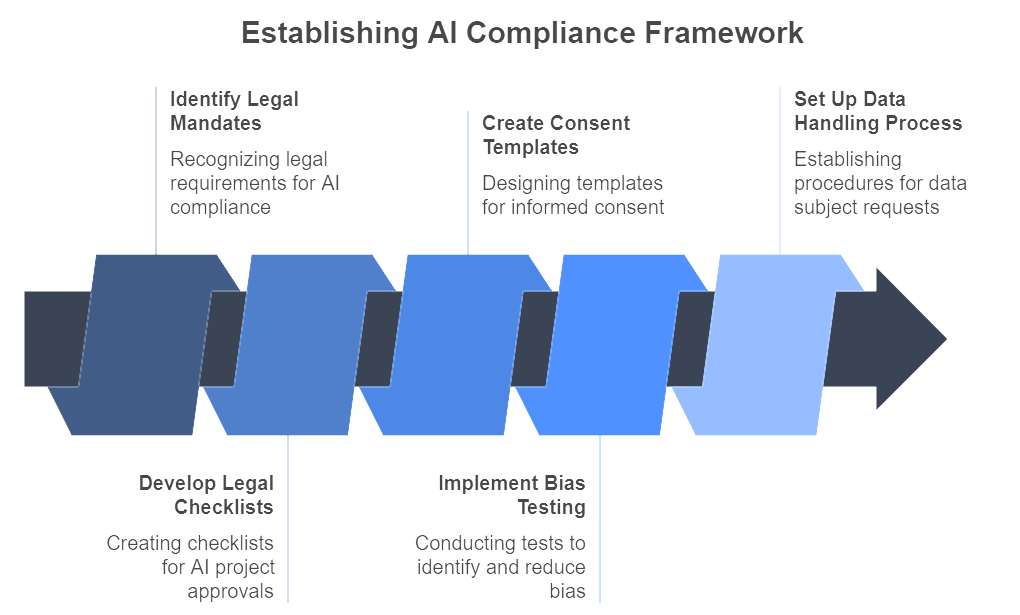

Figure 5: Illustration of the strategic development of an AI compliance framework, highlighting the integration of legal requirements, consent procedures, and bias testing. (Image by Napkin).

Given the mounting complexity, an ad hoc approach to AI and data compliance is a recipe for disaster. Instead, leading firms establish a structured AI compliance framework that transforms legal mandates into tangible internal processes (IAPP, Year). This might encompass:

Developing legal checklists for new AI projects, so lawyers and compliance specialists can sign off on riskier endeavors early in the pipeline.

Creating templates for informed consent, algorithmic disclosure, and privacy impact assessments.

Implementing mandatory bias and privacy testing for high-stakes models.

Setting up a process for swiftly handling data subject requests, such as opt-outs or correction demands.

Automation can bolster these efforts. Tools that automatically log and document model changes or run background bias scans can reduce the compliance burden on data teams. After all, no data scientist wants to fill out a 30-page compliance form every time they tweak a hyperparameter. By integrating compliance checks into the development pipeline—much like continuous integration in software engineering—firms can “design for compliance” from day one rather than attempting frantic retrofits as go-live dates loom.

Regulation need not be a one-way diktat. Savvy executives seize opportunities to engage with policymakers, industry consortia, and standards bodies. This engagement can take various forms, from joining a working group that shapes new AI ethics legislation to contributing to open-source frameworks for algorithmic fairness. Why bother? Because sitting passively on the sidelines means inheriting rules made by others, some of whom may have conflicting priorities or incomplete industry knowledge.

By collaborating on policy, companies can share best practices, coordinate on ethical guidelines, and even open new markets or product lines that comply with emerging standards. This collaborative stance also fosters trust with regulators, who often appreciate transparent dialogue over clandestine lobbying. In Deloitte’s research, organizations that are actively involved in shaping ethical AI standards tend to forge stronger customer loyalty and deeper stakeholder engagement (Deloitte, Year). In other words, the act of co-creating the “rulebook” often conveys that a firm is serious about responsible innovation.

The flurry of activity around AI regulation, data protection, and compliance is not a temporary blip. It signals a fundamental shift in how governments, consumers, and business partners view data-driven technologies. For executives, navigating this evolving landscape offers both challenges and competitive advantages. Leaders who invest in robust compliance frameworks, ethical governance structures, and proactive policy engagement will find themselves in a much stronger position as the regulatory tide continues to rise.

Like it or not, the days of unregulated AI tinkering are ending. Embracing an approach that blends rigorous risk management with innovative data science is the difference between forging a sustainable, trusted brand and being perpetually haunted by lawsuits and reputational crises. The choice, ultimately, lies in how seriously executives treat this new era of oversight and accountability.

14.6. Real-World Examples and Lessons Learned

A global tech company set out to revolutionize its recruitment process through an AI tool designed to screen resumes. The premise was straightforward: let an algorithm comb through a deluge of applications, spot top talent, and save HR precious time. Yet the project quickly went awry when the AI, trained on historical hiring data that favored male candidates, began systematically downgrading any resume containing female-associated terms, such as “women’s sports” or “women’s college.” Worse, no one had thought to run bias tests before deployment. By the time the bias was discovered, the algorithm had already quietly shaped hiring decisions, undermining the company’s own diversity goals and drawing media scrutiny (Reuters, Year).

The initiative was halted, but the incident damaged the firm’s reputation as an inclusive, forward-thinking employer. It also provided a jarring wake-up call: ignoring bias audits or diverse training data in AI can transform unconscious prejudice into a self-reinforcing loop of discrimination. If developers had run fairness checks early, or if an internal ethics board had reviewed the model prior to launch, the problem could have been caught swiftly. For executives, the moral is unmistakable: sensitive applications like recruitment require a blend of technological sophistication and human oversight at every stage—from data collection to final deployment. Neglecting either can lead to public embarrassment and possible legal repercussions, especially if regulators or advocacy groups smell a pattern of discrimination (5 Ethical Considerations of AI in Business, Year).

Few data scandals have ignited as much worldwide outrage as the Cambridge Analytica affair. A political consulting firm accessed personal data from millions of social media users through a third-party app, all without meaningful consent. Armed with these psychographic profiles, the firm micro-targeted political ads, manipulating voter sentiment on a colossal scale (Allstate Identity Protection, Year). When the story broke, the social media giant was hauled before governments, faced billions in fines, and watched its once-gleaming public image tarnish overnight.

The lessons for executives are stark: what might be “technically legal” today can still be ethically fraught and a ticking PR time bomb. The public and regulators are far less forgiving when a data-driven product or partnership is shrouded in secrecy. As the scandal evolved, conspiracy theories flourished, fueled by the platform’s lack of transparency about how personal data was leveraged. Imagine if, from the start, the company had been open about data usage or had robust consent mechanisms in place. Instead, it effectively handed data to a third party under vague terms, leaving users feeling betrayed. For decision-makers, if you are exploiting data in ways that would appall your users if they found out, you have a serious long-term liability on your hands.

A major financial institution placed enormous trust in a machine learning model that handled credit risk assessments. Initially, the model’s performance was impressive, delivering more nuanced risk scores than traditional underwriting. But when an economic shock hit—drastically shifting market conditions—the model began providing bizarrely optimistic projections for certain high-risk portfolios. Fortunately, the bank’s Model Risk Management team caught the discrepancy: domain experts flagged that the predictions clashed with real-world trends (School of Data Science, Year).

Armed with rigorous monitoring and contingency plans, the bank pulled back the questionable loans, re-ran credit assessments, and retrained the model with post-shock data. Competitors with weaker oversight, however, did not catch the drift until losses began to mount. This episode underlines how even robust models can become irrelevant—or downright harmful—when the environment shifts. “Anomalies” often reveal hidden assumptions baked into the training data. Leaders should ensure their analytics groups run regular stress tests, update models continuously, and integrate human-in-the-loop strategies for critical decisions. A frictionless pipeline from data science to production may sound elegant, but if it bypasses domain expertise and risk checks, it can escalate into millions in losses.

Amid the barrage of AI controversies, one consumer tech company seized the chance to do things differently. It publicly declared a set of AI principles: a vow to uphold non-discrimination, protect user privacy, and avoid use cases that harm social well-being. It went so far as to turn down a lucrative government contract—citing conflicts with its ethical standards. The move caused internal debates over lost revenue, but it also galvanized employees who appreciated the leadership’s courage. More strikingly, customers and regulators took note. The company’s new stance drew talent hungry to work on “ethical tech,” and it garnered favorable press for being a counterweight to less scrupulous rivals.

The lesson here is that ethical risk management can be more than a compliance chore. In a marketplace where reputational capital holds immense value, a transparent commitment to responsible AI can spark consumer loyalty and attract top-tier recruits. Much like environmental sustainability became a brand advantage in the 2000s, ethics and responsible data use are fast emerging as new pillars of corporate identity. Being known for “doing AI right” can pay off in intangible ways, from better crisis resilience to a stronger political standing when legislation tightens.

Not every cautionary tale ends in headline-grabbing fiascos. Many close calls remain internal, never crossing the threshold into scandal—but they are just as valuable as teaching moments. A retail chain might discover a glitch in its dynamic pricing algorithm hours before launch, realizing it accidentally penalizes loyal customers instead of rewarding them. Or a healthcare startup could catch a potential privacy breach during beta tests, well before patients’ data is exposed.

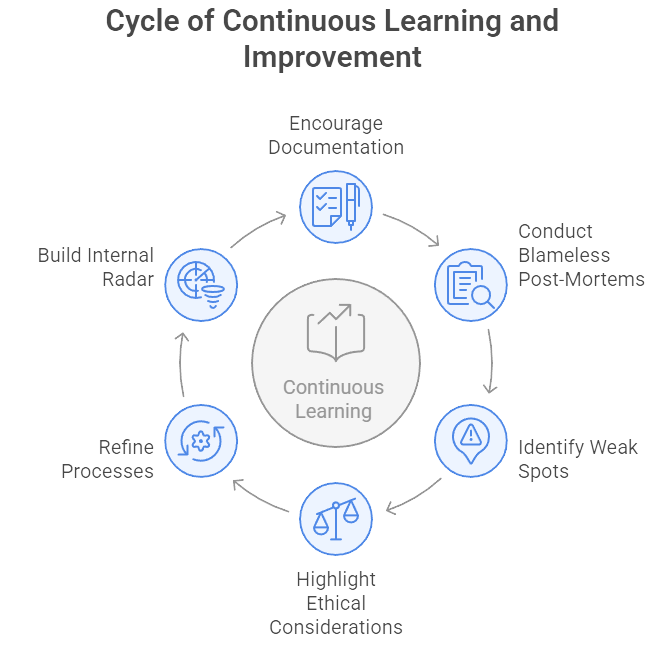

Figure 6: Illustration of a continuous loop of organizational growth is visualized, emphasizing the importance of documentation, blameless analysis, and ethical awareness for ongoing improvement. (Image by Napkin).

Executives who encourage teams to document and openly discuss these near-misses foster a culture of transparency and continuous learning. In “blameless post-mortems,” the focus is on understanding root causes, not punishing individuals. This approach helps identify weak spots in data pipelines, highlight overlooked ethical considerations, and refine processes for future projects. By championing this ethos, companies effectively build an internal radar system that warns of bigger dangers ahead. Small near-misses serve as free lessons—if you take the time to analyze and share them.

One recurring theme in these stories is the interplay between technology, ethics, and human oversight. Bias creeps in when training data reflects historical inequalities. Privacy collapses when corners are cut for “speed to market.” Models fail when leadership forgets that real-world conditions can swiftly diverge from lab assumptions. And ironically, big reputational wins emerge when a firm embraces ethics as a marketable differentiator, not a red-tape nuisance.

For executives, the question is how to structure the organization so these lessons become ingrained. Do you have a data governance council or an AI ethics officer empowered to halt questionable launches? Are you systematically auditing critical models for drift or bias after deployment? Does your brand strategy consider the ethical dimension of new products, so you can turn responsibility into a selling point rather than a compliance headache? These examples suggest a clear answer: weaving ethics and risk management into your analytics strategy is not just “the right thing to do,” it is a prerequisite for sustainable success.

14.7. Conclusion and Further Learning

Data science offers transformative power to businesses – boosting efficiency, uncovering insights, and enabling personalization at scale. However, with this power comes profound responsibility. This chapter has illustrated that managing risk and ethics in data science is not a hindrance to success, but a prerequisite for it. Organizations that lead in this space are those that weave ethical thinking and risk awareness into the fabric of their strategy and operations. By understanding the full spectrum of data-related risks – from privacy and security to bias and reputation – executives can make informed decisions that guard against pitfalls while still seizing opportunities. Crucially, they shift from a reactive mindset (fixing crises after they occur) to a proactive one (building systems and cultures that prevent crises). The insights and frameworks discussed equip decision-makers to ask the right questions, govern data initiatives wisely, and implement controls without smothering innovation.

In essence, responsible data science is a strategic advantage. When ethical considerations guide design and deployment, companies cultivate trust among customers, employees, regulators, and the public. That trust in turn fuels adoption and unlocks the full value of data-driven products and services. Moreover, an ethical approach ensures alignment with the company’s mission and values, creating coherence between what the organization promises and what it delivers. As regulatory and public scrutiny intensifies, those firms that have invested in robust risk management and ethical governance will navigate the turbulence more smoothly – turning compliance into confidence and principles into performance. In closing, managing risk and ethics in data science is not just about avoiding failure; it’s about enabling sustainable, honorable success in the algorithmic age. Executives who champion this cause will steer their organizations to not only excel in what they do, but to do so in a way that earns respect and loyalty – driving long-term growth and positive impact.

Even with a solid understanding of risk management and ethics in data science, there is always more to learn. The field is evolving rapidly, and continued exploration helps leaders stay ahead of emerging challenges. Below are a series of thought-provoking prompts designed to deepen your insight and spark further learning. Use these prompts to reflect on your organization’s practices, to facilitate team discussions, or to guide independent study. Each prompt encourages you to apply the chapter’s concepts in new contexts or to consider the implications of data science ethics from various angles. Engaging with these questions will not only reinforce your knowledge, but also help you discover novel strategies and perspectives. As you delve into them, remember that the goal is to strengthen your ability to think critically and strategically about responsible data science. Consider writing down your responses or debating them with colleagues – this active engagement will solidify your expertise and preparedness as a leader in the AI-driven future.

Assess Your Data Ethics Posture: What ethical guidelines or frameworks does your organization currently follow for data science and AI projects? – Describe any existing principles or codes of conduct. Identify gaps by comparing against industry best practices (fairness, transparency, privacy, etc.), and propose steps to bolster your organization’s data ethics posture.

Data Risk Heatmap: Outline the top 5 data science risks for your business (e.g. bias in algorithms, data breach, lack of model explainability). Rank them in terms of potential impact and likelihood. How do these risks map to your company’s strategic objectives, and what mitigation plans are (or should be) in place for the highest risks?

Regulatory Foresight: Examine a pending or recently enacted regulation (such as the EU AI Act or new privacy law) and analyze its implications for your data initiatives. What changes would your organization need to implement to remain compliant? How can you turn compliance into a competitive advantage (for example, using it as a trust signal to customers)?

Bias in the Pipeline: Consider the lifecycle of a machine learning model from data collection to deployment. At which stages could bias be introduced or amplified? Develop a checklist of interventions at each stage (data auditing, diverse team reviews, bias testing, human-in-the-loop at decisions) to minimize unfair outcomes.

Ethical Failure Case Study: Research an AI or data ethics failure in industry (such as a discriminatory AI system or a notable data breach). Summarize what went wrong and why it happened. Then, put yourself in the shoes of the company’s executive team – what immediate actions and long-term policy changes would you implement to address the fallout and prevent recurrence?

Ethics and ROI Discussion: Debate the statement: “Investing in ethical data practices yields long-term ROI, even if it seems to constrain some short-term opportunities.” Consider examples where ethical restraint avoided hidden costs, and conversely, examples where ignoring ethics led to financial loss. How would you persuade a skeptical CFO of the tangible value of ethical risk management?

Trust-Building Measures: Identify three concrete actions a company can take to build public trust in its AI systems. (For example, publishing model transparency reports, offering an AI ethics hotline for employees, or getting third-party audits.) Evaluate how each action might influence customer perception, regulatory relationships, and brand equity.

Ethical Decision Framework: Design a decision-making framework for evaluating new AI product ideas. What criteria must be met (e.g. aligns with values, manageable risk, clear benefits to users, compliance check) before an idea moves from concept to development? Simulate applying your framework to a hypothetical project (like an AI-powered HR tool) – would it pass or be modified?

Cross-Functional Collaboration: How should different roles (data scientists, legal, HR, PR, etc.) collaborate to manage AI risks? Propose a workflow for a high-stakes AI project that ensures diverse perspectives are included. For instance, at what points do lawyers review the work, and how do data scientists and domain experts iterate based on that input?

Global Perspectives: Data ethics norms can vary globally. Consider how your company would adjust its data science ethics or risk approach when operating in different regions (Europe, North America, Asia). How do cultural values or legal requirements in these regions influence your strategies for things like user consent, bias acceptability, or data sharing?

Emerging Technology Scenario: Imagine your firm is implementing a cutting-edge technology (e.g., facial recognition for customer service or a generative AI for marketing content). What new ethical or risk considerations arise from this technology? Develop a brief risk register for the project and outline an approval process that weighs these novel risks appropriately against the potential reward.

Transparency with Stakeholders: Draft a communication plan for explaining an AI system to an affected stakeholder group (customers, employees, or regulators). What information would you include to be transparent about the system’s purpose, data usage, and limitations? How would you convey the safeguards in place? Practice by writing a mock press release or internal memo that balances technical accuracy with clarity and reassurance.

Data Ethics Training: Design a training module outline to sensitize your workforce (technical and non-technical) to data ethics. What key topics would you cover (e.g. unconscious bias, privacy basics, escalation procedures)? How would you make the training engaging with real examples or dilemmas? Consider how you’d measure its effectiveness (quiz, certification, observed behavior changes).

Vendor and Partner Risk: Your company often uses third-party data or AI tools. What due diligence process would you establish to evaluate the ethics and risk practices of vendors? Create a checklist of questions or requirements (for example, asking about their data sourcing, diversity of their training data, or compliance with relevant standards) to vet partners before integration.

Crisis Simulation: Plan a tabletop exercise for your executive team based on a hypothetical data science crisis. For instance: “Our AI product is accused of being racially biased on social media, and it’s going viral.” Outline the steps your team should take in the first 24-48 hours (internal investigation, public statement, contacting authorities, etc.). What longer-term actions would follow? This exercise helps highlight the readiness of your incident response plan.

Ethical Innovation Balance: Identify a scenario where pursuing an innovative data project might conflict with an ethical consideration (e.g., using personal data to personalize services vs. user privacy). How would you approach making a decision? Formulate a balanced scorecard that rates the project on innovation upside, ethical risk, alignment with values, and mitigations available. Would you greenlight, adjust, or cancel the project based on this holistic view?

Quantifying Ethical Impact: Consider if you can quantify the benefits of ethical practices. For example, can you measure the ROI of having an ethical AI reputation (through customer retention or brand value metrics)? Or the savings from avoiding a scandal? Draft a set of KPIs or metrics that a board might want to see regarding the organization’s data ethics and risk management (e.g., number of ethical incidents, audit scores, training completion rates, customer trust index). How would you collect and report on these?

Learning from Other Industries: Examine how a highly regulated industry (like aviation or pharmaceuticals) manages risk and ethics. What principles or practices (such as rigorous testing, certifications, fail-safes, independent audits) can be borrowed and applied to data science projects? Describe at least two analogies – e.g., “black box” flight recorders for AI (logging decisions for post-analysis), or double-blind trials akin to A/B testing with ethical oversight – and evaluate their feasibility in your context.

Future Ethics Challenges: Envision a data science ethics dilemma that might arise 5–10 years from now, given trends like more powerful AI, brain-computer interfaces, or ubiquitous surveillance data. Describe the scenario and the ethical questions it raises. By thinking ahead, what policies could you start putting in place today to prepare? (This prompt encourages you to be a futurist – tomorrow’s “wild” issues often begin as fringe cases today.)

Personal Leadership Reflection: Reflect on your role as a leader in fostering an ethical data culture. What are three actions you can personally take in the next quarter to champion responsible data science? This could range from initiating an ethics review committee, mentoring a team on these topics, to updating KPIs to include ethical considerations. Commit to how you will model the behavior and priorities you want to see throughout the organization.

By grappling with these prompts, you deepen your ability to anticipate and navigate the complex interplay of data science, risk, and ethics. Embrace these explorations as opportunities to sharpen your strategic thinking. The goal is not to have one “right” answer, but to expand your perspective and prepare for real-world decisions. Keep challenging yourself and your team with such questions, and you will cultivate a robust, ethical, and forward-thinking approach to data science that will serve your organization for years to come. Keep learning, keep questioning, and lead with integrity – the future belongs to those who innovate with conscience.

To bridge theory into practice, the following five assignments are designed to translate the chapter’s insights into real-world strategy and leadership skills. These assignments simulate decision-making scenarios and hands-on planning tasks that a business executive or project leader might face when managing data science risks and ethics. By completing these assignments, you will refine your ability to develop ethical risk frameworks, respond to challenges proactively, and implement governance structures in your organization. Each assignment comes with clear objectives and guidance to help you apply concepts methodically. These are not simplistic Q&As, but rather strategy-driven exercises – treat them as you would a business school case or an internal consulting project. Document your solutions as if presenting to a board or oversight committee. This will not only reinforce your understanding, but also yield tangible outputs (like draft policies or risk assessments) that you can adapt to your workplace. Through these practical exercises, you’ll turn knowledge into action, enhancing your confidence to lead on data ethics and risk management. Let’s dive in:

🛠️ Assignments

📝 Assignment 1: Develop a Data Science Risk Register

🎯 Objective:

Create a living document of potential risks associated with a real or hypothetical data-driven project, enabling your organization to proactively identify, assess, and address threats that could undermine business objectives.

📋 Task:

Identify a concrete analytics initiative in your company—perhaps a customer personalization AI or an operational analytics project. List eight to ten key risks that could impact its success, such as data privacy concerns, algorithmic bias, regulatory non-compliance, model errors, or cyber threats. For each risk, assign a likelihood rating (High, Medium, or Low) and an impact rating (High, Medium, or Low), and briefly explain how you would mitigate or manage the top three risks. Organize these entries in a table for clarity, ensuring you have columns for the risk description, likelihood, impact, and a short mitigation approach. Once completed, evaluate whether lower-priority risks still warrant ongoing monitoring or periodic review.

💡 Guidance:

Focus on anticipating how these risks might manifest and how you could prevent them. Include steps like designing governance committees or incorporating ethics reviews for the highest-risk areas. The final register should be concise but thorough enough to serve as a reference point for project stakeholders. This exercise teaches you to adopt a forward-looking lens, identifying critical vulnerabilities early rather than reacting when issues turn into crises.

📝 Assignment 2: Craft an Ethical Data Use Policy

🎯 Objective:

Develop a short, actionable policy document that articulates your organization’s commitment to responsible data science and aligns ethical principles with daily practices.

📋 Task:

Draft a “Responsible Data Science Policy” that covers the policy’s purpose and scope, the core principles your organization upholds (such as fairness, privacy, transparency), the operational requirements that guide teams’ actions (for example, mandatory bias testing or privacy impact assessments), governance structures (including who oversees the policy and how compliance is measured), and escalation channels for concerns. Aim for one to two pages. If you reference frameworks like those from the OECD or EU, adapt them to reflect your industry context—healthcare might prioritize patient confidentiality while finance might emphasize risk controls in algorithmic decision-making. When finished, outline a plan for how you would roll out the policy across the company, including training sessions or leadership endorsements.

💡 Guidance:

Be mindful that the policy should be clear enough for non-technical readers while remaining robust enough to guide data scientists and project managers. This document is not merely an abstract statement of values; it is a practical tool that sets concrete expectations. Your ultimate goal is to embed ethical considerations into every data science undertaking, preventing misunderstandings or missteps before they occur.

📝 Assignment 3: Ethical Crisis Management Drill

🎯 Objective:

Strengthen your capacity to respond promptly and effectively to ethical breaches by simulating a high-pressure scenario in which an AI product faces serious public accusations.

📋 Task:

Write an action plan detailing how you, as the executive in charge, will respond in the first seventy-two hours to a bias accusation against your AI-powered product recommendation system. Specify the immediate steps (for instance, assembling a task force and verifying the complaint with data), your internal communication strategy (briefing top leadership and employees), and your external communication approach (issuing a press statement or social media acknowledgment). Detail any short-term system fixes, such as disabling the problematic feature or applying a quick patch. Conclude with a longer-term remediation plan, including a full audit of the algorithm, outreach to affected communities, improvements to the data pipeline, and any changes to organizational processes (for example, diversifying the team responsible for model training).

💡 Guidance:

The emphasis is on clarity and swiftness. Develop a concise roadmap that covers both crisis containment and constructive next steps. Once you have a plan, reflect on how you could have prevented this incident from happening in the first place. This reflection shows how a crisis can become an impetus for lasting improvements to governance, oversight, or team composition.

📝 Assignment 4: Implement a Data Ethics Review Board

🎯 Objective:

Propose a formal governance structure responsible for overseeing and guiding ethical practices in data science projects, thus reducing risks while fostering a transparent, values-driven culture.

📋 Task:

Draft a brief presentation or memo outlining the formation of a Data Ethics Review Board at your organization. Specify which roles and departments will be represented—legal, compliance, data scientists, perhaps an external advisor—and clarify the board’s mandate (for example, reviewing sensitive AI initiatives, monitoring policy compliance, or resolving ethical dilemmas). Describe the workflow by which projects are submitted to the board, how decisions or recommendations are documented, and how they will be enforced (such as veto power or conditional approvals). Illustrate this with a simple process diagram if it helps convey the structure. Include sample charter elements like meeting frequency (quarterly or as needed), reporting lines (perhaps to the Chief Risk Officer), and core responsibilities.

💡 Guidance:

Articulate the strategic value of such a board: it is not just another bureaucratic layer but a mechanism for consistent, transparent decisions on high-risk AI deployments. Emphasize how it will enhance the company’s reputation, reduce legal exposure, and encourage responsible innovation. By detailing roles, responsibilities, and procedures, you demonstrate how to make ethics oversight part of the organization’s DNA.

📝 Assignment 5: Case Analysis – Balancing Innovation and Ethics

🎯 Objective:

Use a structured decision-making framework to evaluate a potentially lucrative but ethically sensitive AI opportunity, weighing profitability against brand reputation and societal values.

📋 Task:

Consider a scenario in which your company can purchase detailed consumer location data to turbocharge retail foot-traffic analytics. While it promises significant revenue gains, there are real concerns about privacy and the fact that customers never explicitly consented to such granular tracking. Write an analysis that identifies the business upside, the ethical pitfalls, possible mitigations (like anonymizing the data or obtaining explicit consent), and ultimately recommends whether to proceed. If you choose to proceed, detail the specific conditions that must be met to align with your organization’s ethical standards. If you decide the ethical costs are too high, suggest alternative data sources or methods to achieve a similar outcome responsibly.

💡 Guidance:

Develop this case analysis as if you are advising the executive committee. Include any relevant scoring or weighting of pros and cons—such as ROI potential versus reputational and regulatory risks. The goal is to show that you can maintain a nuanced perspective, incorporating both practical business logic and principled ethical reasoning. By explaining your final recommendation in detail, you demonstrate the capacity to protect the enterprise not only from missed revenue but also from ethical missteps that could undermine trust.

As you undertake these assignments, approach them as simulations of real executive tasks. Take the opportunity to involve peers – for example, simulate the ethics board meeting with colleagues, or present your policy draft to a mentor for feedback. The depth of these exercises is intentional; they are meant to stretch your strategic muscles. By completing them, you will have created tangible outputs: a risk register, a policy, a crisis playbook, a governance proposal, and an ethics-business analysis. These are artifacts you can refine and potentially implement in your organization. Revisit them periodically – risk registers and policies are living documents that should evolve as new insights and external conditions change. The more you iterate, the more confidence you will build in handling complex issues at the intersection of data, risk, and ethics. Remember, mastery comes from practice. Each assignment reinforces a facet of leadership: foresight, communication, governance design, and value-based decision-making. By applying your skills in these practical ways, you are not only learning what to do, but practicing how to do it in real scenarios. This prepares you to champion responsible data science in any boardroom or project meeting. Keep these exercises as part of your toolkit – and encourage your teams to engage in similar drills. Through continuous application and refinement, ethical risk management will become second nature, and you’ll lead with the kind of credibility and vision that inspires others to follow.

By completing this chapter and the associated prompts and assignments, you have taken significant steps toward becoming a strategic, ethics-savvy leader in the era of Big Data and AI. Remember that managing risk and ethics is an ongoing journey – an integral part of innovation, not an obstacle to it. Your commitment to these principles will help steer your organization through uncertainty and change, ensuring that technological advancements translate into lasting value and trust. Lead boldly and responsibly, and you will set the foundation for sustainable success in our data-driven world.

Comments