Chapter 2

Data Science Foundations for Business Leaders

"Without data, you’re just another person with an opinion." — W. Edwards Deming

This chapter explains that data science is an interdisciplinary field combining statistics, computing, and domain knowledge to extract insights from data, and it emphasizes why this matters for executives in strategic terms. It systematically walks through key concepts such as big data and the spectrum of analytics (descriptive, predictive, prescriptive), and it outlines the data science process (from defining business problems to collecting data, modeling, and deploying solutions) in a way that connects technical steps to business outcomes. Through well-researched case studies and examples, it illustrates practical applications across marketing, finance, operations, and more. The chapter also tackles the crucial roles, team structures, and cultural factors needed to implement data science, underlining the importance of data-driven decision-making and leadership’s role in fostering a supportive, ethical data culture. Recognizing that leveraging data comes with responsibilities, it covers data ethics, privacy, and governance, advising on how to manage risks like bias and ensure compliance with regulations such as GDPR. In sum, this chapter equips business leaders with a clear understanding of data science fundamentals and best practices, enabling them to champion analytics initiatives confidently and align them with company strategy for maximum impact. It sets the stage for deeper exploration in subsequent chapters by establishing why data science is a cornerstone of modern competitive advantage and how to lay the groundwork for successful data-driven transformation.

2.1. Introduction to Data Science in Business

Data science has emerged as the beating heart of modern commerce, driving organizations to respond to market fluctuations with speed, precision, and that all-important edge over the competition. At its core, data science fuses statistical insights, computer science methods, and domain-specific expertise to tackle challenges once viewed as the exclusive domain of seasoned executives and their gut instincts (Davenport and Harris, 2007). Today’s abundance of data—from everyday consumer clicks to complex supply chain sensors—has made data science not just feasible but practically unavoidable for any company intent on survival. As IDC (2018) points out, ever-cheaper cloud computing and the exploding scale of digital interactions have turned old-school guesswork into a liability. Executives who rely on “trust me, I know” are fast discovering that the competitor down the street is crunching the numbers, listening to the data, and reaping the rewards.

From an academic perspective, data science reframes established theories of competitive advantage. The resource-based view traditionally posited that unique assets—like intellectual property or exclusive supplier relationships—could help a firm stand out. Researchers now argue that data, when refined and deployed intelligently, can be just as irreplaceable as a patented technology, especially if rivals cannot easily replicate the underlying processes that turn raw information into valuable insight. Brynjolfsson et al. (2011) note that companies embracing evidence-based decision-making tend to display higher productivity and operational efficiency. A classic example is Capital One, which famously used data-driven experimentation to identify undervalued customer segments for its credit card business (Davenport and Patil, 2012). By treating each new product offering as a scientifically grounded trial rather than a leap of faith, the firm unearthed untapped niches long overlooked by traditional market analyses.

Technology stalwarts like Netflix have taken these principles and utterly transformed entire industries. Television used to revolve around pilot episodes, boardroom hunches, and spreadsheets of Nielsen ratings. Netflix, however, combined advanced machine learning with data from millions of user interactions to greenlight shows (Business Case Studies, 2024). “House of Cards” has become the poster child for how data can detect latent demand, bypassing the need for the dreaded pilot season. A more recent example might be Netflix’s global expansion strategy, which used streaming data to localize content offerings—investing heavily in Korean dramas, for instance—long before most competitors realized the global appetite for such programming. In doing so, Netflix demonstrated how harnessing vast repositories of behavioral data can upend old business norms, from content production to distribution.

Industry perspectives echo these academic findings, revealing how data science extends well beyond entertainment. Finance firms automate fraud detection by analyzing real-time transaction flows for irregularities. Retailers preemptively stock items and adjust prices based on predictive models that consider everything from weather patterns to social media chatter. Healthcare providers run machine learning algorithms on clinical data to improve patient outcomes and tailor personalized treatment plans. This democratization of analytics power, once reserved for only the largest enterprises, has accelerated thanks to cloud-based platforms and accessible tools that allow even startups to crunch massive datasets without needing to buy entire data centers (Atlan Insights, 2023). While the bar for technical competency has risen, it’s also never been easier for new entrants to leverage advanced analytics—if they plan and execute correctly.

This brings us to the need for structure and focus. Many organizations adopt formal frameworks such as CRISP-DM to ensure analytics projects follow a disciplined cycle: clarifying business problems, exploring and cleaning data, building and testing models, and ultimately deploying solutions for real-world impact (Davenport and Harris, 2007). In the early stages, the emphasis often falls on descriptive and diagnostic analytics—essentially, “What happened?” and “Why did it happen?”—before moving on to predictive or prescriptive methods that attempt to forecast the future or recommend specific actions (RIB Software Blog, 2024). This incremental approach can be a sanity-saver, particularly when the board wants everything from predictive revenue models to AI-driven chatbots, all by next quarter. By charting a clear maturity path, executives can avoid the classic trap of trying to swallow the entire data science ocean at once.

Figure 1: Illustration of he interconnectedness of key factors for successful data-driven decision processes.

Strategic alignment is another indispensable piece. Data science must be woven into the broader corporate strategy so that insights truly shape decision-making at every level. Barton and Court (2013) argue that analytics lives or dies based on cross-functional collaboration, which is a polite way of saying data scientists rarely succeed in a vacuum. Without input from frontline staff and domain experts, the slickest machine learning model can be thwarted by incomplete or messy data. Conversely, when leadership actively champions a data mindset—and invests in the necessary people, tools, and governance—insights flow freely across departments, boosting everything from marketing campaigns to supply chain scheduling (Business Case Studies, 2024). One typical scenario involves a consumer goods firm that tries to break into a new region by correlating demographic data with purchasing patterns, then tailoring both product distribution and ad spend to match. If the CMO and the operations lead can’t agree on definitions or metrics, no fancy algorithm will salvage that rollout. If they do collaborate, however, the analytics team can prototype localized marketing campaigns, test them with small budgets, and then scale up only the ones that produce verifiable results (MIT Sloan Management Review, 2020).

Ultimately, data science serves as a critical lens through which companies can decode complexity, identify emerging trends, and iterate quickly on what works. The velocity of new data streams also accelerates the pace at which businesses must adapt, turning previous quarterly updates into daily or even hourly tasks. This heightened speed is both an opportunity and a risk: the firms that can handle the deluge and surface actionable insights stand poised to outmaneuver competitors, while those clinging to outdated methods run the risk of being blindsided by more agile players (OneData AI Insights, 2025). In the chapters ahead, we will map the tactical steps and leadership principles needed to ensure data science initiatives not only launch effectively but also become ingrained in the organization’s culture, paving the way for continuous refinement and sustained competitive gains. Readers will learn about best practices for data collection, cleaning, modeling, and governance, as well as how to navigate the human side of analytics—because even the most compelling data story can falter if stakeholders aren’t ready to listen.

2.2. Core Concepts and Terminology

Data science has emerged as a critical driver of success in modern business, fundamentally altering how organizations strategize, execute, and differentiate themselves. It weaves together statistical methods, computational techniques, and practical domain insights, ultimately producing actionable intelligence that can guide anything from pricing decisions to entire business pivots (Davenport and Patil, 2012). At its most basic level, data science answers two essential questions: “What is truly going on?” and “What should we do about it?” These are not trivial questions, especially in an economy awash with near-infinite digital signals coming from every corner of the internet, supply chains, consumer mobile apps, and beyond. As OneData AI Insights (2025) points out, organizations now face an era in which data streams multiply daily, making the ability to gather, process, and analyze them both a daunting challenge and an unparalleled opportunity.

Executives often approach data science with varying degrees of caution and enthusiasm. On one hand, it promises to illuminate hidden trends, inspire profitable innovations, and help companies stay a few steps ahead of market fluctuations. On the other hand, the surge in data volume, velocity, and variety—often referred to as the “three Vs” of big data—can be overwhelming for those used to smaller, more structured data sets. The reason data science resonates across industries, from banking to manufacturing, is its potential to convert these unwieldy datasets into strategic assets that drive top-line growth and bottom-line efficiency. Academics reinforce this sentiment by highlighting how data, when effectively managed and interpreted, can become an inimitable resource—aligning with the resource-based view of competitive advantage, which identifies rare and difficult-to-replicate assets as prime levers for long-term success (Brynjolfsson et al., 2011).

Understanding the analytical continuum of data science is an essential starting point for any executive hoping to cut through the jargon and hype. This continuum typically begins with descriptive analytics, which looks backwards to clarify what happened in a business, often through dashboards and historical reports. Diagnostic analytics then investigates why certain events occurred, zeroing in on correlations and potential causal factors that explain, for example, a spike in customer churn or a slump in regional sales (Barton and Court, 2013). Armed with this context, organizations move on to predictive analytics, using machine learning algorithms and statistical models to forecast future scenarios—predicting outcomes such as demand for a specific product or the likelihood of a customer to default on a loan. Prescriptive analytics takes this a step further by suggesting optimal actions to achieve a desired outcome. For instance, a ride-hailing service may dynamically alter its pricing based on real-time estimates of where demand will peak, effectively allowing algorithms to shape business strategy on the fly (Cote, 2021).

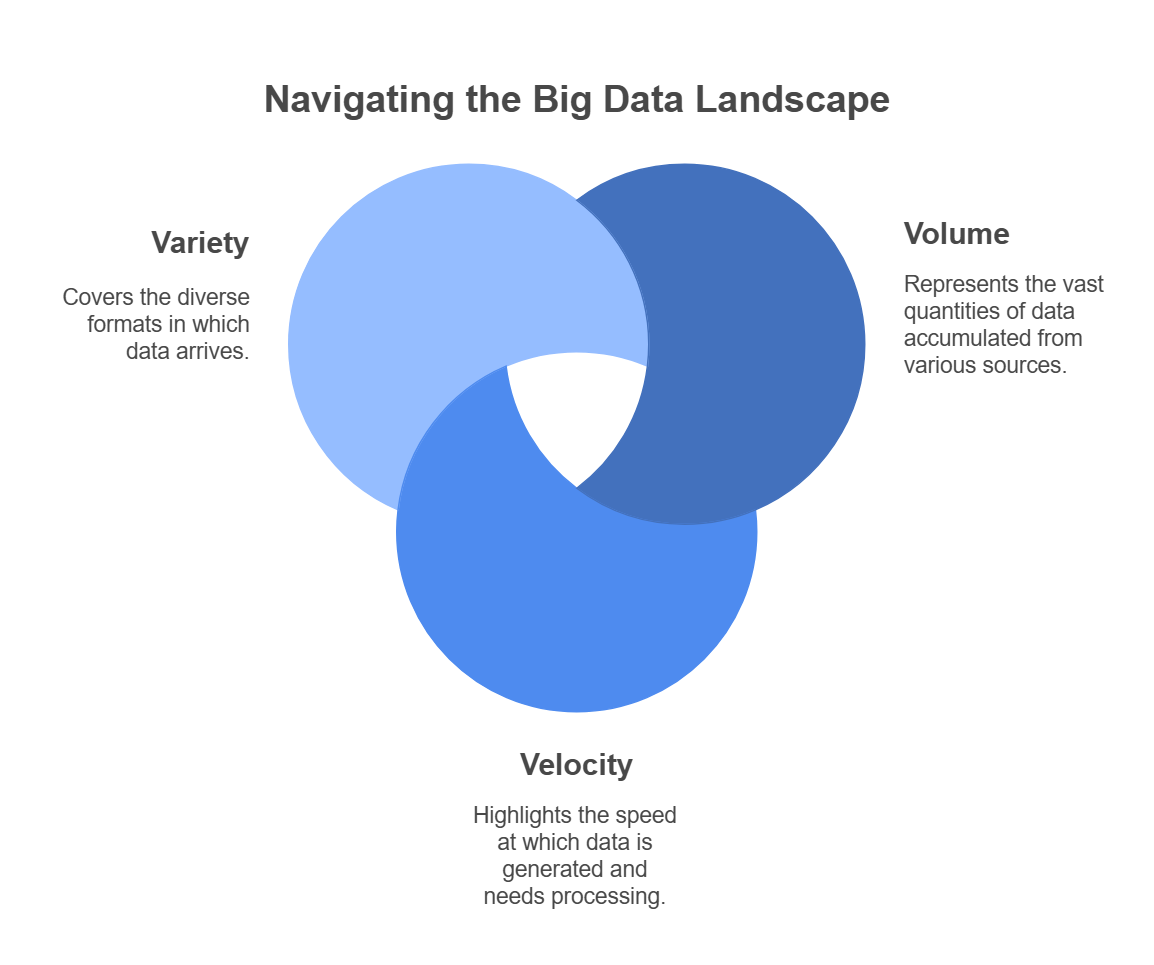

Figure 2: Illustration of the core challenges and considerations when working with Big Data. (Image by Napkin).

Underpinning these analytics approaches is the world of big data, a phrase that has at times been reduced to a cliché but still captures the scale and complexity businesses face. The three Vs—volume, velocity, and variety—remain apt descriptors. Volume speaks to the vast quantities of data accumulated from transactional systems, IoT sensors, and user interactions. Velocity underscores the speed at which this data floods in, requiring near-real-time or even continuous analytical processing for some use cases. Variety covers the shifting formats in which data arrives, from neatly tabulated rows in a database to unstructured social media posts and sensor logs that need specialized parsing techniques (Laney, 2001). While this big data environment can appear chaotic, it provides fertile ground for advanced analytics. Innovators in retail, finance, and beyond harness techniques like Hadoop-based distributed computing or in-memory data processing with Spark to make sense of massive data stores that would have sent older computing architectures into meltdown (MIT Sloan Management Review, 2020).

Moving from high-level theory to tangible results typically involves a structured methodology. CRISP-DM remains one of the most widely cited frameworks, offering a cyclical process: clarifying business objectives, understanding and preparing the data, modeling potential solutions, rigorously evaluating them, and finally deploying successful models into operational workflows (Davenport and Harris, 2007). This iterative approach helps prevent organizations from diving into the deep end of analytics without a lifesaver. For example, a logistics firm might begin by using descriptive analytics to measure average delivery times, then apply diagnostic techniques to locate congestion hotspots. Predictive algorithms can then forecast where future bottlenecks are likely to appear, setting the stage for prescriptive models that automatically reroute drivers in real time. This incremental journey ensures that each analytics step remains aligned with overarching strategic priorities, rather than becoming a random experiment that fails to impact the bottom line (Atlan Insights, 2023).

Yet all the advanced tools and frameworks in the world accomplish little if no one trusts or understands the insights. Effective data governance ensures that the information being analyzed is both accurate and ethically sourced, a concern amplified by emerging regulations and public scrutiny over privacy violations. Governance addresses who gets access to what data under what conditions, how data integrity is verified, and what standards guide the analysis. While some executives fear governance will throttle innovation, the opposite is often true: clear policies and reliable data pave the way for faster, more impactful analytics, because employees and decision-makers no longer need to question the legitimacy of every data point (Shearer, 2000).

From an industry perspective, mastering these core concepts—descriptive to prescriptive analytics, big data technologies, and a robust methodological framework—differentiates winners from also-rans. E-commerce giants apply deep learning to personalize product recommendations and optimize warehouse operations in a single unified ecosystem (Business Case Studies, 2024). Banks deploy advanced risk-scoring models that not only approve or deny loans but also adjust terms in near real-time, reflecting the latest credit data. Healthcare providers mine patient records and sensor data to improve clinical care and reduce hospital readmissions. In all cases, the hallmark of successful data science adoption is that analytics isn’t relegated to a silo; it saturates the organization’s strategy and everyday operations.

That saturation, however, requires deliberate leadership and a collaborative culture. Brynjolfsson et al. (2011) emphasize that when data science intersects with organizational design, it can unleash a multiplier effect: each successful analytics project teaches lessons that spill over into other departments, creating a feedback loop of continuous improvement. But such a loop requires executives who champion data-driven thinking, middle managers who provide domain expertise, and frontline workers who learn to rely on validated insights rather than hunches. Technical specialists cannot shoulder the entire data science burden alone; they need domain experts to contextualize findings, legal teams to navigate compliance risks, and IT professionals to ensure stable systems. This cross-functional collaboration enriches analytic models by injecting them with real-world perspectives and ensures that data products don’t gather dust after a flashy pilot phase (NewVantage Partners, 2020).

In conclusion, the world of data science hinges on a few core concepts—analytics stages (descriptive, diagnostic, predictive, and prescriptive), big data’s three Vs, and a structured roadmap such as CRISP-DM—that together offer organizations a systematic approach to converting data into actionable strategies (OneData AI Insights, 2025). Whether a company is optimizing its supply chain or personalizing its mobile app experience, grounding these activities in proven methodologies increases the odds of success. As subsequent chapters will illustrate, these core concepts serve as the bedrock for everything from machine learning deployments to real-time analytics infrastructures, forming the foundation upon which savvy businesses build enduring competitive advantages.

2.3. The Data Science Process and Lifecycle

Data has become a defining resource in today’s business environment, influencing everything from product innovation to how organizations measure their market resilience. Academics emphasize that a clear, repeatable methodology is crucial for converting raw data into actionable insights, mainly to prevent analytics initiatives from becoming scattered, one-off experiments that never quite make it to prime time (Barton and Court, 2013). Meanwhile, industry experts remind us that executives face intense pressures to keep up: customer preferences morph by the minute, supply chain complexities multiply, and market disruptors emerge seemingly overnight (OneData AI Insights, 2025). In such a high-stakes environment, data science frameworks serve as strategic playbooks, guiding leaders from an ambiguous “We have data, now what?” to the pragmatic reality of building and deploying models that solve real problems.

One of the most prominent frameworks is the Cross-Industry Standard Process for Data Mining (CRISP-DM). This structured approach includes the steps of business understanding, data understanding, data preparation, modeling, evaluation, and deployment (Shearer, 2000). While the name might not scream excitement—honestly, “Cross-Industry Standard Process” doesn’t jump off the page—it has the virtue of ensuring that analytics projects never lose sight of their overarching business objectives. Too many companies jump directly to the modeling stage, dazzled by the prospect of fancy algorithms, without articulating what problem they’re actually trying to solve. CRISP-DM insists on starting with clarity: define the question, the success metrics, and the organizational stakes right out of the gate (LaValle et al., 2011).

Business Understanding is the first critical step. It might seem obvious that you should know what you’re trying to accomplish before you do it, but you’d be surprised how often executives skip this phase in their rush to “do data.” Consider a food delivery startup that sets out to “reduce delivery times by 15% in high-traffic urban areas.” That level of specificity guides every subsequent decision, from which data sources to collect (GPS timestamps, traffic conditions, driver schedules) to which performance metrics really matter. If a data science team dives in without an explicit target, they risk building solutions that are technically impressive but disconnected from the company’s actual pain points—an all-too-common scenario in corporate analytics (Atlan Insights, 2023).

Once the business goal is nailed down, teams move to Data Understanding and Data Preparation. This is where many projects discover that their data is incomplete, riddled with errors, or locked away in some archaic legacy system that requires a Rosetta Stone to decode. And yet, as unglamorous as it sounds, this is the fulcrum on which everything else pivots. No amount of machine learning wizardry can salvage a dataset marred by missing values, wildly inconsistent entries, or—my personal favorite—columns that were mislabeled for years because nobody noticed. Systematic cleaning, integration, and feature engineering can often turn junk data into a goldmine of insights, but it requires both perseverance and collaboration among IT, domain experts, and data scientists (MIT Sloan Management Review, 2020). Think of it as prepping ingredients before cooking a gourmet meal: if you don’t do it thoroughly, the final dish is guaranteed to disappoint.

In the Modeling phase, organizations finally get to the fun part: choosing and testing algorithms. Options range from simple statistical approaches (like linear or logistic regression) to more advanced machine learning and deep learning tools (Davenport and Harris, 2007). The uninitiated sometimes believe there’s a magical “best algorithm” that will solve all their problems. In reality, the right choice depends on your data, your computational resources, and your business objectives. An insurance firm seeking to detect fraud may deploy anomaly detection methods, iterating among various algorithms to find the best balance of false positives and missed fraud cases (Business Case Studies, 2024). This is rarely a linear process. Insights gleaned from early trials may reveal data gaps, forcing the team back to the drawing board—or at least back to data preparation—to fill the holes and refine feature sets.

The Evaluation stage validates whether the model meets the specific KPIs identified in the business understanding phase. If the intended goal was to reduce customer churn by 10%, does your predictive model actually forecast churn with enough accuracy to achieve that? If not, you might return to an earlier stage to gather additional data or refine your approach (LaValle et al., 2011). Anchoring evaluation in measurable business outcomes ensures that analytics teams aren’t celebrating a model’s high accuracy only to discover it fails to move the needle on actual revenue or cost savings. Academic studies frequently stress that data science is cyclical, with each iteration refining the project’s scope and improving the solution, rather than a one-and-done affair (NewVantage Partners, 2020).

When your model proves it can deliver the promised results, the final hurdle is Deployment, where the rubber meets the road. Many organizations fumble at this step, treating a successful proof-of-concept as the endgame. CRISP-DM, however, underscores that you haven’t really succeeded until the model seamlessly integrates into daily operations, influencing decisions or automating processes in real time (Shearer, 2000). Think of a logistics provider that automatically adjusts driver routes based on predictive traffic models, saving drivers the hassle of deciding themselves—and reducing delivery times in the process. Or an insurance company that implements a risk-scoring system so adjusters immediately know which claims merit extra scrutiny. Real-world deployment also demands thoughtful change management: if frontline employees don’t trust or understand the system, they’ll ignore it—no matter how many data-driven accolades it earned in the pilot phase (Barton and Court, 2013).

CRISP-DM aligns neatly with broader organizational frameworks that encourage data maturity and strategic integration. Barton and Court (2013) discuss how data-centric cultures thrive by embedding analytics at every level, creating a “virtuous cycle” where early wins encourage further adoption. Davenport and Harris (2007) similarly highlight that strong analytics leadership can push data science projects out of siloed labs and into the operational bloodstream of the firm. Ultimately, this lifecycle approach isn’t just about ticking off a checklist. It’s about forging a disciplined mindset that recognizes the messy realities of data, the iterative nature of model development, and the human side of adoption. Because no matter how advanced your algorithms, if the people in your organization refuse to use them, you might as well be peddling snake oil.

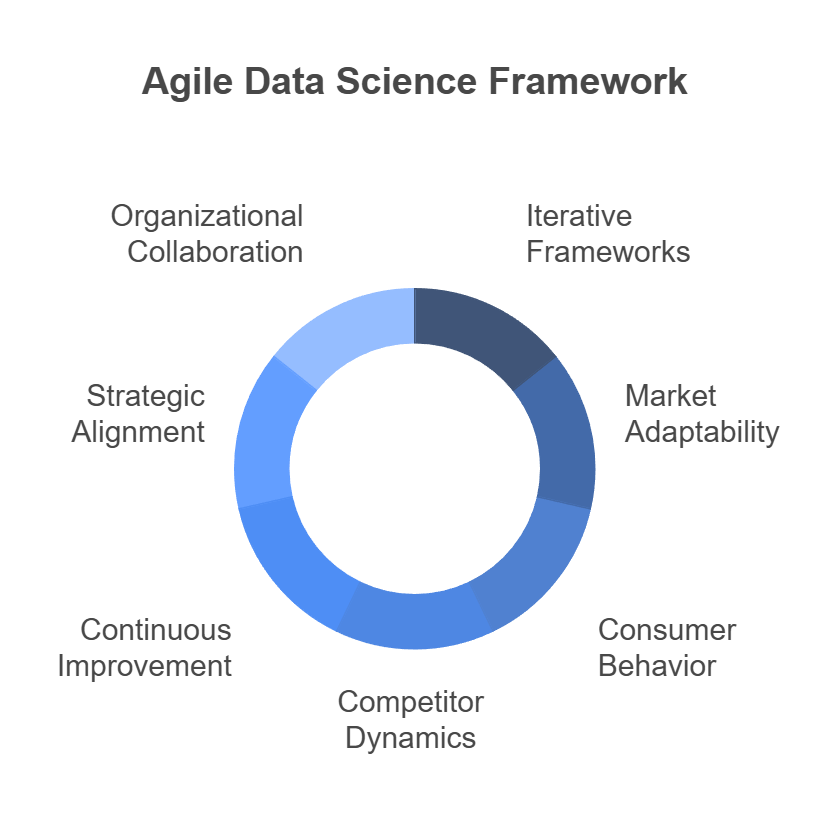

Figure 3: Illustration of the need for a collaborative and iterative approach to data science in today's fast-paced business landscape. (Image by Napkin).

The iterative nature of frameworks like CRISP-DM becomes all the more vital in fast-moving markets, where yesterday’s “perfect model” becomes obsolete as consumer behaviors shift or new competitors arrive (OneData AI Insights, 2025). A willingness to revisit earlier stages, pivot, and integrate fresh data ensures that your analytics solutions remain relevant. Businesses that cling to rigid processes run the risk of being outpaced by more agile rivals who embrace continuous improvement and learning. When done right, the data science lifecycle becomes a living, evolving practice—one that aligns data-driven insights with strategic goals, fosters organization-wide collaboration, and offers a sustainable path to innovation.

In essence, the data science lifecycle is more than a technical sequence; it is an organizational blueprint for how to think about, structure, and execute analytics in a way that delivers tangible, measurable value. It demands cross-functional buy-in, rigorous quality control, and a culture willing to embrace iterative learning. In return, companies gain the ability to transform raw data—often messy and unwieldy—into a potent catalyst for growth, differentiation, and adaptation in a business world that shows no mercy to the slow or unprepared (Atlan Insights, 2023). When framed as a cyclical, evolving process rather than a one-off project, data science serves not just as a singular initiative but as a company-wide capability that continually refines, recalibrates, and redefines competitive advantage.

2.4. Data-Driven Decision Making and Strategy

Data has evolved from a byproduct of business transactions to a critical force driving modern strategy, innovation, and operational agility. Academics in organizational behavior and information systems have long contended that firms leveraging empirical evidence adapt more effectively to volatility and competitive threats. This perspective, once considered novel, has become mainstream with studies like Brynjolfsson et al. (2011) showing that data-savvy companies routinely outperform peers reliant on managerial hunches. From the industry side, the meteoric rises of Amazon, Stitch Fix, and others exemplify how continuous, data-fueled refinement in processes or customer engagement can create an unassailable lead over traditional incumbents (MacKenzie et al., 2013).

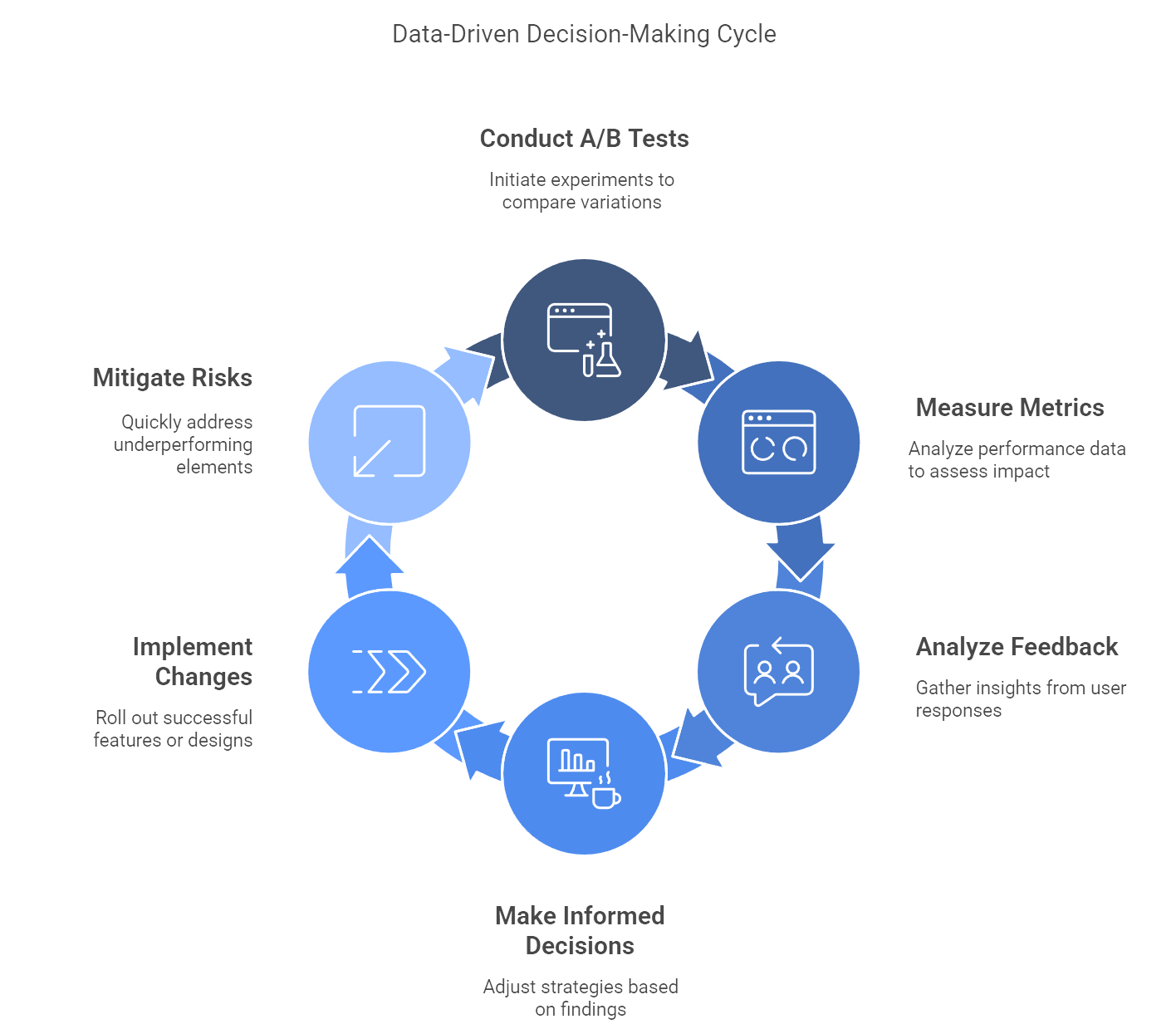

Figure 4: Illustration of a circular process of testing, measuring, and refining strategies based on data and feedback. (Image by Napkin).

A signature feature of data-driven decision making is the discipline of rigorous experimentation—often called a “test-and-learn” mindset. Amazon famously embodies this through a relentless parade of A/B tests, where design changes, new features, or product recommendations are all trialed against a control version. Although to outsiders it may appear as if the company innovates nonstop, each rollout is backed by carefully measured metrics such as conversion rates or user feedback. Davenport and Harris (2007) highlight that this approach mitigates risk: an underperforming feature is swiftly pulled before excessive costs pile up. Over time, this accumulation of small-scale learning drives long-term strategic gains, a reality that organizations across retail, healthcare, and financial services have begun to replicate through structured experimentation protocols (OneData AI Insights, 2025).

Yet shifting a firm’s culture to embrace data-driven rigor is not just a matter of installing dashboards or hiring data scientists. Leadership must actively champion a culture of objective inquiry, repeatedly emphasizing that data, rather than personal preference or office politics, should anchor strategic choices (MIT Sloan Management Review, 2020). All too often, executives cling to outdated products or processes they helped build, even when analytics clearly signals inefficiency or diminishing returns. By contrast, an organization committed to data-driven thinking encourages employees to question decisions, even those steeped in long-standing practice, and to test alternatives on a smaller scale before scaling any successful innovation (Business Case Studies, 2024). For instance, a global consumer goods firm might pilot a predictive model to reassess its entire product lineup, identifying underperforming brands that no longer resonate with shifting consumer tastes. Instead of dismissing these findings, data-savvy executives would validate them through a limited rollout—perhaps discontinuing specific items in certain test markets—thus reducing the emotional baggage that can impede rational decision making.

Academic frameworks such as the Analytics Maturity Model map how organizations progress from basic descriptive reporting to robust predictive or prescriptive analytics, highlighting key inflection points along the way (RIB Software Blog, 2024). At the highest level of maturity, analytics infuses everyday operations so thoroughly that employees routinely consult data before allocating budgets, redesigning processes, or tweaking products. Barton and Court (2013) note that such companies often exhibit agility reminiscent of “dynamic capabilities” in strategic management—an ability to reconfigure assets and processes nimbly in response to shifting market signals. Organizations with advanced analytics capabilities also integrate machine learning tools that feed real-time experimentation at scale. In retail, for example, dynamic pricing engines can instantly respond to changes in consumer sentiment or competitor behavior by adjusting discounts, thereby optimizing profitability almost continuously.

The Balanced Scorecard, originally focusing on financial, customer, internal process, and learning metrics, has also been adapted to incorporate data-centric key performance indicators (KPIs) (Atlan Insights, 2023). This shift ensures that data-driven findings are measured and monitored at the strategic level. A company intent on scaling e-commerce operations might, for example, add KPIs around online conversion rates, average order values, or recommendation-engine effectiveness. By anchoring these measures to broader corporate goals—like expansion into untapped markets—leaders maintain a holistic view of how analytics supports overarching objectives rather than remaining an isolated technical endeavor. Over time, continuous measurement against these KPIs fuels a feedback cycle where data informs every stage of strategic planning and execution.

Data-driven decision making also proves fertile ground for innovation. Stitch Fix, for instance, uses algorithms to match customers with personalized fashion choices, systematically refining its recommendations through ever-growing data loops (MacKenzie et al., 2013). In doing so, the company revolutionized how consumers shop for clothes, blending human stylists with machine-generated insights to achieve personalization at scale. Established enterprises can adopt a similar approach by layering data-driven experimentation on top of legacy systems—perhaps testing new product lines in micro-markets, analyzing real-time feedback, and then swiftly scaling successes. NewVantage Partners (2020) documents that such iterative, data-led innovation fosters both incremental improvements and the occasional leap of radical change, all while controlling risk by testing ideas in manageable chunks.

In practice, the journey toward data-driven strategy is often derailed by cultural inertia, departmental silos, or projects misaligned with corporate goals. Effective leaders tackle these pitfalls through clear vision-setting: they publicly laud analytics-informed decisions, tie incentives to data-backed initiatives, and maintain transparency about project outcomes—even if some fall short of expectations. At the same time, they remain alert to the limitations of purely quantitative methods, recognizing that data, while illuminating, cannot always capture the intangible nuances of market psychology or unforeseen global disruptions (Barton and Court, 2013). The ideal data-driven culture thus fosters a healthy balance: executives apply rigorous empirical evidence where feasible but remain open to strategic leaps guided by experience, intuition, or external events data cannot fully anticipate (OneData AI Insights, 2025).

Ultimately, weaving data insights into the strategic fabric is less about sporadic analytics triumphs and more about creating a sustainable operating model in which data shapes daily decisions, resource allocation, and long-term planning. Embracing frameworks like the Analytics Maturity Model or reimagining the Balanced Scorecard to include advanced KPIs can help leaders ensure that data science becomes a permanent—and self-reinforcing—fixture in corporate strategy. Beyond operational efficiency, this integrated model equips firms to not just react but actively shape market trends, discovering new opportunities and mitigating risks well before less data-savvy rivals see the writing on the wall. As subsequent chapters will illustrate, genuinely data-driven companies use these insights to reimagine everything from product development to organizational design, setting the stage for transformative breakthroughs and sustained competitive gains in a marketplace defined by relentless change.

2.5. Organizing for Data Science: People, Teams, and Culture

Data has surged to the forefront of modern commerce, making it not just a passing trend but a permanent fixture in how companies vie for competitive advantage. The allure of data-driven initiatives is clear: capture the right insights at the right time, and you can seize new market opportunities and shield your organization from looming threats. Yet as Davenport and Patil (2012) famously observed, the best algorithms and advanced tools are wasted without the right human and cultural framework behind them. From a practical standpoint, real-world case studies consistently show that how a company structures its data science function can determine whether those brilliant “aha” moments ever make it out of the analytics lab and into board-level strategy sessions (Bean, 2020). As OneData AI Insights (2025) aptly notes, in today’s data-saturated environment, assembling the right people and forging the right cultural mindset has become an urgent business imperative rather than a lofty ideal.

Data science success hinges on assembling a specialized blend of roles. At the core stand data scientists, professionals versed in everything from regression to deep learning who transform raw data into predictive models or revealing visualizations (Davenport and Patil, 2012). Equally important are data engineers, the unsung heroes who build and maintain the complex pipelines that funnel raw data into usable formats. If data scientists are the artists, data engineers are the ones priming the canvas to ensure every brushstroke lands exactly where it should. Then there are analytics translators—sometimes called business analysts on steroids—who interpret nuanced model outputs for executives and frontline staff alike (Henke et al., 2018). These translators help business stakeholders articulate relevant questions and contextualize data insights within commercial realities, bridging the all-too-familiar gap between the technical and the practical (Atlan Insights, 2023). In this cross-functional ensemble, each role brings distinct strengths: the engineer ensures data integrity, the scientist explores advanced methods, and the translator ensures insights find a warm reception outside the IT department.

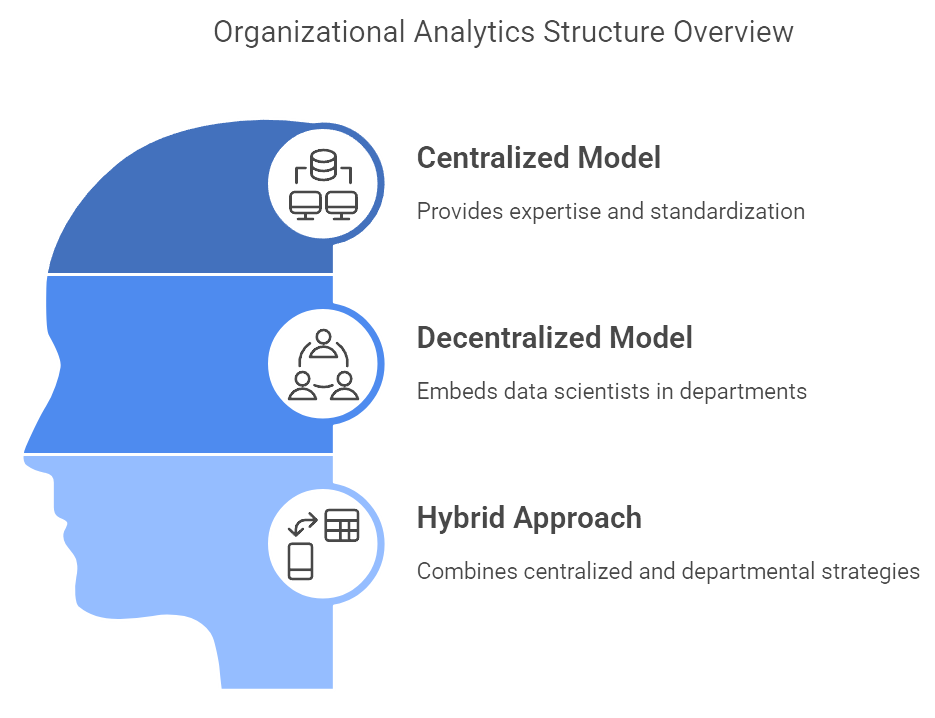

Figure 5: Illustration of different approaches to organizing analytics teams within a company, highlighting the benefits of each model. (Image by Napkin).

The question of organizational structure frequently becomes a point of debate. Some companies opt for a centralized analytics function or Center of Excellence (CoE). This model can provide a critical mass of expertise, standardized tools, and consistent methodologies, preventing every unit from inventing its own half-baked data processes. Procter & Gamble, for example, has famously leveraged a CoE to embed specialized project teams into various divisions, marrying the benefits of centralized governance with domain-specific insights (Business Case Studies, 2024). Others prefer a decentralized model, embedding data scientists directly within individual departments such as marketing, supply chain, or HR. This approach can accelerate project lifecycles and keep analytics close to real-world challenges, though it may also breed inconsistency and data silos if governance is lax (Davenport and Harris, 2007). In reality, many organizations evolve toward a hybrid approach, blending centralized oversight with department-level autonomy. Whichever structure is chosen, the ultimate aim is the same: put the right expertise in the right place at the right time, all while maintaining a consistent vision for how data shapes the company’s strategic trajectory.

Of course, no amount of technical excellence can overcome a lukewarm or outright hostile culture. Scholars have long recognized that culture often plays the starring role in determining whether data gets used or ignored (MIT Sloan Management Review, 2020). Senior leaders must create an environment where data-based inquiry is not just tolerated but actively encouraged, even when it challenges sacred cows or legacy products. This might include highlighting success stories—like the logistics team that slashed inventory holding costs by applying predictive models, or the HR unit that used attrition analytics to retain high-value employees. Publicizing these wins sends a powerful message that data is not some abstract IT artifact but a tool for tangible, bottom-line impact (Barton and Court, 2013). Conversely, organizations plagued by turf battles and departmental fiefdoms often find that data-driven efforts languish in obscure dashboards or endless proof-of-concept loops that never scale to enterprise impact. Bean (2020) notes that creating a true analytics democracy, in which employees at all levels can propose data-related experiments, fosters a continuous learning mindset and prevents the siloed thinking that stalls so many promising initiatives.

Formal frameworks can guide companies along this complex journey. Davenport’s Analytics Maturity Model, for instance, outlines a path from rudimentary descriptive metrics to prescriptive solutions that actively shape business outcomes (RIB Software Blog, 2024). As organizations move up the maturity ladder, they are expected to develop not just technical sophistication but also leadership buy-in, governance structures, and cultural norms that encourage experimentation. Some executives also adapt the Balanced Scorecard to track data-specific KPIs—such as the accuracy of a machine learning model or the speed at which data is refreshed. Linking these metrics to overarching goals, like increased market share or improved product margins, keeps analytics in tight alignment with commercial objectives (OneData AI Insights, 2025). It also provides a mechanism for ongoing feedback: every successful deployment refines best practices, while any failures highlight where talent is lacking or organizational support has faltered.

Leadership, of course, is the linchpin. When executives champion data as a pivotal asset—rather than a nice-to-have—they catalyze a shift in mindset that trickles down to the rank and file (Barton and Court, 2013). This often involves publicly interrogating decisions through a data lens (“Show me the numbers behind this initiative”) and resourcing analytics teams adequately so they can hire specialized talent and build robust infrastructures. Leaders must also navigate potential collisions between legacy processes and new data-driven insights. When data challenges an entrenched assumption—perhaps showing that a beloved product is hemorrhaging money—it helps if senior leadership demonstrates a willingness to change course based on clear evidence (NewVantage Partners, 2020). In doing so, they model the very behavior they hope to instill across the company: a bias toward empiricism over ego or departmental protectionism.

In the final analysis, effective organization for data science demands a delicate blend of roles, structures, and cultural imperatives. It’s not enough to cobble together a superstar data science team if they operate in a vacuum, nor is it wise to scatter data-savvy professionals across the enterprise without any centralized coordination. The sweet spot is a coherent ecosystem in which people with complementary skills collaborate under a unifying strategic vision, guided by governance that maintains data quality and consistency while still permitting local innovation (OneData AI Insights, 2025). By investing in the right talent, calibrating the organizational model, and cultivating a data-friendly culture from the top down, companies transform analytics from a sporadic experiment into a foundational engine of competitive advantage. This shift doesn’t happen overnight, but as subsequent chapters will illustrate, the long-term payoff—greater agility, more informed decisions, and a culture of continuous learning—makes the journey well worth the effort.

2.6. Applications of Data Science in Business

Data has evolved from a mere byproduct of corporate operations into a pivotal catalyst for strategic advantage, shaping everything from product innovation to how companies forge deeper relationships with their customers. Researchers framed this transition through resource-based theories, suggesting that data, when managed adeptly, becomes a rare and inimitable asset that can keep competitors at bay (Barton and Court, 2013). Meanwhile, market analysts confirm that businesses weaving analytics into their core functions—from marketing and sales to operations and finance—exhibit greater agility and resilience in today’s frenetic commercial landscape (MIT Sloan Management Review, 2020). In a world where consumer preferences shift overnight, the ability to anticipate demand, optimize supply chains, and personalize user experiences is fast becoming the difference between enduring relevance and rapid obsolescence (OneData AI Insights, 2025).

Marketing departments once treated segmentation as a polite guess about which demographics might respond to a campaign. Data science has turned that guess into a high-precision discipline. Instead of blindly advertising a one-size-fits-all promotional offer, retailers leverage machine learning to parse transaction histories, clickstream data, and even geospatial patterns to create microsegments of customers with similar buying behaviors (Business Case Studies, 2024). Global giants like Walmart blend real-time inventory data with weather forecasts and event calendars to predict which products will see a surge in coming weeks. When that winter storm is on the horizon, Walmart doesn’t just stock extra hot chocolate; it might also ramp up supplies of popular board games or comfort foods based on past purchase patterns. Netflix offers a parallel in the entertainment domain, using recommender systems that sift through viewing histories, user ratings, and watch durations to suggest new series or movies. Though you might joke that Netflix knows your preferences better than your closest friends, its sophisticated algorithms keep you watching, reduce churn, and foster long-term loyalty (Atlan Insights, 2023). These personalized experiences aren’t a corporate vanity project; they illustrate how the strategic use of data can radically boost customer satisfaction—and the bottom line.

In finance, data science has become the shield against fraudulent transactions and the enabler of more inclusive credit underwriting. Traditional banks typically looked at a limited set of variables—credit scores, employment history—to decide if you deserved a loan. Today, fintech upstarts blend these conventional metrics with alternative data, such as utility payments or social media signals, to lend to “thin-file” consumers who might otherwise be excluded (Davenport and Harris, 2007). On the fraud detection front, anomaly detection algorithms keep an eye out for out-of-pattern spending, like a sudden flurry of overseas purchases on a card that rarely crosses state lines. By freezing or flagging these suspicious transactions in real time, financial institutions bolster trust and slash losses. Insurance companies are also dipping into this realm with sensor-based policies (telematics) that capture a driver’s speeding habits or braking style. Rewarding safer drivers with lower premiums isn’t just a marketing gimmick; it’s a data-driven recalibration of risk models that align behavior with price. This ongoing infusion of data transforms stodgy financial services into dynamic, tech-savvy ecosystems (OneData AI Insights, 2025).

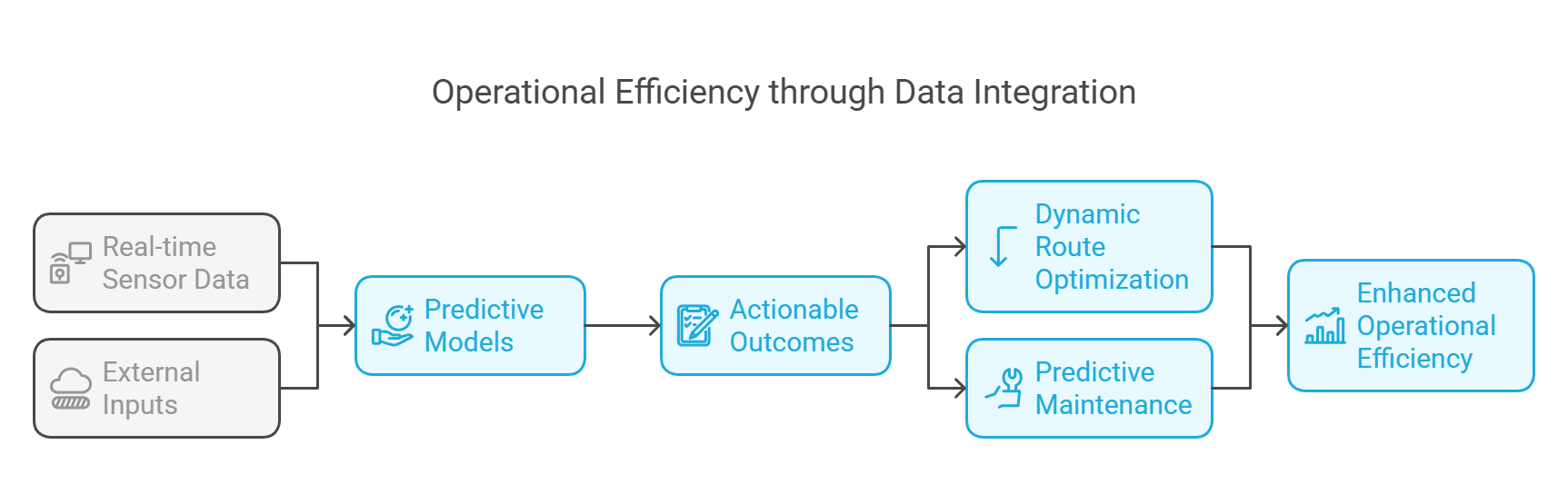

Figure 6: Illustration of how integrating real-time sensor data and external inputs leads to enhanced operational efficiency through predictive models, actionable outcomes, dynamic route optimization, and predictive maintenance. (Image by Napkin).

Supply chains might be the least glamorous side of business, yet they’re often the most data-rich. Logistics operators like UPS have famously exploited analytics to re-route deliveries in real time, cutting down on fuel costs and improving punctuality (Yoo, 2017). Think of it as a giant puzzle: at any given moment, thousands of trucks, vans, and airplanes are in motion, all needing to get from A to B without succumbing to traffic jams, bad weather, or mechanical snafus. Data science turns that chaos into an opportunity, predicting likely disruptions and suggesting alternate routes before managers even realize there’s a bottleneck forming. Manufacturing follows a similar trajectory with predictive maintenance, where machinery outfitted with sensors can send out early-warning signals for breakdowns. Instead of waiting for a conveyor belt to grind to a halt mid-shift—and paying dearly for emergency repairs—plants can schedule maintenance at off-peak times, reducing downtime and overall costs (Barton and Court, 2013). In sum, the operational efficiencies gleaned from advanced analytics create a ripple effect, letting businesses trim overhead, keep customers happier with on-time deliveries, and free resources to explore new markets or products.

While HR might not conjure images of advanced modeling, people analytics has shaken up how companies recruit, develop, and retain talent. By analyzing engagement surveys, communication patterns, and performance metrics, organizations can spot high-potential employees who might benefit from leadership development programs or identify a department prone to burnout before resignations skyrocket (Business Case Studies, 2024). When Amazon used predictive analytics to refine hiring in its fulfillment centers, it wasn’t just about filling job vacancies faster, but also about matching candidates to roles where they’d thrive long-term. This data-driven approach extends to retention strategies, where subtle indicators—a dip in engagement scores, a team’s sudden decrease in cross-functional collaborations—can warn HR leaders to intervene. Critics might worry about Big Brother-esque overreach, but responsible, well-governed people analytics helps companies retain talent, cut turnover costs, and cultivate a more engaged workforce. It also provides a valuable reminder that data science isn’t just about hooking new customers; it’s about nurturing the people who keep the company running.

Whether refining marketing campaigns or optimizing machinery schedules, companies frequently rely on structured methodologies like CRISP-DM (Davenport and Harris, 2007). This cyclical process moves from clarifying the business question to data cleaning, modeling, evaluation, and finally real-world deployment. Firms integrating advanced analytics into Balanced Scorecards further embed data-driven goals—like reducing delivery times or boosting recommendation accuracy—into corporate performance metrics (Barton and Court, 2013). As these metrics tie more concretely to revenue or customer satisfaction, data-based wins accumulate, nudging organizational culture to embrace analytics even more (NewVantage Partners, 2020). Each success story—a marketing campaign that outperforms expectations or a supply chain pivot that dodges a damaging delay—reinforces the conviction that data isn’t just a technical necessity but a strategic keystone for growth.

These varied applications, from fraud detection to route optimization, collectively demonstrate the transformative capabilities of well-implemented data science. In an age of hyper-competition, products can be imitated, prices undercut, and brand loyalties fray. Data, however, can become the differentiating factor—especially when it’s combined with the right people, processes, and cultural mindset (Atlan Insights, 2023). Organizations that invest consistently in analytics infrastructure, talent development, and leadership support lay the groundwork for a cycle of continuous improvement. They detect emerging trends faster, pivot more deftly in response to market changes, and design experiences that keep customers and employees engaged. Over time, this approach matures into an enterprise-wide ethos where data isn’t an afterthought to strategy but the bedrock upon which strategic decisions and innovations are built.

2.7. Data Ethics, Privacy, and Governance

Data propels modern enterprises toward new heights of efficiency, innovation, and customer engagement. Yet this vast potential comes with significant ethical and regulatory complexity, which, if ignored, can unravel even the most sophisticated analytics initiatives. Academics have likened the rise of data science to earlier industrial revolutions, pointing out that disruptive technologies invariably produce unintended consequences alongside their economic gains (Barton and Court, 2013). On a more tangible level, executive teams are learning that the public is far less forgiving of data mishaps—especially those involving privacy breaches—than in the early days of digital commerce. In a world where a single controversy can catalyze legal battles and brand erosion, leaders must adopt governance models that strike a careful balance between leveraging data for competitive edge and upholding stringent ethical and legal standards (MIT Sloan Management Review, 2020).

Regulatory frameworks have emerged worldwide to codify these heightened expectations, with the European Union’s General Data Protection Regulation (GDPR) serving as a prime example of powerful legal constraints. Aon Professional Services (2020) reminds us that GDPR imposes steep fines—potentially four percent of global revenue—on violators, signaling how seriously regulators view data privacy and consumer protection. Meanwhile, similar laws in California, Brazil, and elsewhere reflect a global trend toward reinforcing consumer rights over how their data is collected, stored, and monetized. In practical terms, these regulations force organizations to rethink seemingly innocuous data practices, such as capturing user clicks or location data “just in case,” and push them to adopt data minimization principles that restrict usage to well-defined business goals. The Facebook-Cambridge Analytica scandal demonstrated how quickly public sentiment can turn against a brand suspected of exploiting personal information for dubious purposes, a reminder that regulatory compliance is only part of the equation—firms must also maintain public goodwill (Business Case Studies, 2024). This dynamic aligns with the notion of a “social license to operate,” wherein corporate data use remains contingent on consumer trust and transparency.

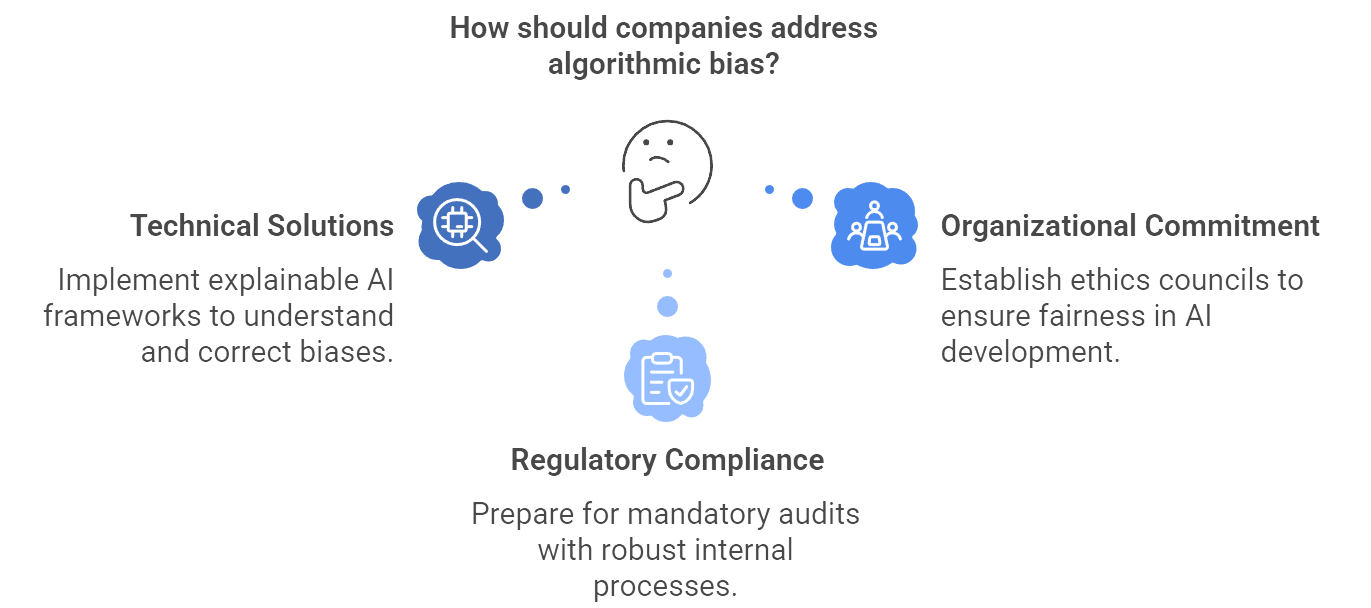

Figure 7: Illustration of exploring solutions to algorithmic bias through technical development, ethical governance, and adherence to regulatory standards. (Image by Napkin).

Algorithmic bias introduces another ethical minefield. The infamous case of Amazon’s AI hiring tool, which penalized female applicants because it was trained on historical data skewed toward male hires, highlights how subtle prejudices can become enshrined in machine learning models (Dastin, 2018). Companies risk significant reputational damage if their algorithms discriminate against protected groups, either by denying services or presenting unfair pricing. From an academic standpoint, the challenge lies in detecting and correcting biases that may be embedded in both historical data and the model’s design. Technical solutions, such as explainable AI frameworks, allow data scientists and stakeholders to understand how a model arrives at its conclusions, making it easier to spot and address unintentional discrimination (Atlan Insights, 2023). However, solving algorithmic bias also demands an organizational commitment that reaches beyond data teams. Ethics councils or review boards can help ensure that fairness and inclusivity remain non-negotiable values throughout model development and deployment (Bean, 2020). As regulations expand, companies may face mandatory audits of AI systems, necessitating robust internal processes that demonstrate due diligence and responsible decision-making.

Governance stands as a crucial pillar for managing these multifaceted responsibilities. Well-defined governance frameworks clarify the scope and purpose of data collection, the level of access granted to various stakeholders, and how data is secured against breaches (Davenport and Harris, 2007). Steering committees or data councils often play an oversight role, coordinating data strategy across departments to eliminate silos and maintain consistent standards for data quality and usage. In highly regulated industries such as finance or healthcare, authorities require thorough documentation of how AI models operate and proof of ongoing performance checks. Barton and Court (2013) discuss how these demands dovetail with industry-driven calls for “responsible AI,” encompassing transparency around model lifecycles, regular retraining as inputs evolve, and safeguards against the misuse of sensitive attributes like race, gender, or religious affiliation. From a managerial perspective, embedding these ethical checks into existing frameworks like CRISP-DM ensures that data ethics is not an afterthought but an integral part of each project stage.

Cultural and leadership factors amplify the importance of any governance model. It’s one thing to write a data ethics policy; it’s another to ensure it is respected in everyday workflows. Executives must articulate a clear vision that explains not only the “rules” but the underlying rationale for maintaining high ethical standards (Business Case Studies, 2024). For instance, if teams feel that bias tests or privacy protocols are bureaucratic obstacles, they may quietly circumvent them. By contrast, a leadership culture that highlights the competitive advantages of ethical data use—ranging from stronger customer loyalty to fewer legal entanglements—encourages teams to see these requirements as integral to corporate success rather than red tape. Past missteps, whether a near miss or a public scandal, can serve as catalysts for refining governance practices and reasserting the company’s ethical commitments. As NewVantage Partners (2020) notes, a company that emerges stronger from a data-related crisis often does so because it used the event as a springboard to reinforce ethical norms and tighten its data governance architecture.

Ultimately, the ethical and legal dimensions of data science are not separate from the core business strategy but deeply entwined. Properly navigated, these concerns foster trust—a key asset in hyper-competitive markets where transparency can differentiate a brand. Mishandled, they can undermine even the most sophisticated analytics program, triggering both legal and PR nightmares. Organizations, therefore, must view ethics, privacy, and governance not as boxes to tick but as strategic imperatives woven through every data-driven initiative. This involves recalibrating corporate mindsets, instituting robust controls, and engaging in ongoing dialogues with regulators, customers, and broader society. By doing so, companies position themselves to harness the transformative potential of data while safeguarding the brand reputation and stakeholder trust that ultimately fuel long-term success.

2.8. Maximizing Value and Managing Performance

Data now occupies a central role in how modern organizations gauge success, identify growth opportunities, and hedge against market uncertainties. Historically, many executive teams relied on gut feel or piecemeal metrics, a strategy that worked tolerably in simpler markets but is increasingly precarious in a world of complex consumer behaviors and continuous technological shifts (Barton and Court, 2013). The rise of data-driven methods has revealed more dependable avenues for demand forecasting, dynamic pricing, and personalized customer strategies, delivering cost efficiencies and new revenue streams alike. From an academic perspective, this evolution parallels the broader recognition that data can serve as a rare, valuable asset that savvy enterprises exploit to keep competitors at arm’s length (MIT Sloan Management Review, 2020). Against this backdrop, learning to maximize the value of data initiatives and methodically track their performance has transformed from an optional add-on to an absolute strategic necessity (OneData AI Insights, 2025).

Aligning analytics projects with explicit business outcomes lays the foundation for sustained impact. Researchers often characterize this step as defining a “value hypothesis,” where executives articulate exactly how and why data science investments will translate into revenue gains, cost reductions, or improved customer satisfaction (LaValle et al., 2011). Think of a telecom provider aiming to slash churn rates in a specific pilot region by leveraging a predictive model that flags at-risk customers. Managers can measure the model’s success by comparing churn before and after intervention, validating the model’s real-world utility. If the pilot succeeds, the telecom can deploy the model at scale, potentially recouping millions in lost contract revenues. This approach ties analytics to a tangible outcome—retained customers—and forces clarity on what “success” really means. Without this initial alignment, data science efforts risk drifting into the realm of “interesting insights” that never anchor themselves to financial or strategic targets.

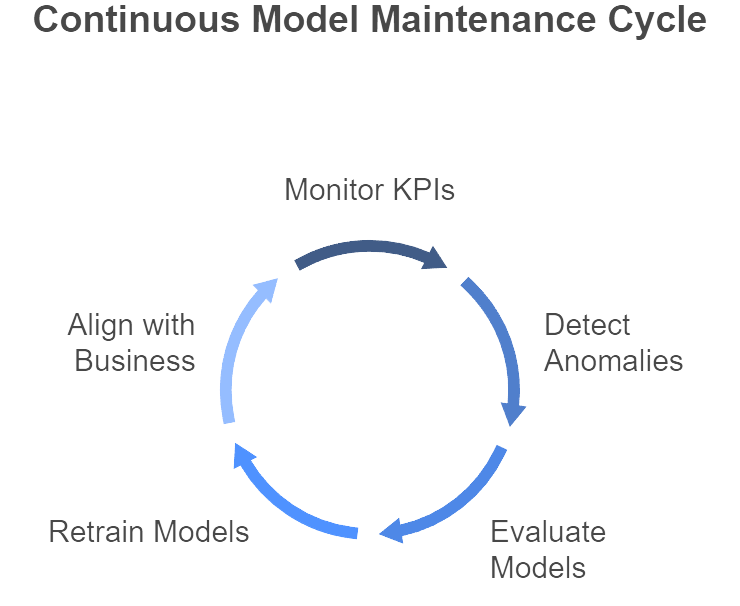

Figure 8: Illustration of the steps involved in keeping AI models accurate and effective through continuous maintenance. (Image by Napkin).

Maintaining and refreshing models is essential once they’re operational. Everything from evolving consumer tastes to policy changes can skew a model’s accuracy—an issue commonly known as model drift (Davenport and Harris, 2007). Machine learning operations (MLOps) practices mitigate these risks by establishing periodic checkups. A bank’s credit-risk model, for example, could lose its edge if the macroeconomy shifts or new lending regulations come into play. Regular evaluations and retraining sessions catch such drift early, preventing a quiet degradation that might suddenly manifest as spiking default rates. This iterative process often relies on methodologies like CRISP-DM, extended to incorporate post-deployment analysis. By monitoring KPIs (such as recall or precision) over time, data teams detect anomalies before performance drops severely, thus ensuring continuous alignment between analytics outputs and evolving business realities (Business Case Studies, 2024).

Measuring analytics initiatives is most effective when integrated into broader performance frameworks. Some organizations adapt the Balanced Scorecard to include data-specific metrics, such as predictive accuracy, lift in marketing campaigns, or cost savings from supply-chain optimizations. As these metrics sit alongside traditional financial and operational indicators, senior managers maintain a holistic view of how data initiatives interact with broader business objectives (Atlan Insights, 2023). This practice also fosters collaboration because teams in marketing, operations, and finance are jointly responsible for hitting these analytics-driven KPIs. Strong governance structures further ensure that data remains reliable and compliant, creating a virtuous circle in which better analytics produce better results, which then prompt deeper analytics adoption (Barton and Court, 2013).

Culture is the final lever for maximizing data value, and arguably the trickiest. No advanced model survives if front-line managers or department heads cling to gut instinct or outdated routines. MIT Sloan Management Review (2020) underscores that data projects often fail when an organization’s culture resists the mindset shift needed to embrace evidence-based decisions. Leaders play a critical role by highlighting early wins—perhaps a pilot that significantly cuts inventory costs or boosts customer retention—and showcasing them as proof that analytics drives measurable outcomes. Such successes, even if modest in scale, can flip the narrative from “data is an expensive experiment” to “data is fueling our competitive edge.” Just as crucial is encouraging teams to learn from near-misses or outright failures, seeing them as signals to refine data quality or revisit modeling assumptions, rather than reasons to abandon analytics (NewVantage Partners, 2020).

When these elements—clear objectives, consistent model maintenance, embedded performance metrics, and a data-friendly culture—coalesce, data science shifts from being an isolated technical undertaking to a transformative organizational capability. Tightly linking projects to meaningful business outcomes ensures immediate, verifiable impact. Ongoing model reviews ward off the natural decay of predictive accuracy in changing markets. Transparent performance dashboards keep everyone—executives, managers, and analysts—on the same page about what’s working and what needs recalibration (OneData AI Insights, 2025). Over time, these practices weave analytics into the company’s strategic fabric, setting the stage for greater operational efficiency, product innovation, and revenue expansion. As upcoming chapters will explain, operationalizing this ethos not only cements data science as a cornerstone of corporate strategy but also equips organizations with the agility and foresight required to navigate an increasingly unpredictable marketplace.

2.9. Conclusion and Further Learning

This chapter has provided a broad yet practical exploration of data science fundamentals for business leaders. From understanding essential analytical concepts and the CRISP-DM lifecycle to appreciating the complexities of enterprise data management, the discussion reveals that data science involves more than algorithms or technology alone. Its power is contextual, requiring alignment with strategic aims, cultural openness to experimentation, and sound governance mechanisms that safeguard ethics and privacy (Dastin, 2018). Organizations that have excelled—Netflix, Amazon, UPS, among others—demonstrate how analytics can illuminate hidden patterns, automate decisions, and enhance customer experiences in ways that continuously reinforce competitive positioning.

Yet, true mastery requires seeing data science as a perpetual journey. As consumer demands evolve, regulations tighten, and new technical breakthroughs emerge, businesses must remain agile, revisiting their assumptions and recalibrating their analytical methods. Effective leaders treat data science not just as an isolated function but as a core competency that informs every significant enterprise initiative, from routine process improvements to game-changing innovations (Henke et al., 2018). When coupled with ongoing ethical vigilance and robust measurement of ROI, data science can transcend its often-hyped status to become a reliable engine of organizational transformation, powering sustainable growth in an increasingly data-centric world.

Exploring data science more deeply beyond the foundations can significantly enhance a leader’s ability to innovate and steer their organization. The following prompts are designed to encourage in-depth inquiry and learning, harnessing the full capability of GenAI to provide rich insights. By engaging with these advanced questions and scenarios, business professionals can deepen their understanding of complex data science topics and discover new ways to apply analytics for strategic advantage. Each prompt invites exploration of a key theme or challenge at the intersection of data science and business, encouraging a nuanced understanding that builds on the chapter’s content. Use these prompts to delve into specific areas of interest, simulate real-world decision-making situations, and uncover expert perspectives. This deeper exploration will help consolidate your knowledge, reveal best practices, and spark ideas for how to leverage data science in your own business context. Embracing continuous learning in this way is crucial – data science is a fast-evolving field, and staying curious and informed will empower you to lead with confidence and vision. Let these advanced prompts be a springboard into the next level of mastery, turning foundational knowledge into actionable expertise.

Strategic Data Vision: “How can a company develop a comprehensive data strategy that aligns with its business objectives? Outline the key components of a successful data strategy (data collection, technology, talent, culture, etc.) and how a leader should prioritize them in a traditional enterprise.”

ROI of Analytics: “Provide a detailed analysis of how to calculate the return on investment (ROI) for a data science project. Include an example of a predictive analytics initiative – walk through hypothetical costs, direct and indirect benefits, and demonstrate how to quantify intangible benefits such as improved customer trust or brand value.”

Data-Driven Culture Change: “What practical steps can top management take to transform a company’s culture into a data-driven one? Discuss change management techniques, incentive structures, and training programs that have proven effective in embedding data-oriented thinking at all organizational levels.”

Case Study Deep-Dive: “Imagine you are the Chief Analytics Officer of a large retailer like Walmart. ChatGPT will walk you through a case study: Identify a major business challenge (e.g. inventory stockouts or personalization), propose a data science solution, and elaborate on how you would implement it (data needed, model choice, deployment) and measure its success.”

Emerging Technologies: “Explain the concepts of AutoML (Automated Machine Learning) and augmented analytics. How might these emerging technologies lower the barrier for businesses to use advanced analytics? Discuss the pros and cons of relying on AutoML tools from a leadership perspective – including issues of trust, talent, and competitive advantage.”

Ethical AI Dilemma: “Present a scenario where a bank’s AI loan approval model is found to be biased against a certain demographic. Ask ChatGPT to role-play as an ethics consultant: What steps should the bank take to address the bias? Consider technical fixes, governance changes, transparency with stakeholders, and potential policy advocacy. In your answer, explore the balance between fairness and business performance.”

Data Monetization: “How can companies monetize their data as an asset? Provide examples of data monetization models – such as data partnerships, selling insights, or using analytics to launch new data-driven services – and discuss the strategic and ethical considerations a business leader should evaluate for each model.”

Operational Analytics: “Dive into how real-time data analytics can transform operations. Use a manufacturing plant as an example: describe how streaming sensor data and IoT (Internet of Things) analytics might optimize production schedules, maintenance, and quality control. What infrastructure is required, and what challenges might arise in implementation?”

Customer Journey Analytics: “Outline how data science can map and improve the end-to-end customer journey. Starting from customer acquisition (marketing attribution modeling) to customer experience (sentiment analysis of feedback) and retention (churn prediction), ask ChatGPT to provide an integrated approach for using analytics at each stage. Include how insights from one stage (e.g. support tickets) can feed into improvements in another (product development).”

Natural Language Processing for Business: “Many companies have vast text data (emails, support chats, documents). Explore advanced uses of NLP (Natural Language Processing) in business: for example, semantic analysis for voice of customer programs, document classification for legal compliance, or chatbots for customer service. How can an executive evaluate which NLP application offers the most value and ensure the project succeeds (consider data preparation and model training specifics)?”

Scaling AI in the Enterprise: “What does it mean to industrialize or scale AI within an enterprise? Discuss the concept of an AI factory or centralized machine learning platform. How can a leader set up governance, tools (ML Ops), and teams such that dozens of AI initiatives can be developed, deployed, and maintained efficiently in parallel? Include how to handle model risk management at scale.”

Talent Development: “Detail a talent development plan for upgrading a traditional analyst team into a data science team over 24 months. What training would you provide? How would you redesign roles or hire new talent? Discuss mentorship, involvement of external experts, and creation of a knowledge-sharing culture (like lunch-and-learn sessions or internal conferences) to build advanced analytics capability internally.”

Quantifying Uncertainty: “Business decisions often require understanding uncertainty and risk. Ask ChatGPT to explain how data science models quantify uncertainty (prediction intervals, confidence levels, probabilistic forecasts) and how leaders should interpret these in decision-making. For example, how should a CEO use a sales forecast that gives a range (pessimistic to optimistic) rather than a single number? How can scenario analysis complement point predictions?”

Data Governance in Practice: “Imagine your company suffered a minor data breach due to a poorly governed data pipeline. You’ve been tasked to tighten data governance. Develop a plan with ChatGPT’s help: outline immediate actions (incident response, notification), root-cause fixes (access control, encryption), and long-term measures (governance policies, roles like data stewards, audit processes) to both remedy the breach and strengthen governance company-wide.”

Vendor Selection: “When outsourcing or purchasing data science solutions, what criteria should leaders use to evaluate vendors or platforms? Pose a scenario: your company is choosing a cloud analytics platform or an AI software vendor. Have ChatGPT compare critical factors such as scalability, cost, security, ease of integration, flexibility, and vendor support. Also, ask about how to assess vendor claims (due diligence on model performance and case references).”

Keeping Up with AI Trends: “The data science field evolves rapidly (e.g., new algorithms, AutoML, regulatory changes). Ask for strategies a business leader can use to stay informed and ahead: discuss participating in industry forums, establishing an external advisory board of AI experts, encouraging pilots of new tech (like GPT-4 or other advanced AI) in low-risk settings, and continuous learning for the leadership team. In the answer, highlight how leaders can filter hype from reality when faced with buzzwords.”

Data Visualization Mastery: “Data storytelling is vital for executives. Request ChatGPT to provide guidance on advanced data visualization and dashboard design principles that help leaders make decisions. Include discussion of when to use certain chart types, how to show uncertainty or targets vs. actuals, and how interactive dashboards can be structured for drill-down. Also, mention common pitfalls in interpreting visual data and how to avoid being misled by spurious correlations or optical illusions in charts.”

Cross-Industry Learning: “Often, innovations in analytics in one industry can be applied to another. Prompt a cross-industry comparison: e.g., ‘How have data science techniques used in e-commerce personalization inspired approaches in healthcare for personalized medicine, or in finance for personalized banking?’. By exploring analogies, identify creative ideas that a leader in one sector could borrow from another (like how supply chain optimization in retail could inform patient flow optimization in hospitals).”

AI Ethics and Profitability: “Explore the relationship between ethical AI practices and long-term profitability. Have ChatGPT discuss whether focusing on fairness, transparency, and privacy (which might limit some short-term capabilities) can actually enhance a company’s brand, avoid costly regulatory fines, and lead to better quality models (through diverse teams and datasets). Use evidence or examples where companies turned ethical considerations into a competitive advantage.”

Future Outlook – From Data to AI-Driven Enterprise: “Paint a vision of the near future (5–10 years) for a fully AI-driven enterprise. What will be the role of human leaders when AI handles many analytical and operational tasks? Ask ChatGPT to describe the evolving partnership between human intuition and AI recommendations in the C-suite. Cover expected advances like real-time scenario planning, AI assistants for every knowledge worker, and the skills future leaders will need (e.g., asking the right questions of AI and judgment to implement AI advice wisely).”

Each of these prompts is designed to unlock deeper understanding. By engaging with them, you can simulate high-level decision-making scenarios, get detailed analyses and best practices, and explore cutting-edge ideas in data science and business. Keep challenging yourself with such questions – the more you inquire and learn, the more adept you’ll become at harnessing data science for strategic success. Continue feeding your curiosity, and remember that the journey of mastering data-driven leadership is ongoing and rewarding. Happy exploring, and here’s to your growth as a data-savvy leader!

While knowledge is vital, there is no substitute for hands-on experience when it comes to mastering data science concepts. The following practical assignments are designed to help you, as a business leader, apply the chapter’s insights in real-world contexts. These exercises bridge theory and practice, giving you a chance to work through data-driven decision scenarios and refine your skills in a low-risk environment. Each assignment focuses on a core aspect of data science in business – from strategy formulation to project execution and ethical evaluation. By completing these assignments, you will deepen your understanding of how to initiate and guide data science efforts, interpret results, and make improvements. They are crafted to be comprehensive and reflective of real challenges you might face in your organization. Guidance is provided for each assignment, so you have a clear sense of how to proceed and measure success. Dive into these assignments with an open and analytical mindset. They will not only reinforce what you’ve learned in this chapter but also build confidence in your ability to lead data science initiatives. As you work through them, remember that making mistakes is part of learning – each iteration will sharpen your judgment. Let’s turn knowledge into action and accelerate your journey to becoming a data-driven leader.

🛠️ Assignments

📝 Assignment 1: Data Opportunity Assessment

🎯 Objective:

Identify a high-impact business problem that can be addressed with data science, ensuring it aligns with your organization’s strategic goals and available resources.

📋 Task:

Consult at least two departments (e.g., marketing, operations) to understand their pressing challenges or opportunities. Select one specific problem (e.g., reducing customer churn, optimizing delivery routes) and formulate a clear question that data science could answer—such as “Which customers are most likely to churn next quarter?” or “How can we minimize transportation costs in distribution?” Document the data you currently have and the data you might need. Outline how you would measure success, including both intermediate analytical metrics (e.g., model accuracy) and business KPIs (e.g., churn rate decrease, cost savings).

💡 Guidance:

| Aspect | Details |

|---|---|

| Steps to Complete: | Conduct informal interviews or meetings with stakeholders from different departments to gather potential problem areas. Choose a problem that is both significant (substantial impact on revenue, cost, or customer experience) and realistically solvable with data science methods given your existing data and resources. Write down the business question to be answered and identify the data sources (internal or external) that could be relevant. Specify the success metrics—for instance, a target reduction in churn or a percentage decrease in operational costs. |

| Evaluation: | Assess the potential impact of solving this problem: is it worth the investment of time and resources? Determine if the necessary data is sufficiently available and of acceptable quality. If not, note the gaps. Confirm stakeholder support—are the relevant departments willing to provide data and collaborate? |