Chapter 3

Aligning Data Science with Business Strategy and ROI

"Every large enterprise will need to become a technology company, and data is at the core of that transformation. If you can’t align analytics with real business outcomes, you’re missing the point." — Satya Nadella

This chapter emphasizes the importance of connecting analytics initiatives to measurable business value. It explains how frameworks such as the Balanced Scorecard, data strategy roadmaps, and OKRs keep data projects focused on broader corporate objectives, and it outlines approaches for identifying and prioritizing high-impact use cases through impact-feasibility matrices and cost-benefit analyses. ROI metrics ranging from NPV to payback period are introduced to quantify returns, while examples from finance, retail, healthcare, FMCG, and logistics demonstrate how well-implemented data solutions can safeguard profits, improve efficiency, and generate new revenue streams. The chapter also highlights the importance of managing risk through regulatory compliance, ethical AI guidelines, and rigorous governance. It concludes by showing how organizations can foster a data-driven culture, secure stakeholder buy-in, and prepare for future advances in real-time analytics, AI-driven automation, and the evolving role of the Chief Data Officer (CDO), ensuring that data science remains a robust catalyst for strategic differentiation and sustained growth.

3.1. Introduction to Data Science ROI

In the contemporary digital economy, aligning data science initiatives with a company’s strategic objectives is no longer a luxury but a critical factor in maintaining competitive advantage. Organizations that successfully integrate analytics into their broader business vision are more likely to exceed performance benchmarks and adapt quickly to changing market demands. Research from the McKinsey Global Institute suggests that data-centric firms can achieve substantially higher customer acquisition rates and profitability than those relying predominantly on intuition (McKinsey Global Institute, 2016). At the same time, many companies fall short of realizing tangible benefits because their analytics projects lack organizational context or a clear link to profitability. By one widely cited estimate, approximately 85% of big data initiatives fail to meet their stated goals (Asay, 2017), frequently because technical solutions are misaligned with actual business challenges. This section underscores the importance of a cohesive approach: when executives and data professionals collaborate to tie data science efforts directly to corporate goals, advanced analytics not only provides insights but also drives measurable returns. Such alignment helps businesses in finance, retail, healthcare, FMCG, and distribution/logistics seize growth opportunities, reduce operating costs, and cultivate long-term strategic differentiation in an increasingly data-driven world (Davenport and Harris, 2007).

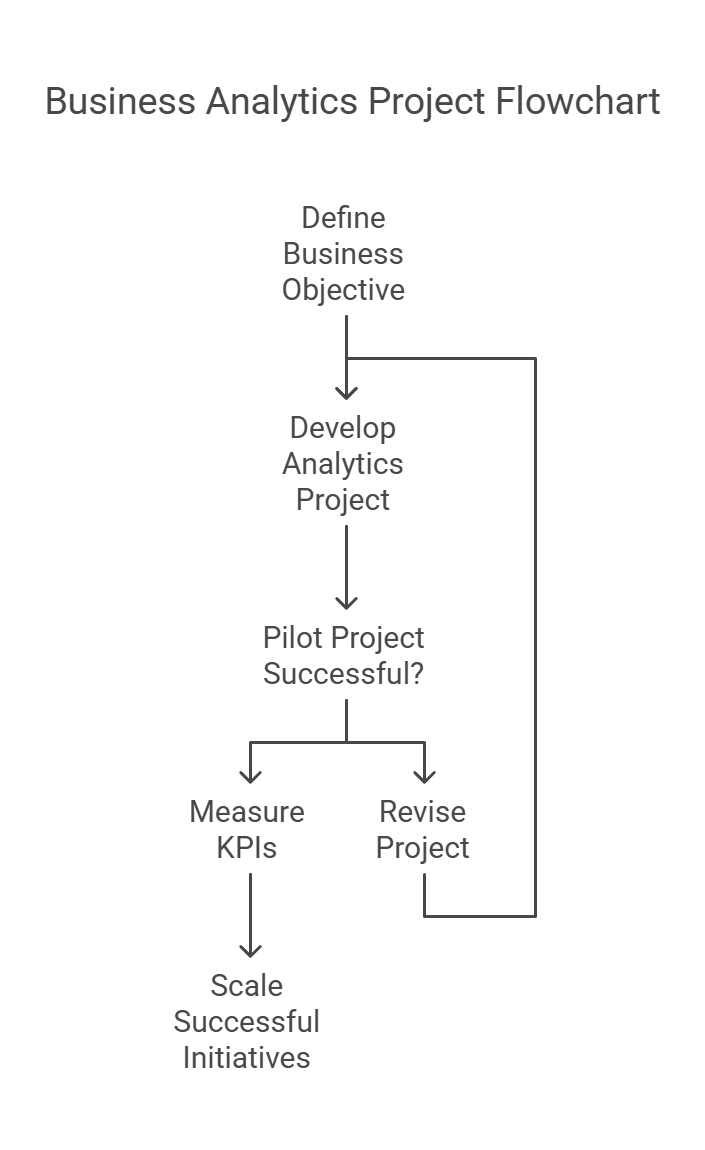

Figure 1: Illustration of a business analytics project, showing the path from initial objective to implementation and potential revisions. (Image by Napkin).

It may sound obvious, but data science is essentially the art (some prefer calling it “science”) of extracting actionable insights from raw information. At its core, it involves a combination of statistics, computer science, domain expertise, and a dash of healthy skepticism. The reason for this skepticism is straightforward: data in its raw form tends to be messy, full of errors, and occasionally downright misleading. Many executives are lured into the world of analytics under the premise that “big data will solve everything,” only to discover that a disorganized data pipeline is more likely to produce confusion than clarity. True value emerges when organizations align these analytical techniques—everything from simple descriptive statistics to complex predictive models—with real business challenges. This tight alignment ensures that the data science function is not just an isolated technical exercise but a strategic enabler capable of identifying new revenue streams, optimizing operations, or improving customer experience.

Data science success, particularly in a commercial context, rarely comes from ad-hoc experiments. It requires a structured, end-to-end process that begins with data collection and ends with deployed models producing measurable returns. In the collection stage, organizations gather relevant information from internal systems or third-party providers. The subsequent cleaning and preprocessing stage is where frustration tends to run highest: many leaders assume this phase is trivial, yet by commonly cited estimates data scientists spend as much as 80% of their time wrestling with inconsistent formats, missing values, and archaic file systems that “store” data in ways no human (or machine) can appreciate. Once the data is properly shaped, exploratory analysis uncovers initial patterns and outliers. This is the point where the “aha” moment might happen, if the data reveals an unexpected opportunity or risk.
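To make the cleaning stage less abstract, here is a minimal Python sketch that drops repeated records, normalizes mixed date formats, and keeps missing values explicit rather than silently filling them. The records, field names, and date formats are hypothetical; a production pipeline would more likely use a dataframe library and formats discovered during data profiling.

```python
from datetime import datetime

# Hypothetical raw records merged from two source systems
RAW_ROWS = [
    {"order_id": "A-001", "order_date": "2023-01-15", "amount": "120.50"},
    {"order_id": "A-002", "order_date": "15/01/2023", "amount": ""},
    {"order_id": "A-001", "order_date": "2023-01-15", "amount": "120.50"},  # repeated record
    {"order_id": "A-003", "order_date": "Jan 16 2023", "amount": "87"},
]

# Date formats assumed to appear in the sources; extend as profiling finds more
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d %Y")


def parse_date(text):
    """Return a date for the first matching format, or None if nothing matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    return None


def clean(rows):
    """Drop repeated order IDs, normalize dates, and convert amounts to floats."""
    seen = set()
    cleaned = []
    for row in rows:
        if row["order_id"] in seen:
            continue  # keep only the first record per order ID
        seen.add(row["order_id"])
        amount = row["amount"].strip()
        cleaned.append({
            "order_id": row["order_id"],
            "order_date": parse_date(row["order_date"]),
            # A missing amount stays None so it can be investigated, not imputed as 0
            "amount": float(amount) if amount else None,
        })
    return cleaned


for record in clean(RAW_ROWS):
    print(record)
```

Keeping the missing amount as `None` is a deliberate choice: whether to impute, exclude, or chase down the source record is a business decision, not something the cleaning step should make silently.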

From there, model building begins in earnest, leveraging techniques ranging from linear regression to advanced machine learning and deep learning algorithms. Although executives are often enchanted by the glamorous algorithms du jour—neural networks, anyone?—the most critical decision is not always which sophisticated approach to pick but how to ensure the model addresses a real business question. After all, the perfect model that solves the wrong problem remains absolutely useless. Once a promising model is developed, evaluation becomes crucial: teams must compare performance metrics against both technical benchmarks (e.g., accuracy, precision, recall) and commercial KPIs that reflect financial or strategic value. Finally, the deployment phase operationalizes the model within day-to-day business workflows, often requiring robust infrastructure, clear governance policies, and cross-functional cooperation so that the insights delivered by analytics tangibly affect decision-making.
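The sketch below illustrates why technical and commercial evaluation can disagree. It scores two hypothetical churn models on accuracy, precision, and recall, then on an assumed business value: each correctly flagged churner is worth a retained margin, and every flagged customer costs a retention offer. The labels, the value and cost figures, and the assumption that the offer always retains a true churner are illustrative only.

```python
def evaluate(y_true, y_pred, value_per_saved_customer, cost_per_offer):
    """Return technical metrics plus a simple net-value KPI for a binary classifier."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    tn = len(y_true) - tp - fp - fn
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        # Every flagged customer receives an offer; only true churners add value
        "net_value": tp * value_per_saved_customer - (tp + fp) * cost_per_offer,
    }


# 1 = customer actually churned (hypothetical labels)
actual = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
model_a = [1, 1, 0, 1, 0, 0, 0, 0, 1, 1]  # flags more customers
model_b = [1, 0, 0, 0, 0, 0, 0, 0, 1, 0]  # more conservative

for name, preds in (("A", model_a), ("B", model_b)):
    print(name, evaluate(actual, preds, value_per_saved_customer=200, cost_per_offer=30))
```

Under these assumptions, model B has the higher accuracy (0.8 versus 0.7), yet model A delivers more net value (450 versus 340) because it catches more of the customers who actually leave. Change the assumed value or offer cost and the ranking can flip, which is exactly why commercial KPIs belong in the evaluation.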

Data science is evolving rapidly, and commercial leaders must remain vigilant to maintain a competitive edge. Real-time analytics allows enterprises to react instantly to shifts in customer behavior or market conditions, providing strategic benefits in domains like fraud detection, dynamic pricing, and supply chain optimization. Deep learning techniques enable more nuanced pattern recognition, driving innovations in computer vision, natural language processing, and personalized product recommendations. Although these advanced methods seem promising—and indeed can unlock impressive returns—they come with their own set of pitfalls. Data-hungry models can intensify privacy concerns and ethical dilemmas, especially when organizations collect and analyze sensitive information. Balancing innovation with responsibility becomes a leadership imperative, ensuring that data-driven technologies do not alienate customers or violate regulatory constraints.

Successful data science initiatives hinge not just on technical prowess but on the culture that surrounds them. This starts with leadership buy-in at the highest levels of the organization. Without clear advocacy from the C-suite—complete with resource allocation and strategic objectives—even the most brilliant data scientists will find themselves adrift, creating analytical masterpieces that never see the light of day. Equally important is cross-functional collaboration. When domain experts, IT, finance, and operations teams work in silos, it is easy for projects to lose sight of commercial realities and get bogged down in technical complexity. Smart organizations integrate data science early into product, marketing, or operational strategy, creating a symbiosis where business leaders guide the analytical roadmap while data scientists translate objectives into actionable models.

One brutally honest truth is that fostering a data-driven environment often demands a transformation of organizational habits. Employees who have long relied on gut feeling might resist the introduction of metrics and models that question their intuition. Overcoming this resistance requires more than just telling everyone to “trust the data.” It involves training, open communication about the rationale behind analytical decisions, and sharing quick wins to highlight the tangible benefits of data-driven thinking. The overarching goal is to reach a stage where analytics becomes a natural part of daily work—not some arcane specialty that resides in an isolated department of “mad scientists.”

Ultimately, the ROI of data science in commercial settings boils down to the tangible impact on the bottom line and long-term strategic positioning. Organizations that succeed in this domain systematically tie analytics outputs to actionable business objectives. When a model accurately forecasts product demand, it is not merely an intellectual exercise—there should be a material reduction in inventory costs, a decrease in delivery lead times, or an increase in customer satisfaction. These results feed back into the business strategy, unlocking new opportunities for product innovation, market expansion, or cost reduction. The capacity for data science to drive repeated, measurable returns is what separates firms that treat analytics as a passing trend from those that use it to build enduring competitive advantages.

For leaders new to data science, the broad scope—from data wrangling to deep learning—may seem overwhelming. Yet, the key is to start with well-defined business questions and build out capabilities systematically. Even small, incremental improvements driven by analytics can compound into massive gains over time. For more advanced organizations already reaping the benefits of data science, the challenge lies in sustaining momentum, refining processes, and staying on top of emerging trends without getting lost in hype. Success requires ongoing investments in technology, talent, and (perhaps most importantly) culture. Treating data science as an integral facet of overall commercial strategy, rather than a standalone function, will help leaders create value that is both immediate and enduring.

3.2. Frameworks for Aligning Data Science with Business Goals

A common lament heard from business leaders is that data science teams churn out sophisticated insights, yet these breakthroughs never fully integrate with overarching commercial strategies. There is a reason so many data projects exist in a perpetual state of “trial” without ever contributing to the bottom line. Aligning advanced analytics initiatives with real business objectives demands the right frameworks—structures that prompt disciplined thinking, rigorous goal-setting, and coherent coordination across departments. When adopted properly, these frameworks become the connective tissue between boardroom-level strategy and the daily grind of data analysis.

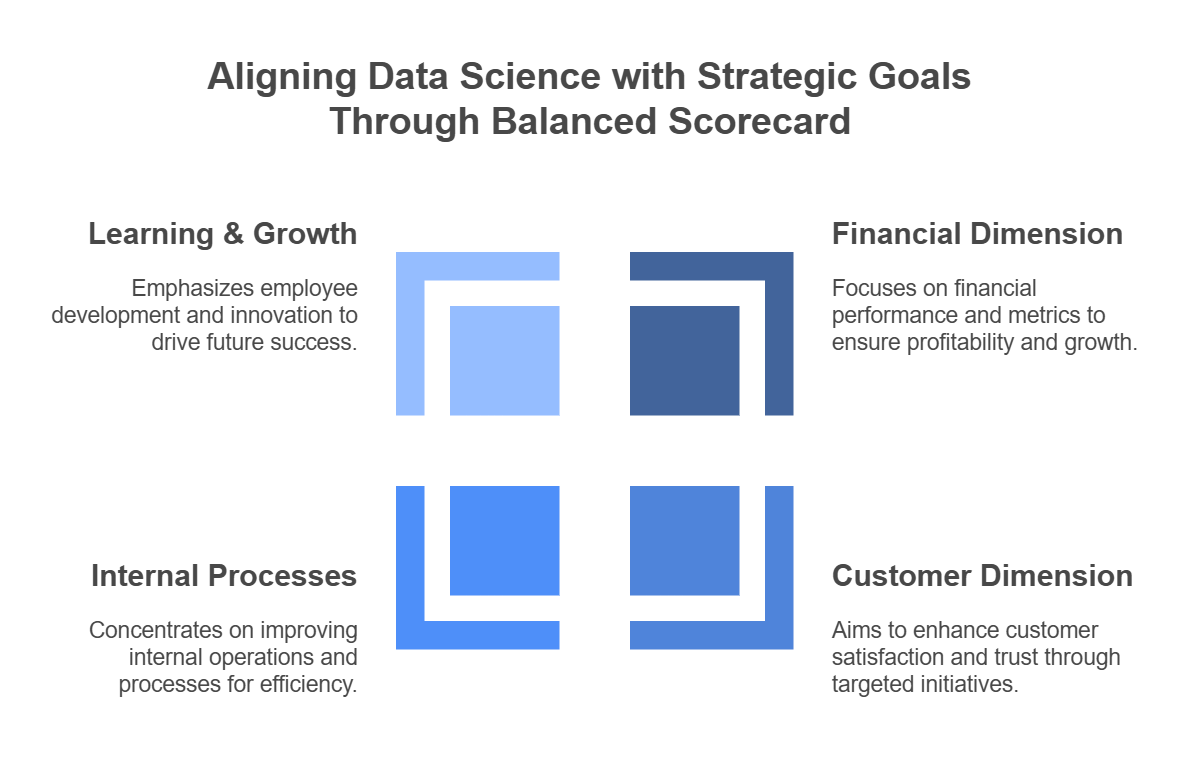

Figure 2: Illustration of the Balanced Scorecard applied to data science, showing how it connects employee development, financial performance, operational efficiency, and customer satisfaction. (Image by Napkin).

A particularly enduring approach to ensuring data science programs support larger corporate visions is the Balanced Scorecard. Introduced by Kaplan and Norton, this methodology translates a company’s strategic objectives into tangible goals across four core dimensions: financial, customer, internal processes, and learning and growth (Kaplan and Norton, 1996). By forcing businesses to examine each dimension, the Balanced Scorecard manages to inject an analytical pulse throughout the organization, preventing data science from descending into an isolated sandbox exercise. For example, a financial institution might identify “improving customer trust” as a key customer-related pillar of its Balanced Scorecard. The data science team, in turn, could design machine learning models to reduce credit risk and automate loan approvals, thereby hitting internal process improvement targets and boosting the overall brand’s reputation. The beauty of this approach is its simplicity: it demands that each data initiative demonstrate how it advances at least one quadrant of the organization’s performance metrics. This structure not only provides clarity but also forces executives and data scientists to speak a common language—an event so rare and valuable that it should probably appear on the company’s highlight reel.
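The rule that every initiative must advance at least one quadrant is simple enough to check mechanically during a portfolio review. The following Python sketch tags hypothetical initiatives with the Balanced Scorecard perspectives they claim to support, flags any that support none, and counts coverage per perspective. The initiative names and their tags are invented for illustration.

```python
from dataclasses import dataclass, field

# The four Balanced Scorecard perspectives (Kaplan and Norton, 1996)
PERSPECTIVES = ("financial", "customer", "internal_process", "learning_growth")


@dataclass
class Initiative:
    name: str
    perspectives: set = field(default_factory=set)  # quadrants the initiative claims to advance


def unaligned(initiatives):
    """Names of initiatives that advance none of the four perspectives."""
    return [i.name for i in initiatives if not (i.perspectives & set(PERSPECTIVES))]


def coverage(initiatives):
    """How many initiatives support each perspective; zeros reveal blind spots."""
    counts = {p: 0 for p in PERSPECTIVES}
    for initiative in initiatives:
        for p in initiative.perspectives & set(PERSPECTIVES):
            counts[p] += 1
    return counts


# Hypothetical portfolio for a financial institution
portfolio = [
    Initiative("Credit-risk scoring model", {"financial", "internal_process"}),
    Initiative("Automated loan approvals", {"internal_process", "customer"}),
    Initiative("Unscoped text-mining prototype"),
]

print(unaligned(portfolio))  # ['Unscoped text-mining prototype']
print(coverage(portfolio))
```

A zero in the coverage counts (here, learning and growth) is as informative as an unaligned project: it points to a perspective the data science portfolio is not serving at all.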

While the Balanced Scorecard focuses on aligning analytics with strategic outcomes, data strategy frameworks take a more holistic view of how data flows and is utilized across an entire enterprise (Laney, 2001). Think of them as broad-scale architectural blueprints that cover governance, technology infrastructure, data security, and analytics-driven use cases, all in service of a unified business vision. A bank determined to improve its investment portfolio performance might outline specific governance protocols for evaluating data accuracy, systems for integrating third-party market intelligence, and advanced predictive models for algorithmic trading. This comprehensive approach ensures that each data project not only meets immediate performance metrics (like shortened trade execution time) but also respects the bank’s obligation to regulatory compliance and the pursuit of digital transformation. By embedding data governance and architecture into the organizational DNA, these frameworks help executives avoid that frustrating scenario where brilliant analytics solutions can’t be scaled because nobody remembered to build adequate pipelines, choose flexible technology stacks, or (minor detail) abide by pesky regulations.

Objectives and Key Results, widely known as OKRs, offer yet another road-tested method for achieving alignment between data science and broader company targets (Doerr, 2018). In the spirit of healthy ambition, OKRs push teams to define objectives that aim high—sometimes uncomfortably so—alongside quantifiable key results that indicate progress toward those objectives. An FMCG company wanting to optimize demand forecasting might articulate an objective like “revolutionize inventory management to eliminate stockouts.” The corresponding key results could involve reducing stockout incidents by a specified percentage, lowering holding costs by a clear monetary figure, or hitting measurable improvements in replenishment cycle times. The operational clarity provided by OKRs ensures that data scientists have a sharp, unambiguous target, while executives gain the ability to track each project’s real commercial impact. Of course, setting wild objectives is far easier than achieving them, so it’s wise to establish realistic timelines and resource commitments, unless your organization enjoys the drama of half-baked analytics projects.
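Key results like these can be tracked with very little machinery. The Python sketch below grades each key result by how far the current value has moved from its baseline toward its target, clamped to a scale from 0.0 to 1.0 (one common grading convention), and scores the objective as the simple average. All baselines, targets, and current values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class KeyResult:
    name: str
    baseline: float
    target: float
    current: float

    def score(self) -> float:
        """Fraction of the distance from baseline to target, clamped to [0, 1].

        Works for "reduce" metrics too, because numerator and denominator
        are both negative when the target lies below the baseline.
        """
        span = self.target - self.baseline
        if span == 0:
            return 1.0  # a target equal to the baseline is treated as met
        return max(0.0, min(1.0, (self.current - self.baseline) / span))


def objective_score(key_results):
    """Unweighted average of key-result scores."""
    return sum(kr.score() for kr in key_results) / len(key_results)


# Objective: "Revolutionize inventory management to eliminate stockouts"
key_results = [
    KeyResult("Monthly stockout incidents", baseline=40, target=20, current=30),
    KeyResult("Quarterly holding cost ($k)", baseline=500, target=400, current=420),
    KeyResult("Replenishment cycle time (days)", baseline=10, target=6, current=9),
]

for kr in key_results:
    print(f"{kr.name}: {kr.score():.2f}")
print(f"Objective score: {objective_score(key_results):.2f}")
```

An unweighted average is the simplest choice; if one key result matters far more commercially (holding cost, say), a weighted average is an easy extension.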

Whether an organization adopts the Balanced Scorecard, data strategy frameworks, OKRs, or some custom blend of all three, the ultimate goal remains the same: to eliminate that awkward gap between groundbreaking analytics and tangible business value (LaValle et al., 2011). If there is one brutally honest insight for executives, it is that a model is only as good as the organizational will to implement its findings. This means that data science leaders must diligently connect the dots from algorithmic outputs to operational decisions. If an advanced forecast warns of an impending surge in demand, yet nobody in supply chain adjusts production schedules or distribution routes, the entire venture might as well have stayed on a whiteboard in the data science lab.

Choosing the right framework also helps establish transparent accountability, ensuring that every data project is designed to solve a business problem with measurable outcomes. This does not mean every initiative must produce an immediate windfall. Some projects, especially those involving large-scale digital transformation or advanced machine learning algorithms, require longer lead times to demonstrate their full value. However, frameworks like the Balanced Scorecard, data strategy roadmaps, or OKRs provide formal checkpoints where executives can gauge progress, tweak strategies, or, when necessary, pull the plug on fruitless experiments. Having the courage to terminate a failing data project can stop millions of dollars from being thrown after sunk costs, though it can also stir up some tension among analytics enthusiasts who fell in love with the project’s shiny algorithms.

The process of aligning data science with commercial goals is not a one-off event. Like any meaningful corporate initiative, it requires sustained executive sponsorship and an organizational culture that embraces ongoing learning. Leaders must champion the frameworks, ensuring they are integrated into regular decision-making rather than treated as bureaucratic box-checking exercises. Over time, the frameworks themselves may need refinement to reflect new market conditions or disruptive technologies. Perhaps the Balanced Scorecard’s customer dimension becomes paramount after a competitor unveils a loyalty program that’s luring away key accounts, or maybe a data strategy framework must evolve to handle real-time analytics as sensors and IoT devices proliferate. The point is that alignment is dynamic, not static, and demands agility at every level—from the C-suite to front-line data scientists.

The payoff for this careful orchestration can be substantial. When an executive can walk into a meeting and see exactly how a new analytics model is lowering cost-per-acquisition, improving customer retention, or paving the way for a new service line, that is when data science transitions from an isolated academic exercise into a true catalyst for commercial success.

3.3. Identifying and Prioritizing High-Impact Data Initiatives

Many organizations, especially those newly enchanted by the possibilities of data science, face a daunting conundrum: there are simply too many appealing project ideas floating around. Machine learning this, deep learning that, real-time predictive analytics everywhere—each possibility seems more revolutionary than the last. In this swirl of options, leaders often discover that managing and prioritizing analytics initiatives is harder than pulling a rabbit out of a hat. Yet clarity is essential for achieving genuine commercial impact. Choosing the right projects can transform the enterprise’s bottom line, while the wrong ones can sink time and money into an abyss of unfulfilled promises.

One reliable way to sort through this sea of analytics possibilities is the humble cost-benefit analysis (Gartner, 2018). It may lack the glitz of a freshly minted AI prototype, but it remains a highly effective mechanism for measuring the potential returns of a project against its inevitable expenses, such as data acquisition, infrastructure investments, model development, and maintenance. Companies that take cost-benefit analysis seriously compare anticipated revenues or cost savings with the resources needed for full lifecycle execution—from data collection and cleaning to model deployment and ongoing maintenance. Initiatives showing a strong net present value or a manageable payback period typically earn an early place on the to-do list. Some might accuse this method of being too dry, but, frankly, if your CFO is not on board from day one, the project’s viability becomes questionable at best.
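A first-pass cost-benefit screen can be as simple as totaling lifecycle costs and expected benefits over an evaluation horizon. The Python sketch below does that for three hypothetical projects (figures in thousands of dollars) and ranks them by net benefit. It deliberately ignores discounting and uncertainty; a more rigorous comparison would use net present value.

```python
from dataclasses import dataclass


@dataclass
class ProjectEstimate:
    name: str
    data_acquisition: float    # one-off costs
    infrastructure: float
    development: float
    annual_maintenance: float  # recurring cost per year
    annual_benefit: float      # expected revenue uplift or cost savings per year
    years: int                 # evaluation horizon

    def total_cost(self):
        return (self.data_acquisition + self.infrastructure + self.development
                + self.annual_maintenance * self.years)

    def total_benefit(self):
        return self.annual_benefit * self.years

    def net_benefit(self):
        return self.total_benefit() - self.total_cost()

    def benefit_cost_ratio(self):
        return self.total_benefit() / self.total_cost()


def rank(projects):
    """Order projects by undiscounted net benefit, best first."""
    return sorted(projects, key=lambda p: p.net_benefit(), reverse=True)


# Hypothetical estimates over a three-year horizon, in thousands of dollars
projects = [
    ProjectEstimate("Route optimization", 50, 100, 150, 40, 250, 3),
    ProjectEstimate("AR driver training", 20, 300, 400, 80, 200, 3),
    ProjectEstimate("Churn prediction", 30, 50, 100, 30, 120, 3),
]

for p in rank(projects):
    print(f"{p.name}: net {p.net_benefit():,}k, benefit/cost {p.benefit_cost_ratio():.2f}")
```

Including maintenance for the whole horizon matters: a project that looks cheap to build can still lose money once years of upkeep are counted.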

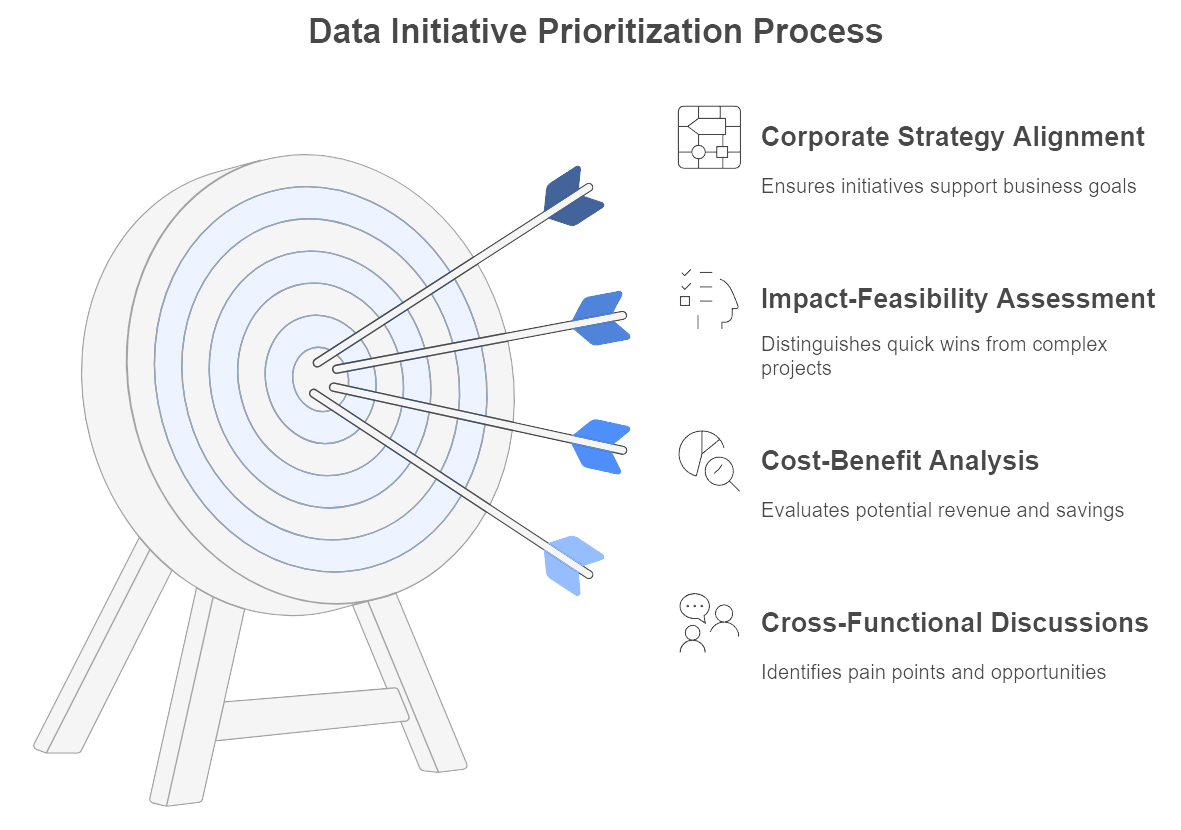

Another effective filtering method is the impact-feasibility matrix. At its core, this matrix forces organizations to ask two glaringly obvious yet frequently overlooked questions: Will this initiative significantly move the needle, and can we actually implement it without requiring a decade of R&D? Projects that rate high on both axes are the ideal “low-hanging fruit”—substantial business impact delivered through relatively straightforward implementation. Meanwhile, those that promise major strategic benefits but score low on feasibility may need to remain in a longer-term innovation pipeline, especially if the technology or data infrastructure is not yet mature.

A logistics firm grappling with spiraling transportation costs might identify route optimization as a high-impact, high-feasibility project. The models can be built using current GPS, shipping, and customer data, and the results—shorter delivery times, lower fuel costs, and improved customer satisfaction—are felt almost immediately. Conversely, a more futuristic concept like augmented reality training for drivers may sound innovative in pitch decks, but the technology stack, user adoption challenges, and potential regulatory hurdles can push this idea into the “not right now” column. By categorizing projects this way, executives avoid the tragedy of scattering limited budgets across half-baked experiments with marginal returns.
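Placing projects in the matrix is mechanical once impact and feasibility have been scored. The Python sketch below assumes scores on a hypothetical 1 to 10 scale with a threshold of 5. The labels echo the text where possible; "minor improvement" is an invented label for the quadrant the text does not name, and all scores are illustrative.

```python
def quadrant(impact, feasibility, threshold=5):
    """Place a project in an impact-feasibility quadrant (scores on an assumed 1-10 scale)."""
    high_impact = impact >= threshold
    high_feasibility = feasibility >= threshold
    if high_impact and high_feasibility:
        return "low-hanging fruit"
    if high_impact:
        return "long-term pipeline"
    if high_feasibility:
        return "minor improvement"
    return "not right now"


# Hypothetical (impact, feasibility) scores for logistics ideas
candidates = {
    "Route optimization": (8, 8),
    "Real-time disruption prediction": (9, 3),
    "Dashboard refresh": (3, 9),
    "AR driver training": (4, 2),
}

for name, (impact, feasibility) in candidates.items():
    print(f"{name}: {quadrant(impact, feasibility)}")
```

A single fixed threshold keeps the logic transparent; in practice the scores themselves, agreed on by business and technical stakeholders together, do most of the work.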

While cost-benefit analysis and the impact-feasibility matrix help quantify a project’s immediate appeal, a bigger question remains: does this analytics initiative clearly support corporate goals and long-term market positioning (International Institute for Analytics, 2018)? The most impressive algorithm on earth will not earn its keep if it fails to enhance customer experience, reduce churn, drive operational savings, or otherwise strengthen the company’s competitive standing. Data projects that do not align with the broader strategy risk ending up as academic curiosities championed by a handful of data scientists. This misalignment breeds frustration at the executive level (“Great, we can predict obscure fluctuations in monthly sales, but how does that help us beat the competition?”) and can lead to skepticism about the entire data science function.

Figure 3.

In many successful enterprises, the process of identifying and prioritizing high-impact data initiatives follows a structured path. First, cross-functional discussions between executives, domain experts, IT, and data science teams uncover the biggest pain points and opportunities. Next, each idea is examined through a cost-benefit lens, informed by real data on potential revenue uplift or cost savings. Projects that pass this stage move on to an impact-feasibility assessment, sorting the quick wins from the more complex, longer-term bets. Finally, executives validate that the surviving shortlist aligns solidly with corporate strategy, ensuring the initiatives will garner the internal support and resources needed to succeed. If that process sounds meticulous, it should be. One of the less advertised secrets in data science is that rushed decisions and poorly vetted project pipelines often result in orphaned prototypes that never see the light of day.
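The staged path just described can be expressed as a sequence of filters. This Python sketch assumes the cross-functional discussion has already produced a list of ideas, each with hypothetical estimates of benefit, cost, impact, feasibility, and the corporate goal it claims to serve. The stage order follows the text; the thresholds, field names, and figures are illustrative assumptions.

```python
def prioritize(ideas, corporate_goals, threshold=5):
    """Screen ideas in order: cost-benefit, then impact-feasibility, then strategy.

    Returns (shortlist, deferred): strategically aligned quick wins, and
    high-impact but low-feasibility ideas parked in the longer-term pipeline.
    """
    # Stage 1: cost-benefit screen drops ideas whose costs exceed their benefits
    viable = [i for i in ideas if i["benefit"] > i["cost"]]

    # Stage 2: impact-feasibility screen separates quick wins from long-term bets
    quick_wins = [i for i in viable
                  if i["impact"] >= threshold and i["feasibility"] >= threshold]
    deferred = [i["name"] for i in viable
                if i["impact"] >= threshold and i["feasibility"] < threshold]

    # Stage 3: executives confirm each quick win serves a stated corporate goal
    shortlist = [i["name"] for i in quick_wins if i["goal"] in corporate_goals]
    return shortlist, deferred


corporate_goals = {"reduce operating costs", "improve customer retention"}
ideas = [
    {"name": "Route optimization", "benefit": 750, "cost": 420,
     "impact": 8, "feasibility": 8, "goal": "reduce operating costs"},
    {"name": "Churn prediction", "benefit": 360, "cost": 270,
     "impact": 6, "feasibility": 7, "goal": "improve customer retention"},
    {"name": "Sales-pattern explorer", "benefit": 200, "cost": 150,
     "impact": 5, "feasibility": 9, "goal": "internal research"},
    {"name": "AR driver training", "benefit": 600, "cost": 960,
     "impact": 4, "feasibility": 2, "goal": "reduce operating costs"},
    {"name": "Disruption prediction", "benefit": 900, "cost": 500,
     "impact": 9, "feasibility": 3, "goal": "reduce operating costs"},
]

shortlist, deferred = prioritize(ideas, corporate_goals)
print("Shortlist:", shortlist)
print("Deferred:", deferred)
```

Note that the sales-pattern explorer survives the first two stages and falls only at the strategic check, the kind of technically sound but commercially aimless project the final stage exists to catch.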

Even after narrowing down the project list, success hinges on more than just picking the right idea. It demands an organizational culture that values data-driven experimentation, fosters collaboration, and does not panic when early prototypes need fine-tuning. It also requires top-down sponsorship to champion these initiatives, secure adequate budgets, and, when the time comes, celebrate meaningful wins that validate the effort. Without such support, even the most promising analytics projects can languish.

Prioritizing high-impact initiatives does not mean ignoring the possibility of bolder, more transformative projects that might not show immediate returns. Some forward-looking ventures—such as advanced machine learning models for new product lines or real-time analytics platforms that predict supply chain disruptions—can yield massive benefits down the road. Organizations that completely neglect these “moonshot” ideas could risk losing out on disruptive innovations that redefine their competitive edge. The trick is to balance the portfolio, making sure that at least some portion of the analytics budget is earmarked for exploring emerging technologies and untested ideas.
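One simple way to protect exploratory work is to reserve a fixed share of the budget before funding ranked projects. The Python sketch below assumes a hypothetical 15% exploration reserve and funds projects greedily in order of a priority score. Greedy selection is easy to explain but is not guaranteed to maximize the total score within the budget; a knapsack-style optimization can do better when that matters.

```python
def allocate(budget, projects, exploration_share=0.15):
    """Reserve an exploration budget, then fund projects by descending priority.

    projects: list of (name, cost, priority_score) tuples.
    Returns (funded_names, exploration_reserve, unspent).
    """
    reserve = budget * exploration_share
    remaining = budget - reserve
    funded = []
    for name, cost, _score in sorted(projects, key=lambda p: p[2], reverse=True):
        if cost <= remaining:  # skip projects that no longer fit
            funded.append(name)
            remaining -= cost
    return funded, reserve, remaining


# Hypothetical annual analytics budget and project costs, in thousands of dollars
projects = [
    ("Route optimization", 420, 9),
    ("Churn prediction", 270, 7),
    ("Demand forecasting", 300, 8),
    ("Dashboard refresh", 100, 4),
]

funded, reserve, unspent = allocate(1000, projects)
print("Funded:", funded)
print(f"Reserved for exploration: {reserve:.0f}, unspent: {unspent:.0f}")
```

With these figures, churn prediction is skipped because it no longer fits once higher-priority projects are funded, while the 150 reserved for exploration is untouched by the ranking.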

When done thoughtfully, these structured evaluation methods create a virtuous cycle: the data science team focuses its energy on initiatives that matter, executives see tangible ROI and become more willing to invest further, and the broader enterprise develops greater confidence in analytics-driven decision-making. By consistently directing resources to high-return projects that align with both short-term needs and long-term ambitions, enterprises solidify their data science capabilities as a core business asset rather than a novelty department.

Whatever prioritization approach an organization uses, from simple scoring to multi-criteria decision-making methods, advanced ROI modeling, or portfolio-level analytics governance, the fundamental takeaway remains the same: investing in the right data science initiatives, and knowing which ones to drop, can mean the difference between minor improvements and transformational industry leadership.

3.4. Quantifying ROI and Business Value of Data Science

The excitement surrounding data science often escalates quickly, propelled by dazzling proof-of-concept demonstrations or enthusiastic claims about artificial intelligence revolutionizing entire industries. Yet, when the boardroom conversation turns to resource allocation, executives inevitably ask the most pragmatic question: “How does this translate to actual financial gains?” No matter how groundbreaking a predictive algorithm appears in a conference room demo, it must prove its worth in terms that CFOs, investors, and shareholders can understand. Fortunately, several well-established financial metrics help transform abstract analytics outcomes—such as improved accuracy or increased fraud detection—into decision-relevant figures.

Net Present Value (NPV) is typically the star of these conversations because it captures the discounted cash flows attributed to a project. If a manufacturing firm invests in a predictive maintenance model designed to reduce unplanned downtime, an NPV calculation can project how much the firm stands to save over several years, adjusted to today’s dollars. When these discounted savings exceed the initial investment, the analytics initiative moves from “interesting prototype” to “solid business proposition.” Of course, this approach requires a degree of disciplined forecasting. Overly optimistic assumptions about technology adoption or cost savings can inflate the model and lead to a rude awakening when real-world results fall short. Nonetheless, NPV remains a powerful baseline for deciding whether an analytics initiative is financially viable in the grand scheme of capital budgeting (Gartner, 2018).
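The NPV calculation itself is short. The Python sketch below discounts a stream of annual savings back to today and subtracts the upfront investment. The predictive maintenance figures (a $500,000 investment, $200,000 in annual savings over four years, a 10% discount rate) are hypothetical, and the second call shows how a less optimistic savings assumption changes the answer.

```python
def npv(rate, initial_investment, cash_flows):
    """Net present value: -I0 + sum over t of CF_t / (1 + rate) ** t.

    Cash flows are assumed to arrive at the end of years 1, 2, ...,
    and the investment is made today (year 0).
    """
    discounted = sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows, start=1))
    return discounted - initial_investment


# Hypothetical predictive maintenance project
base_case = npv(0.10, 500_000, [200_000] * 4)
pessimistic = npv(0.10, 500_000, [150_000] * 4)

print(f"Base case NPV:   {base_case:,.0f}")
print(f"Pessimistic NPV: {pessimistic:,.0f}")
```

Under these assumptions the base case is worth roughly $134,000 in today’s dollars, while trimming annual savings by a quarter turns the NPV negative (about -$24,500). The savings forecast deserves as much scrutiny as the model itself.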

Payback period offers a more straightforward, if somewhat blunt, measure of how quickly the organization recovers its upfront expenditure. Many executives prefer this metric because it answers a simple question: “How long will it take before we see our money back?” Short payback periods are particularly appealing to risk-averse organizations or those facing rapidly shifting market conditions that demand quick wins. The downside is that this method can undervalue longer-term returns or intangible benefits. For instance, an advanced image-recognition system that improves quality control in a factory may have a longer payback period, but once it is fully integrated, it could provide an ongoing stream of cost savings and quality improvements for years beyond the payback threshold.
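Payback period is equally easy to compute. This Python sketch accumulates undiscounted annual cash flows until they cover the investment, interpolating within the recovery year on the assumption that cash arrives evenly through that year. Both projects are hypothetical: a quick-win project and an image-recognition quality control system like the one in the example.

```python
def payback_period(initial_investment, cash_flows):
    """Years until cumulative undiscounted cash flow covers the investment.

    Interpolates within the recovery year (assuming cash arrives evenly
    through that year); returns None if the investment is never recovered.
    """
    cumulative = 0.0
    for year, cf in enumerate(cash_flows, start=1):
        if cf > 0 and cumulative + cf >= initial_investment:
            return (year - 1) + (initial_investment - cumulative) / cf
        cumulative += cf
    return None


quick_win = payback_period(450_000, [300_000, 300_000])
quality_control = payback_period(600_000, [100_000, 200_000, 250_000, 250_000, 250_000])

print(f"Quick win: {quick_win:.1f} years")              # Quick win: 1.5 years
print(f"Quality control: {quality_control:.1f} years")  # Quality control: 3.2 years
```

The quality control system takes more than twice as long to pay back, yet over its five years it returns $1.05 million on a $600,000 outlay, versus $600,000 on $450,000 for the quick win. Payback period alone cannot see that difference.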

Economic Value Added (EVA), meanwhile, reminds organizations that not all returns are created equal. EVA subtracts a capital charge (invested capital multiplied by the firm’s cost of capital) from net operating profit after tax, highlighting the extent to which an analytics initiative earns more than the firm’s cost of capital (Stewart, 2013). Consider a data-driven marketing campaign that boosts customer acquisition. Even if the campaign increases revenues, it does not create “economic value” if the capital charge tied to the project (reflecting borrowed funds or the opportunity cost of internal budgets) exceeds the incremental after-tax operating profit it generates. EVA shines a harsh spotlight on projects that look superficially profitable but deliver subpar returns relative to the risks taken or the alternative uses of that capital. It is a sobering metric, to be sure, but one that ensures data initiatives are evaluated within the organization’s broader financial context.
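A quick EVA check makes the marketing example concrete. The Python sketch below computes net operating profit after tax (NOPAT) and subtracts the capital charge, using hypothetical figures: the campaign adds $300,000 of pre-tax operating profit, the tax rate is 25%, and it ties up $2.5 million of capital at a 10% cost of capital.

```python
def economic_value_added(operating_profit, tax_rate, invested_capital, cost_of_capital):
    """EVA = NOPAT - capital charge, where capital charge = invested capital * cost of capital."""
    nopat = operating_profit * (1 - tax_rate)
    capital_charge = invested_capital * cost_of_capital
    return nopat - capital_charge


# Hypothetical data-driven marketing campaign
campaign_eva = economic_value_added(300_000, 0.25, 2_500_000, 0.10)
# The same profit achieved with a leaner footprint that ties up less capital
lean_eva = economic_value_added(300_000, 0.25, 1_500_000, 0.10)

print(f"Campaign EVA: {campaign_eva:,.0f}")   # Campaign EVA: -25,000
print(f"Lean variant EVA: {lean_eva:,.0f}")  # Lean variant EVA: 75,000
```

The campaign is profitable on paper, yet under these assumptions it destroys value because its NOPAT ($225,000) falls short of the $250,000 capital charge. Delivering the same profit with less capital tied up turns EVA positive.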

Despite their differences, all these financial metrics share a common goal: linking technical results, such as improved forecast accuracy, to concrete performance targets that matter at the executive level. A forecasting engine that predicts customer demand with 95% accuracy is impressive, but if nobody can articulate how that translates into lower inventory costs, fewer stockouts, or accelerated delivery times, the project’s financial rationale remains murky. By contrast, successful enterprises establish clear cause-and-effect relationships between analytics outcomes and revenue, cost savings, or capital efficiency. Leading logistics companies like UPS highlight this principle by connecting route optimization algorithms directly to annual reductions in miles driven, fuel consumption, and carbon emissions (Yoo, 2017). The payoff is more than just a line item in the sustainability report—it is a verifiable, quantifiable benefit that resonates with both customers and investors.
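One standard way to translate forecast accuracy into money is through safety stock. Under the textbook simplification that daily forecast errors are independent and normally distributed and that lead time is constant, safety stock is approximately z times the daily error standard deviation times the square root of the lead time, where z is set by the target service level. The Python sketch below applies that formula to hypothetical figures: 2,000 SKUs, a nine-day lead time, a 95% service level, a $20 unit cost, a 25% annual holding rate, and a new model that cuts the daily forecast error standard deviation from 40 to 25 units.

```python
from math import sqrt
from statistics import NormalDist


def safety_stock(error_std_per_day, lead_time_days, service_level):
    """Textbook safety stock: z * sigma * sqrt(lead time), assuming i.i.d. normal daily errors."""
    z = NormalDist().inv_cdf(service_level)
    return z * error_std_per_day * sqrt(lead_time_days)


def annual_holding_savings(old_std, new_std, lead_time_days, service_level,
                           unit_cost, holding_rate, sku_count):
    """Yearly cost of carrying the safety stock that better forecasts make unnecessary."""
    reduction = (safety_stock(old_std, lead_time_days, service_level)
                 - safety_stock(new_std, lead_time_days, service_level))
    return reduction * unit_cost * holding_rate * sku_count


savings = annual_holding_savings(old_std=40, new_std=25, lead_time_days=9,
                                 service_level=0.95, unit_cost=20,
                                 holding_rate=0.25, sku_count=2_000)
print(f"Estimated annual holding-cost savings: ${savings:,.0f}")
```

That comes to roughly $740,000 a year under these assumptions. The same accuracy gain could be worth far less for a business with cheap, fast-moving items, which is why the translation has to use the organization’s own numbers.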

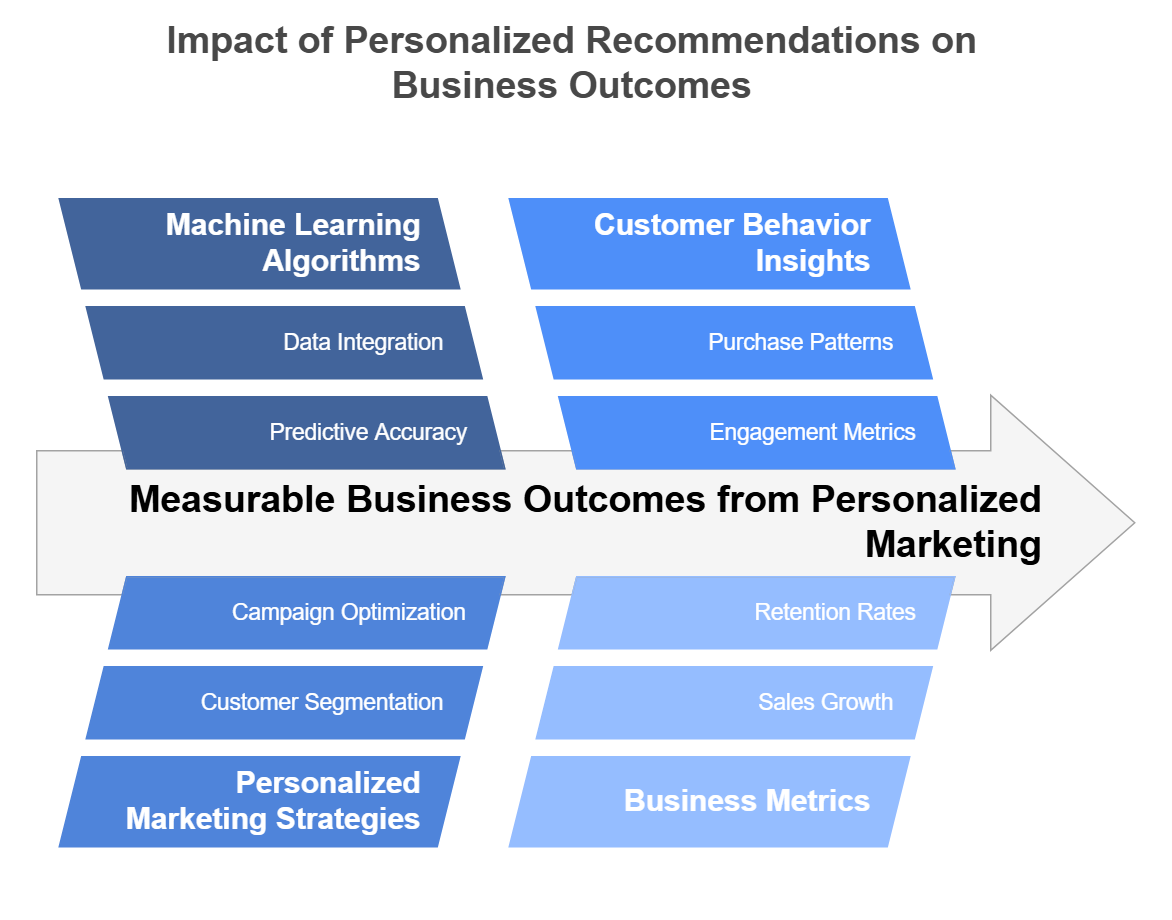

Figure 4: Illustration of how personalized marketing strategies, powered by data integration and predictive accuracy, lead to improved business metrics and customer engagement. (Image by Napkin).

The same logic applies to retail giants leveraging recommendation systems. These companies do not merely celebrate the fact that a machine learning algorithm can guess which pair of shoes a customer might fancy next. They translate these predictions into measurable sales lifts, larger basket sizes, or increased customer retention. By explicitly attributing a percentage of incremental revenue to personalized marketing, they build a business case that connects data science investments to material, bottom-line growth (McAlone, 2016). For executives, this measurable link justifies further analytics spending and confirms that data science is not just a technologist’s sandbox but a critical driver of competitive advantage.
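Attribution of this kind usually rests on a controlled comparison. The Python sketch below assumes a hypothetical randomized holdout: 90,000 customers see recommendations and 10,000 comparable customers do not. The difference in revenue per customer, scaled up to the treated group, estimates the incremental revenue. A real analysis would also report a confidence interval before presenting the figure to executives.

```python
def incremental_revenue(treated_revenue, treated_customers,
                        holdout_revenue, holdout_customers):
    """Estimate revenue attributable to recommendations from a randomized holdout.

    Returns (incremental_revenue, share_of_treated_revenue).
    """
    lift_per_customer = (treated_revenue / treated_customers
                         - holdout_revenue / holdout_customers)
    incremental = lift_per_customer * treated_customers
    return incremental, incremental / treated_revenue


# Hypothetical results: $60 per treated customer versus $55 per holdout customer
incremental, share = incremental_revenue(5_400_000, 90_000, 550_000, 10_000)
print(f"Incremental revenue: ${incremental:,.0f} ({share:.1%} of treated revenue)")
```

Random assignment is what makes this more than a correlation: without a comparable holdout, customers who engage with recommendations may simply be the ones who would have spent more anyway.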

Admittedly, not every benefit fits neatly into a spreadsheet. Factors like brand enhancement, risk mitigation, and the customer goodwill generated by faster service or more personalized recommendations defy easy measurement. They might show up indirectly as reduced churn or improved Net Promoter Scores—metrics that suggest an organization is building long-term, intangible value. Sophisticated firms blend both quantitative and qualitative assessments into a cohesive value narrative. For instance, a bank investing in real-time fraud detection may reduce fraud-related losses (a direct, measurable payoff) while also bolstering customer trust (a subtler, qualitative outcome). Over time, both forms of value reinforce each other: lower fraud losses free up capital for further innovation, while stronger brand trust encourages customers to try new products and services, driving even greater returns.

The challenge for many data science initiatives, particularly advanced ones involving machine learning or deep learning, is that the development and validation phases can be lengthy. Such projects demand robust data engineering, substantial computing resources, and often a fair amount of trial and error. Early prototypes may not exhibit immediate returns, triggering impatience among stakeholders. In these cases, bridging the expectation gap requires clear communication about milestones, potential “breakthrough points,” and possible pitfalls. If these factors are transparently integrated into an ROI analysis, executives can make more informed decisions about whether to proceed, pivot, or hold off until the technology or data infrastructure reaches a more mature stage.

In the end, quantifying the ROI and business value of data science is both a financial exercise and a storytelling art. Hard metrics like net present value (NPV), payback period, and economic value added (EVA) offer compelling anchors for any executive discussion, but the broader narrative must also capture how data science can drive strategic differentiation, support corporate sustainability goals, or help open entirely new lines of business. Organizations that handle this process adeptly gain a decisive edge: they can invest confidently in analytics initiatives, secure stakeholder buy-in more easily, and position data science as a continuous, value-generating engine rather than a series of flashy yet unproven experiments. More advanced approaches, such as real options valuation for high-risk analytics projects or specialized metrics for AI-driven transformations, can extend this toolkit where the stakes warrant it.
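As a worked illustration of the two most common anchors, NPV discounts each period's cash flow back to today (NPV = sum over t of CF_t / (1 + r)^t, with t = 0 for the upfront investment), while the payback period counts how long cumulative cash flows take to recover that investment. The Python sketch below assumes a hypothetical analytics project costing 500,000 upfront and returning 150,000, 200,000, 250,000, and 250,000 over four years, discounted at an assumed 10% rate; none of these figures come from a real project.

```python
from typing import Optional


def npv(rate: float, cash_flows: list[float]) -> float:
    """Net present value; cash_flows[0] is the time-0 flow (typically the negative investment)."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def payback_period(cash_flows: list[float]) -> Optional[float]:
    """Years until cumulative undiscounted cash flow turns non-negative.

    Interpolates linearly within the recovery year; returns None if the
    investment is never recovered within the horizon.
    """
    cumulative = cash_flows[0]
    if cumulative >= 0:
        return 0.0
    for year, cf in enumerate(cash_flows[1:], start=1):
        if cumulative + cf >= 0:
            # Fraction of this year's inflow needed to cover the remaining deficit.
            return (year - 1) + (-cumulative) / cf
        cumulative += cf
    return None


# Hypothetical project: 500k upfront, then four years of inflows.
flows = [-500_000, 150_000, 200_000, 250_000, 250_000]
project_npv = npv(0.10, flows)
project_payback = payback_period(flows)
print(f"NPV at 10%: {project_npv:,.0f}; payback: {project_payback:.1f} years")
```

Under these assumptions the NPV is roughly 160,235, so the project clears a 10% hurdle rate. The payback period is 2.6 years: after two years 350,000 has been recovered, and the remaining 150,000 deficit is covered 60% of the way into year three.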

3.5. Managing Risks and Ensuring Governance in Data Initiatives

Alongside opportunities for improved performance and profitability, data science carries its fair share of liabilities. Although it can fuel impressive insights, it can also invite regulatory headaches, reputational landmines, and ethical dilemmas if not managed with deliberate rigor. The General Data Protection Regulation (GDPR) in Europe, for example, imposes strict standards on data collection, storage, and usage, leaving organizations on the hook for hefty fines if they stray from the straight and narrow (Aon Professional Services, 2020). In U.S. healthcare, regulations such as HIPAA demand an even higher level of vigilance, requiring robust protocols to safeguard personal health information. While some executives dismiss privacy compliance as a “legal team problem,” the fact remains that one well-publicized data breach or HIPAA violation can obliterate trust more quickly than any marketing campaign can build it.

For many businesses, navigating the compliance landscape is not just about avoiding fines but about defending a carefully cultivated brand image. No one wants to become the next headline about customer data leaks or questionable use of sensitive information. In financial services, poorly managed models that inadvertently allow credit discrimination can attract lawsuits and scathing media coverage. In consumer technology, even a minor misstep in how user data is gathered can ignite social media outrage. These scenarios illustrate why compliance must be baked into data initiatives from the start. Establishing a robust governance framework that aligns with GDPR, HIPAA, or similar regulations may seem like a time-consuming chore, but executives soon discover that prevention is far more cost-effective than cleaning up after a compliance disaster.

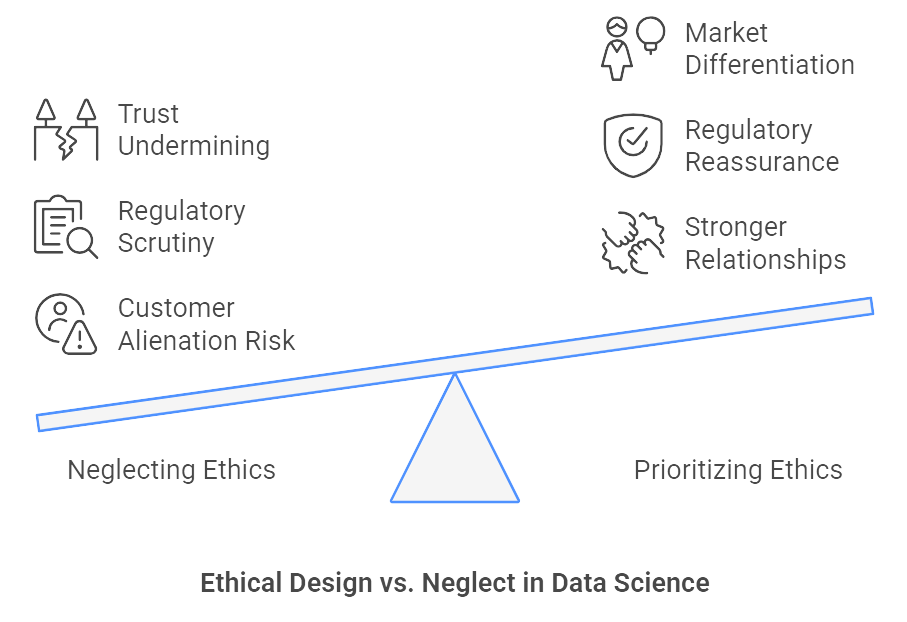

Figure 5: Illustration of how making ethical data science a priority builds trust, ensures compliance, and achieves sustainable success. (Image by Napkin).

Ethical challenges extend beyond strict legal requirements. Algorithmic fairness and bias have become hot-button issues in multiple sectors, from hiring to medical diagnostics (Dastin, 2018). Business leaders can no longer hide behind “the algorithm did it” when data-driven decisions cause unintended harm or discrimination. If a machine learning model systematically disadvantages certain demographic groups in credit approvals, executives will be expected to explain—and correct—the model’s behavior. Data science teams that neglect fairness and transparency might push out an elegantly coded model only to discover that it alienates customers, draws regulatory scrutiny, or undermines public trust. On the flip side, those that prioritize ethical design can forge stronger customer relationships, reassure regulators, and in some cases, even create a market differentiator that signals integrity and social responsibility.
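One simple first-pass check for the kind of credit-approval disparity described above compares approval rates across demographic groups. The Python sketch below computes each group's approval rate and the ratio of the lowest to the highest. It flags ratios below 0.8, a threshold borrowed from the “four-fifths rule” in U.S. employment-selection guidelines and used here purely as an illustrative screening heuristic, not as a legal standard for credit. The group labels and decision counts are hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())


# Hypothetical model decisions for two demographic groups, A and B.
decisions = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 42 + [("B", False)] * 58
)
rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # illustrative screening threshold
print(rates, f"ratio={ratio:.2f}", "REVIEW" if needs_review else "ok")
```

Group B is approved 42% of the time versus 60% for group A, a ratio of 0.70, so this model would be escalated for closer review. A low ratio does not by itself prove discrimination, and a passing ratio does not prove fairness; it simply tells the governance team where to look.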

In response to these pressures, many organizations are adopting formal governance structures to keep data projects in check. Chief Data Officers, once a rarity, now hold executive positions in large corporations, tasked with ensuring that data initiatives align with both regulatory mandates and business strategy. AI ethics committees have emerged as well, bringing together legal, technical, and domain experts to scrutinize new analytics projects before they go live. While some might dismiss these bodies as red tape, in practice they act as invaluable gatekeepers. They demand evidence of compliance, model transparency, and accountability measures, essentially telling the data science team: “Show us you’ve done your homework, or don’t even think of deploying that model.” Such oversight helps protect the enterprise from legal and reputational pitfalls, while also demonstrating to customers and partners that the organization is serious about responsible innovation.

Beyond formalized roles, strong governance culture relies on clear guidelines and enforcement mechanisms. Role-based access controls, for instance, ensure that only authorized personnel can view or manipulate sensitive data, which remains one of the simplest yet most effective ways to prevent both accidental and malicious misuse. Rigorous model validation processes serve as another cornerstone: machine learning solutions are tested and retested against real-world scenarios to verify accuracy, fairness, and reliability. Audits—yes, those dreaded events involving third-party scrutiny—can be surprisingly helpful in confirming that the data pipeline and models adhere to regulations and that any potential biases are caught early. Although compliance discussions may lack the excitement of unveiling the latest neural network architecture, they play a decisive role in preserving operational resilience.
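Role-based access control can be sketched in a few lines: each role maps to an explicit set of permissions, and any request not covered by that set is denied. The Python example below is a minimal illustration; the role names and permission categories are hypothetical, and a real deployment would enforce these rules in the data platform or identity provider rather than in application code.

```python
from enum import Enum, auto


class Permission(Enum):
    READ_AGGREGATES = auto()  # summary tables with no personal identifiers
    READ_PII = auto()         # records containing personally identifiable information
    DEPLOY_MODELS = auto()    # push a model to production


# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {Permission.READ_AGGREGATES},
    "data_scientist": {Permission.READ_AGGREGATES, Permission.DEPLOY_MODELS},
    "privacy_officer": {Permission.READ_AGGREGATES, Permission.READ_PII},
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles receive an empty permission set."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("analyst", Permission.READ_PII))            # False
print(is_allowed("privacy_officer", Permission.READ_PII))    # True
print(is_allowed("contractor", Permission.READ_AGGREGATES))  # False
```

The deny-by-default rule matters most: a new or misspelled role gains no access until someone deliberately grants it.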

For all the talk of processes, frameworks, and oversight committees, governance ultimately boils down to people and culture. Data scientists, domain experts, and executives must view compliance and ethics not as a final box-ticking exercise but as fundamental to the integrity of any data initiative. When engineers and analysts are forced into “quick fixes” to meet deadlines, corners inevitably get cut, and that is often when governance collapses under pressure. Conversely, if leadership consistently supports and rewards best practices in risk management—rather than just punishing mistakes post-hoc—then a culture of proactive accountability emerges. Transparency becomes the norm, and ethical considerations are addressed at each stage of the model lifecycle, from data collection to real-time deployment.

A strong governance culture does more than just minimize the likelihood of fines, lawsuits, or PR nightmares. It aligns data science projects with the organization’s core strategy and values, forging a sense of trust that can be just as important to business success as new customer acquisition. Firms that demonstrate robust controls, transparent practices, and ethical stewardship of data build stronger customer loyalty, attract top-tier partnerships, and might even influence regulatory direction rather than simply reacting to it. This dynamic is particularly evident in sectors like healthcare, where data security and patient privacy are paramount, but it increasingly applies to retail, finance, and every other domain using data to automate critical decisions.

By establishing robust governance policies, role-based access controls, and rigorous validation procedures, leaders safeguard both the immediate value of data initiatives and their long-term viability (Bean, 2020). Rather than stifling innovation, these measures help ensure that new analytics programs launch with a firm foundation of trust and accountability. When data initiatives respect privacy, consider ethical implications, and comply with relevant regulations, organizations essentially earn a “social license” to innovate. In an era where public scrutiny can be unforgiving, that license can prove more valuable than any sophisticated machine learning model—because it secures the permission to continue pushing the boundaries of data science without compromising the trust of customers, regulators, or the broader market.

3.6. Industry Case Studies: Data Science Success in Commercial Sectors

Real-world examples offer vivid proof of how meticulously aligned data science projects can reshape entire business segments, driving substantial value in ways that textbook theories often fail to capture. Companies across finance, retail, healthcare, FMCG, and logistics have demonstrated that by connecting the right analytics tools to urgent commercial priorities, data science ceases to be an isolated technical function and becomes a strategic growth engine.

One of the most dramatic displays of data science in action is the finance sector’s escalating battle against fraud. Visa’s Advanced Authorization, for instance, relies on real-time analytics to evaluate risk scores at the exact moment a purchase occurs, detecting and blocking billions in fraudulent transactions annually (Visa, 2020). While the media tends to focus on the staggering sums saved, it is equally important to understand the behind-the-scenes process. First, a vast trove of transactional data from around the globe is collected and fed into machine learning models that assess thousands of risk indicators, such as transaction history, merchant type, and geographical anomalies. These models often utilize sophisticated techniques like gradient boosting or deep neural networks to pinpoint suspicious patterns in milliseconds. Once a high-risk transaction is flagged, the system either requests additional verification or outright denies the charge, preventing costly losses and preserving consumer trust. This real-time feedback loop is no small feat, requiring robust data infrastructure, cross-functional collaboration between payment networks and banks, and a relentless commitment to model retraining as new fraud tactics emerge. The result is a finance ecosystem better equipped to handle risk, freeing executives to focus on innovation rather than perpetual damage control.
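The final decision step of such a pipeline can be illustrated with a toy scoring function. The Python sketch below is not Visa's model: it combines a few hypothetical risk indicators through a logistic function with hand-set weights, then maps the score to approve, request verification, or decline. In a production system the weights would be learned from labeled transactions (for example with gradient boosting), and the feature set, weights, and thresholds here are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float             # purchase amount
    avg_amount: float         # cardholder's historical average purchase
    foreign_country: bool     # merchant located outside the cardholder's home country
    high_risk_merchant: bool  # merchant category flagged as high risk


# Hypothetical hand-set weights; a real system would learn these from labeled data.
WEIGHTS = {"amount_ratio": 0.8, "foreign_country": 1.5, "high_risk_merchant": 1.2}
BIAS = -4.0


def risk_score(tx: Transaction) -> float:
    """Logistic risk score in (0, 1); higher means more likely fraudulent."""
    amount_ratio = tx.amount / max(tx.avg_amount, 1.0)  # how unusual the amount is
    z = (
        BIAS
        + WEIGHTS["amount_ratio"] * amount_ratio
        + WEIGHTS["foreign_country"] * tx.foreign_country
        + WEIGHTS["high_risk_merchant"] * tx.high_risk_merchant
    )
    return 1.0 / (1.0 + math.exp(-z))


def decide(tx: Transaction, verify_at: float = 0.5, decline_at: float = 0.9) -> str:
    """Map the score to an action: approve, step-up verification, or decline."""
    score = risk_score(tx)
    if score >= decline_at:
        return "decline"
    if score >= verify_at:
        return "verify"
    return "approve"


print(decide(Transaction(50, 60, False, False)))  # approve
print(decide(Transaction(240, 60, True, False)))  # verify
print(decide(Transaction(900, 60, True, True)))   # decline
```

The middle band is the design choice worth noting: rather than a binary block, ambiguous cases trigger extra verification, trading a little customer friction for fewer false declines.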

In the world of retail, personalized recommendations have proven to be not just a clever gimmick but a bona fide revenue driver. Amazon’s recommendation engine stands out as a prime example, with more than 30% of its sales attributed to the platform’s machine learning–powered product suggestions (MacKenzie et al., 2013). The success of this engine is underpinned by a step-by-step pipeline. First, Amazon collects massive amounts of customer behavior data, from browsing histories to past purchases. Next, collaborative filtering algorithms identify items and customers with similar purchase patterns, while content-based methods match product attributes to each shopper’s demonstrated preferences; together they generate predictions about what a shopper might want next. The final piece of the puzzle is the deployment phase, where these recommendations appear on the website or app in real time, nudging customers toward items they might not otherwise discover. The deceptively simple premise of “people who bought this also bought that” conceals a complex system of continuous model training, A/B testing, and iterative improvement. By perpetually refining its algorithms, Amazon keeps customers engaged and revenue figures climbing, demonstrating how data-driven personalization can reshape consumer experiences and, unsurprisingly, the retailer’s bottom line.
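The “people who bought this also bought that” idea can be illustrated with simple co-purchase counting, one basic form of item-to-item collaborative filtering. The Python sketch below is a teaching example, not Amazon's production system: it counts how often each pair of items appears in the same order and recommends the most frequent companions. The item names and orders are hypothetical, and real systems typically normalize these counts (for instance with cosine similarity) so that universally popular items do not dominate every list.

```python
from collections import Counter, defaultdict
from itertools import combinations


def co_purchase_counts(orders):
    """orders: iterable of sets of item IDs -> {item: Counter of items bought with it}."""
    counts = defaultdict(Counter)
    for basket in orders:
        for a, b in combinations(sorted(basket), 2):
            counts[a][b] += 1
            counts[b][a] += 1
    return counts


def also_bought(counts, item, k=3):
    """Top-k items most often bought alongside `item`; ties broken alphabetically."""
    ranked = sorted(counts.get(item, Counter()).items(), key=lambda kv: (-kv[1], kv[0]))
    return [other for other, _ in ranked[:k]]


# Hypothetical order history.
orders = [
    {"running_shoes", "socks", "water_bottle"},
    {"running_shoes", "socks"},
    {"running_shoes", "headband"},
    {"socks", "sandals"},
]
counts = co_purchase_counts(orders)
print(also_bought(counts, "running_shoes", k=2))  # ['socks', 'headband']
```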

Healthcare providers such as Kaiser Permanente are leveraging data science to tackle some of the most pressing medical challenges, from chronic disease management to hospital readmission rates (Davenport and Harris, 2007). The process often starts with electronic health records, patient demographics, and claims data, which are then combined with advanced analytics techniques to predict which patients are at highest risk of complications. This forewarning enables proactive interventions—physicians can schedule follow-up appointments, adjust medication regimens, or even arrange home health visits. While the typical business audience might roll their eyes at yet another “predictive model,” the real difference here is the cross-functional teamwork. Data scientists collaborate with clinicians to refine variables, ensuring they capture meaningful signals rather than burying doctors in extraneous statistics. IT teams step in to secure these sensitive data sets and ensure compliance with frameworks like HIPAA. Executives, in turn, champion the initiative by allocating resources and weaving predictive insights into operational routines. The net effect is reduced readmissions, a decline in preventable complications, and substantial cost savings for both providers and payers. More importantly, patients benefit from a healthcare system that responds to risks before they escalate, reinforcing the notion that data science can simultaneously elevate quality of care and financial stability.
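A predictive model only pays off once its scores are turned into action. The Python sketch below assumes an upstream model has already estimated each patient's readmission risk (the patient IDs and probabilities are hypothetical) and shows one simple way to turn those scores into a daily outreach list: rank patients by risk, drop anyone below a minimum threshold, and cap the list at the care team's capacity.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    patient_id: str
    readmission_risk: float  # predicted probability from an upstream model (assumed)


def outreach_list(patients, capacity, min_risk=0.2):
    """IDs of the highest-risk patients, up to care-team capacity,
    excluding anyone whose predicted risk falls below `min_risk`."""
    eligible = [p for p in patients if p.readmission_risk >= min_risk]
    eligible.sort(key=lambda p: p.readmission_risk, reverse=True)
    return [p.patient_id for p in eligible[:capacity]]


# Hypothetical scored patients.
patients = [
    Patient("P1", 0.35),
    Patient("P2", 0.10),
    Patient("P3", 0.62),
    Patient("P4", 0.28),
    Patient("P5", 0.22),
]
print(outreach_list(patients, capacity=3))  # ['P3', 'P1', 'P4']
```

The capacity cap reflects the cross-functional point above: clinicians, not the model, decide how many follow-up calls or home visits the team can realistically absorb, and the threshold keeps low-risk patients from crowding the list on quiet days.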

Fast-Moving Consumer Goods (FMCG) firms operate in a high-stakes environment where timing is everything and margins can be razor-thin. AI-powered demand forecasting tools enable these companies to more accurately predict future product requirements, minimizing stockouts while ensuring they do not drown in excess inventory. The underlying pipeline often begins with historical sales data, promotional calendars, and market signals, sometimes enriched by external factors like weather forecasts and social media sentiment. Machine learning algorithms sift through these variables to pinpoint optimal order quantities and distribution strategies. Executives marvel at the cost savings—fewer emergency shipments, less perishable waste, and more precise production runs—while supply chain managers appreciate a more responsive approach to demand variability. In a twist of corporate humor, data scientists might find themselves becoming the unexpected heroes of efficiency, unearthing supply chain inefficiencies that have quietly burned money for years. When these insights are embraced companywide, the result is a leaner, more profitable operation that satisfies customer demands with impressive consistency.
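A stripped-down version of this forecast-to-order logic is sketched below in Python. It uses simple exponential smoothing, a classic forecasting baseline rather than the machine learning models described above, plus a textbook safety-stock term: z times the demand standard deviation times the square root of the lead time, where z = 1.65 corresponds to roughly a 95% service level under a normal-demand assumption. The sales history, smoothing constant, and stock levels are hypothetical.

```python
import math
from statistics import stdev


def ses_forecast(history, alpha=0.3):
    """Simple exponential smoothing: forecast of next period's demand."""
    level = history[0]
    for demand in history[1:]:
        # Blend the newest observation with the running level.
        level = alpha * demand + (1 - alpha) * level
    return level


def order_quantity(history, on_hand, lead_time_periods, z=1.65, alpha=0.3):
    """Units to order so stock covers forecast demand over the lead time plus safety stock."""
    forecast = ses_forecast(history, alpha)
    sigma = stdev(history)  # crude variability estimate; forecast errors are better in practice
    safety_stock = z * sigma * math.sqrt(lead_time_periods)
    target = forecast * lead_time_periods + safety_stock
    return max(0, math.ceil(target - on_hand))


# Hypothetical weekly unit sales for one product.
weekly_sales = [100, 120, 110, 130, 125]
print(round(ses_forecast(weekly_sales), 3))                             # 117.328
print(order_quantity(weekly_sales, on_hand=150, lead_time_periods=2))  # 113
```

With these numbers the smoothed forecast is about 117.3 units a week; covering a two-week lead time plus about 28 units of safety stock, against 150 units on hand, yields an order of 113 units.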

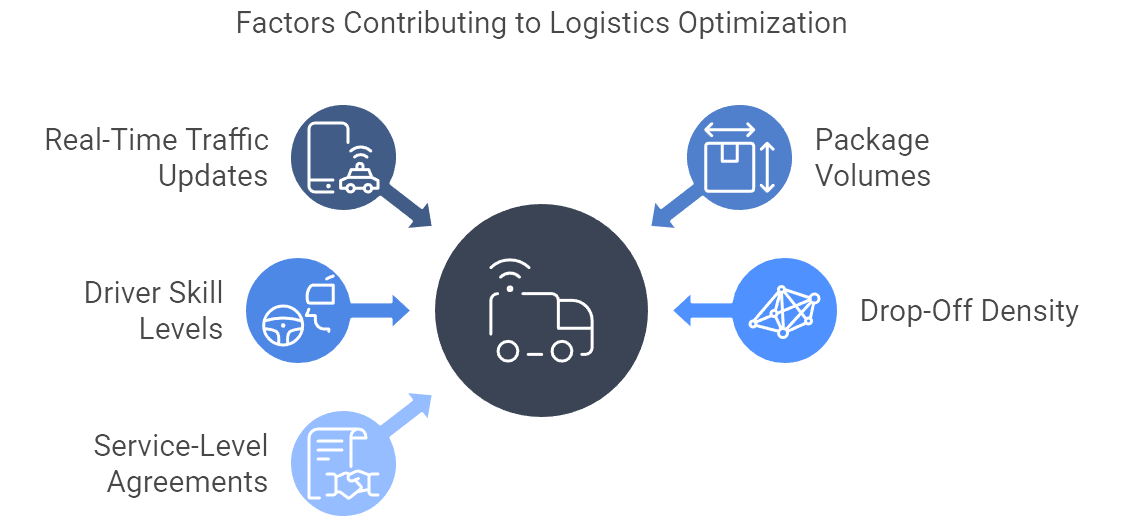

Figure 6: Illustration of the interconnectedness of elements like driver skill levels, package volumes, and real-time traffic updates, all contributing to streamlined and efficient logistics operations. (Image by Napkin).

Logistics giants like UPS thrive by optimizing routes in excruciating detail, trimming millions of miles a year from their delivery routes and saving hundreds of millions of dollars in annual costs (Yoo, 2017). It may sound straightforward, but beneath the surface lies a sprawling labyrinth of data streams, including real-time traffic updates, package volumes, driver skill levels, and drop-off density in urban areas. Data scientists develop route optimization algorithms that factor in these dynamic inputs, continuously adjusting schedules and directions to minimize fuel consumption, driver hours, and vehicle wear and tear. The system also must weigh service-level agreements, ensuring packages arrive on time or earlier if possible. Successful implementation depends on cross-departmental buy-in—from operations managers who trust the new routing suggestions, to finance teams verifying cost savings, to IT experts who integrate mobile devices for drivers so real-time updates can occur. Eventually, the analytics effort manifests in visible outcomes: fewer vehicles on the road, shorter delivery windows, happier customers, and a company that can boast both cost leadership and environmental responsibility.
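At its core, route optimization is a variant of the classic traveling salesman problem. The Python sketch below shows the simplest greedy heuristic, nearest neighbor, which always drives to the closest unvisited stop. It is a teaching illustration under stated assumptions (straight-line distances and no traffic, time windows, or driver constraints), not UPS's method; production routing systems layer those real-world constraints on top of far stronger optimization techniques. The stop coordinates are hypothetical.

```python
import math


def nearest_neighbor_route(points, start=0):
    """Greedy tour: from the current stop, always go to the closest unvisited stop."""
    unvisited = set(range(len(points))) - {start}
    route = [start]
    while unvisited:
        here = points[route[-1]]
        # Break distance ties by stop index so results are deterministic.
        nxt = min(unvisited, key=lambda j: (math.dist(here, points[j]), j))
        route.append(nxt)
        unvisited.remove(nxt)
    return route


def route_length(points, route):
    """Total straight-line length of the tour, including the return to the depot."""
    return sum(
        math.dist(points[route[i]], points[route[(i + 1) % len(route)]])
        for i in range(len(route))
    )


# Hypothetical depot (index 0) and four delivery stops on a grid.
stops = [(0, 0), (5, 0), (1, 0), (5, 1), (1, 1)]
route = nearest_neighbor_route(stops)
print(route, route_length(stops, route))  # [0, 2, 4, 3, 1] 12.0
```

Greedy tours are fast but can be noticeably longer than optimal; that gap, multiplied across thousands of vehicles and many working days, is why better optimization translates into the kind of savings described above.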

The recurring lesson from these industry case studies is that data science flourishes when it is tightly woven into a company’s strategic fabric. Whether the target is risk mitigation, operational excellence, or personalized customer engagement, the analytics solutions must address a pressing business need rather than remain an isolated “lab experiment.” Each success story underscores how data collection, cleaning, exploratory analysis, model building, evaluation, deployment, and maintenance feed into a virtuous cycle of continuous improvement. Moreover, behind every triumphant case lies a story of organizational commitment: cross-functional teams, supportive leadership, and a corporate culture that values evidence-based decision-making. By matching cutting-edge analytics tools to the specific demands of their industries, companies move beyond incremental gains to secure substantive, and sometimes transformative, competitive advantages. These outcomes remind executives everywhere that data science, when wielded responsibly and strategically, can be a game-changer for both short-term performance and long-term market leadership.

3.7. Driving Adoption and Stakeholder Engagement

It is one of those timeless truths: no matter how mind-blowingly sophisticated the model architecture, a data science project that lives in a vacuum of executive apathy or departmental silos is destined for irrelevance. Real impact demands that people, across all levels of the organization, actually embrace the insights that analytics can deliver. This is where the rubber meets the road: driving adoption among decision-makers, securing buy-in from the C-suite, and ensuring that analytics outputs do not end up as dusty reports buried in someone’s inbox. Companies that successfully foster data-driven cultures, where evidence-based thinking is preferred over subjective hunches, see dramatically better outcomes than those that rely on pure gut instinct. And if there is any doubt, a quick glance at modern business heroes—from retail behemoths to fintech disruptors—makes it clear that strategic analytics adoption has become a powerful competitive weapon.

A top-down endorsement of data-driven decision-making can spark dramatic changes, but it also demands a subtle shift in organizational culture. Leaders who treat analytics as a “nice-to-have” side project will see tepid engagement at best. In contrast, those who weave evidence-based thinking into their corporate DNA send a clear message that data matters. This cultural pivot often begins with evangelizing tangible successes: perhaps a proof-of-concept forecast that slashed inventory costs or a targeted marketing campaign that boosted conversion rates by double digits. These early wins work like catalysts, transforming skeptical audiences into cautious believers. Over time, they feed a virtuous cycle where teams clamor for more analytics support. When executives themselves exemplify this data-driven mindset—asking probing questions about assumptions, applauding thorough analyses, and basing strategic initiatives on quantifiable evidence—employees quickly realize that presenting decisions backed by robust metrics is not a chore but an expectation.

Nothing accelerates adoption like a visible mandate from the top. When the CEO publicly touts the benefits of predictive modeling or invites the Head of Data Science to present at board meetings, the rest of the organization sits up straight and starts paying attention (Bean, 2020). The same holds true for tangible resource allocation. Data teams often require specialized tools, larger budgets for cloud computing, or collaboration from multiple departments. These requests can spark territorial debates if not accompanied by executive-level backing. Once leadership sets a firm strategic priority—“We will use data science to drive operational efficiency and revenue growth”—the path for data initiatives smooths considerably. Even skeptics in finance or marketing become more open-minded when they see that the CFO and CMO are aligned on the analytical roadmap.

Often overlooked yet critical to the success of analytics adoption is the middle-management layer. Managers juggling day-to-day deliverables can fear that data science initiatives might upend established workflows or even threaten their jobs. It does not help when the occasional breathless article proclaims “robots are coming for middle management.” To keep these managers engaged rather than alarmed, leaders must position analytics as a tool that amplifies, rather than replaces, human expertise. If a new machine learning model can detect anomalies in a financial ledger more accurately than humans, for instance, the manager responsible for auditing that ledger can be retrained or repositioned to handle higher-level financial strategy or vendor negotiations. The key is emphasizing that analytics frees employees from mundane, repetitive tasks and allows them to focus on the more strategic, satisfying aspects of their roles. Enlightened organizations provide clear communication and training opportunities, highlighting career development paths that integrate data literacy as a competitive advantage rather than a threat.

To move from theoretical enthusiasm to daily practice, many enterprises invest in internal workshops or pilot programs that demonstrate the power of analytics in real-world scenarios. These sessions often involve cross-functional teams working on carefully chosen proof-of-concept projects. The best pilots tackle tangible problems—like optimizing a crucial supply chain route or forecasting sales for a flagship product—so skeptics can see a direct line from analytics output to improved outcomes. When these pilot efforts succeed, they become marketing vehicles for the entire data science program, turning former doubters into vocal champions who share their success stories across the organization. Some companies even formalize this by creating champion networks: clusters of employees across different departments who promote the adoption of data tools and best practices. This grassroots enthusiasm complements top-down mandates and ensures that analytics is not dismissed as some executive fancy imposed without real frontline relevance.

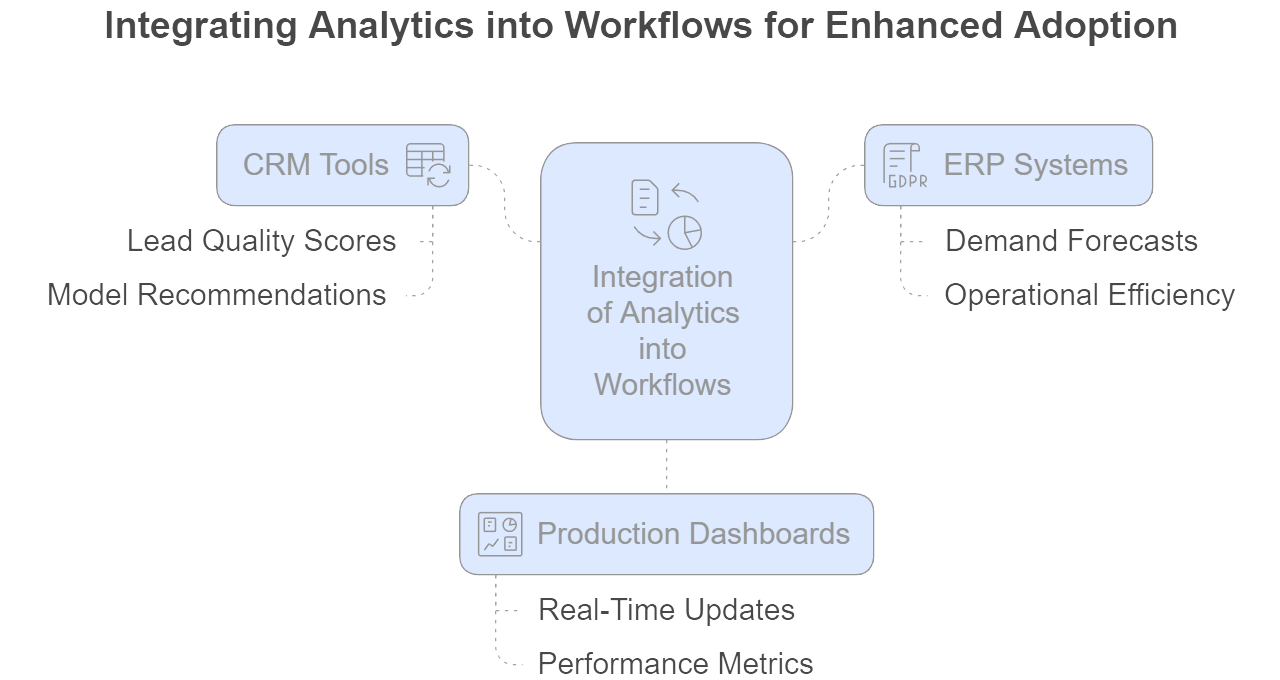

Figure 7: Illustration of how integrating analytics into existing workflows, through CRM tools, ERP systems, and production dashboards, leads to actionable insights like lead quality scores, demand forecasts, and performance metrics. (Image by Napkin).

While short-term excitement can spark adoption, long-term success depends on weaving analytics into routine workflows. Nobody in a busy marketing department or supply chain unit wants to log into multiple disconnected systems just to retrieve a single data point. By integrating data science outputs directly into the platforms employees already use—like CRM tools, ERP systems, or production dashboards—organizations lower the activation energy required to interact with analytics. The result is a seamless experience: a marketing manager glances at her CRM and instantly sees model-driven lead quality scores, while a supply chain analyst opens the usual interface and finds demand forecasts updated in real time. Such frictionless access to insights drastically increases the likelihood that managers will rely on them for everyday decisions. Over time, analytics transforms from an intriguing concept to a default setting: checking model recommendations or forecast dashboards becomes as second-nature as sending an email.

Initial enthusiasm can falter if analytics teams cannot demonstrate ongoing value. To maintain momentum, leaders must ensure that every data-driven project has transparent performance metrics. The aim is not only to measure the direct ROI but also to share improvements in speed, accuracy, or customer satisfaction that might be less quantifiable in pure dollar terms. When stakeholders see that analytics-driven decisions led to tangible improvements—like a drop in inventory holding costs or a rise in conversion rates—they grow more confident in the data science process. This trust, in turn, encourages them to use analytics more frequently, request new models for additional challenges, and even champion further investment in data capabilities. It is a virtuous cycle: every successful outcome sets the stage for the next phase of deeper analytics integration.

Ultimately, driving adoption is not just about selling one or two novel use cases. It is about embedding a data-driven ethos so deeply that it influences the entire strategic direction of the company. When cross-functional teams instinctively rely on analytics before initiating a new product line or reconfiguring a supply chain route, that is when data science truly becomes part of the corporate DNA. The greatest payoff emerges when data insights feed directly into strategic dialogues at the highest levels, shaping not just operational tweaks but long-term positioning in the market. Organizations that achieve this alignment find themselves better equipped to adapt to new competitive threats or shifts in consumer behavior, because they possess both the analytical tools and the cultural acceptance to pivot quickly. While it may sound lofty, the evidence is clear: companies with an institutionalized data mindset repeatedly outperform those that treat analytics as an optional accessory.

By addressing adoption and stakeholder engagement from the get-go—through executive sponsorship, robust cultural shifts, middle management buy-in, integrated workflows, and transparent performance tracking—enterprises give their data science initiatives the support they need to flourish. The result is a cycle of success where analytics illuminates opportunities, teams act on data-driven insights, and the organization continually refines its capabilities, forging a clear path toward sustained competitive advantage.

3.8. Future Trends and Emerging Opportunities in Data-Driven Business

Companies everywhere are bracing for a new era defined by artificial intelligence, increasingly powerful data ecosystems, and real-time analytics—an era that is transforming competitive landscapes faster than many executives ever anticipated. The mechanisms behind this transformation range from automated decision-making systems that handle complex tasks once entrusted to humans, to bold new innovations like blockchain and the Internet of Things (IoT) that are rewriting the rules on how data is collected, shared, and monetized. From finance and retail to healthcare and logistics, no sector is immune from this technological undercurrent. In fact, forward-looking firms already view data science as a core pillar of their commercial strategies, investing in AI-driven research, embedding analytics into every critical process, and rethinking how their leadership structures might evolve in a hyper-connected future.

One of the most striking developments is the rise of AI-driven automation. This is not merely about automating back-office tasks like invoice processing or data entry. It extends to autonomous decision-making systems that handle intricate, high-stakes processes such as algorithmic trading in finance and dynamic pricing in retail (Doerr, 2018). In the past, these decisions required extensive human oversight—teams of analysts sifting through data, generating reports, and holding meetings to decide on the next move. Now, machine learning and deep learning algorithms can factor in vast amounts of real-time information to make optimized decisions within seconds. While this increases operational speed and can produce sizable cost savings, it also demands new governance models to ensure transparency and oversight, lest one faulty algorithm trigger a cascade of unintended consequences. The organizations that master both the technology and the guardrails are positioned to reshape entire industries, outpacing rivals through faster, more adaptive responses to market fluctuations.
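A toy version of dynamic pricing with guardrails is sketched below in Python. It assumes a hypothetical linear demand curve, quantity = intercept - slope * price, searches a grid of candidate prices for the one that maximizes profit, and restricts the search to a floor and ceiling that a governance team might impose. Under these assumptions the unconstrained optimum has a closed form, price = (intercept + slope * unit_cost) / (2 * slope), which offers a way to check the search. All parameters are illustrative; real pricing engines estimate demand from data and update it continuously.

```python
def best_price(intercept, slope, unit_cost, floor, ceiling, step=0.01):
    """Profit-maximizing price under an assumed linear demand curve
    q = intercept - slope * price, searched only within [floor, ceiling]."""
    best_p, best_profit = floor, float("-inf")
    n_steps = int(round((ceiling - floor) / step))
    for i in range(n_steps + 1):
        p = floor + i * step
        quantity = max(0.0, intercept - slope * p)  # demand cannot go negative
        profit = (p - unit_cost) * quantity
        if profit > best_profit:
            best_p, best_profit = p, profit
    return round(best_p, 2), round(best_profit, 2)


# Hypothetical demand: 1000 units at price 0, losing 20 units per unit of price; cost 10.
print(best_price(1000, 20, 10, floor=15, ceiling=45))  # (30.0, 8000.0)
print(best_price(1000, 20, 10, floor=15, ceiling=25))  # (25.0, 7500.0)
```

The second call shows the guardrail at work: with the ceiling lowered to 25, the search settles at the cap instead of the unconstrained optimum of 30, the kind of human-set boundary that keeps an automated system from drifting into prices the business would not endorse.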

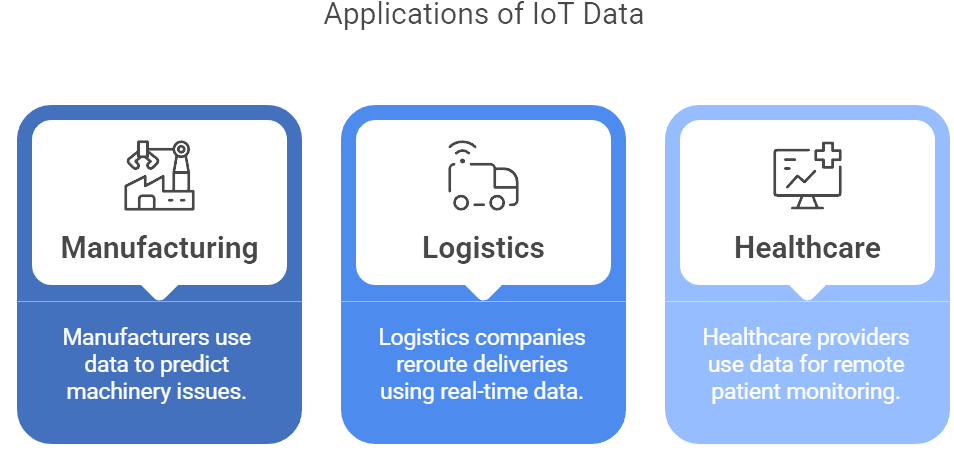

Figure 8: Illustration of the practical use of IoT data, showing how manufacturers predict machinery issues, logistics companies optimize delivery routes, and healthcare providers enable remote patient monitoring. (Image by Napkin).

The Internet of Things is layering an additional dimension onto the data-driven revolution. Smart sensors in factories, vehicles, homes, and even hospital rooms generate torrents of real-time data points that feed into predictive models. Manufacturers use this data to preemptively identify machinery issues, scheduling maintenance before breakdowns happen. Logistics giants rely on sensor streams to reroute deliveries in response to sudden traffic snarls or weather events, thereby cutting operational costs and meeting customer expectations for swift, reliable service. Healthcare providers leverage remote patient monitoring and telemedicine data to anticipate complications, sparing patients unnecessary ER visits and improving outcomes. All these scenarios hinge on the ability to collect, clean, and analyze data at breakneck speed. For many businesses, that means investing in cutting-edge cloud platforms, edge computing strategies, and data pipelines engineered for real-time analytics. It is a far cry from the days of nightly batch processing, pushing organizations to step up their infrastructure game or risk being left in the dust.
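The predictive-maintenance idea can be illustrated with one of the simplest streaming anomaly detectors: flag any sensor reading that sits more than a few standard deviations away from the recent average. The Python sketch below is a minimal example under stated assumptions (a single vibration sensor, a five-reading window, a threshold of three standard deviations, and hypothetical readings); production systems typically use richer models that combine many sensors and learn failure signatures from maintenance history.

```python
from collections import deque
from statistics import mean, stdev


def rolling_zscore_alerts(readings, window=5, threshold=3.0):
    """Return (index, value) pairs for readings more than `threshold` standard
    deviations away from the mean of the preceding `window` readings."""
    recent = deque(maxlen=window)
    alerts = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            baseline = list(recent)
            mu, sigma = mean(baseline), stdev(baseline)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                alerts.append((i, value))
        recent.append(value)  # the oldest reading drops out automatically
    return alerts


# Hypothetical vibration readings (arbitrary units) with one spike.
vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 2.5, 1.0]
print(rolling_zscore_alerts(vibration))  # [(7, 2.5)]
```

One design trade-off is visible here: once the spike enters the window, it inflates the baseline deviation for the next few readings and temporarily makes the detector less sensitive. Robust statistics such as the median absolute deviation are a common remedy.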

In parallel with these technological advances, the job description of a Chief Data Officer (CDO) or analytics executive is undergoing a fascinating transformation. Once perceived primarily as custodians of data governance—tackling tasks like regulatory compliance and data quality—these leaders are now expected to be catalysts for business innovation. They identify emerging revenue models built on analytics, champion ethical AI adoption to stave off reputational and legal nightmares, and collaborate with product teams to bake intelligence into goods and services. Organizations that merely hand the CDO a laundry list of compliance mandates and storage architecture decisions miss a crucial opportunity. Those that invite them to the boardroom and consult them on new digital business models, strategic market entries, and even M&A evaluations reap the benefits of an integrated, forward-looking data strategy. This leadership evolution does not happen overnight, but when executed effectively, it can forge a competitive edge that is difficult for laggards to replicate.

Blockchain technology, while sometimes overshadowed by the hype around cryptocurrencies, is carving out a niche in secure, auditable data sharing. By maintaining a decentralized ledger, blockchain-based platforms enable businesses to exchange information with partners and customers without relying on a single point of control or vulnerability. Whether used for supply chain tracking—where each handoff is recorded in an immutable ledger—or healthcare data management—where patient records are securely shared among providers—blockchain can enhance trust in multi-party transactions. The technology remains in a relatively nascent stage, with interoperability and scalability challenges yet to be fully resolved. However, organizations that explore its potential in tandem with data science can discover unique opportunities to streamline operations, assure authenticity, and open new partnership models. As these capabilities mature, they may catalyze entire ecosystems of data-driven services that we can scarcely imagine today.
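The property that makes a ledger “immutable” is easiest to see in a stripped-down hash chain, where each record stores a cryptographic hash of its contents together with the previous record's hash, so altering any earlier entry breaks the chain. The Python sketch below illustrates only that tamper-evidence property using SHA-256; it deliberately omits the decentralization and consensus mechanisms that real blockchain platforms add, and the shipment records are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(record: dict, prev_hash: str) -> str:
    """SHA-256 over a canonical JSON encoding of the record and the previous hash."""
    payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: list, record: dict) -> None:
    """Link a new record to the hash of the last block in the chain."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "prev": prev, "hash": block_hash(record, prev)})


def verify_chain(chain: list) -> bool:
    """Recompute every hash; any edited record or broken link fails verification."""
    prev = GENESIS
    for block in chain:
        if block["prev"] != prev or block["hash"] != block_hash(block["record"], prev):
            return False
        prev = block["hash"]
    return True


# Hypothetical supply chain handoffs for one shipment.
ledger = []
append_block(ledger, {"shipment": "S-001", "from": "factory", "to": "warehouse"})
append_block(ledger, {"shipment": "S-001", "from": "warehouse", "to": "retailer"})
print(verify_chain(ledger))  # True

ledger[0]["record"]["to"] = "unknown_depot"  # tamper with an earlier handoff
print(verify_chain(ledger))  # False
```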

In this rapidly evolving environment, agility is the ultimate currency. New AI techniques surface daily, and consumer sentiments around data privacy can shift in response to a single scandal. Organizations that fail to adapt risk becoming the cautionary tales of tomorrow. Yet, with agility comes responsibility. Ethical and responsible deployment of data science is not just a moral stance; it is a strategic necessity in a climate where customers and regulators grow ever more vigilant about privacy, bias, and security. Companies that operate ethically, maintaining robust governance structures and investing in workforce data literacy, will find themselves more trusted by stakeholders—a critical asset in an economy increasingly driven by real-time intelligence.

Forward-thinking leaders already envision a future in which data science, machine learning, and AI become inseparable from the daily fabric of business. Product design teams consult predictive models to prioritize the feature rollouts likely to yield the highest customer satisfaction. Supply chain managers rely on digital twins (virtual replicas of real-world operations) to test changes in routing and capacity before implementing them in live environments. Marketing experts tailor campaigns to micro-segments, optimizing messaging and timing in real time. Finance executives incorporate AI-driven forecasts into capital planning, treasury operations, and risk assessments, helping the company’s financial management become as adaptive as the technology it employs (Davenport and Patil, 2012).

This holistic integration of analytics will present new hurdles. Talent shortages may become more acute as every company competes for experienced data scientists and AI-savvy product managers. Regulations will continue to evolve, requiring businesses to stay nimble in their compliance efforts. Competition in the analytics space will intensify, pushing firms to differentiate themselves through proprietary algorithms, niche domain expertise, or best-in-class implementation speed. Yet the opportunities ahead outweigh these challenges. From transforming customer engagement to creating entirely new lines of business, the organizations that pursue data science with strategic vision and operational discipline will be the ones shaping tomorrow’s data-driven marketplace.

In essence, the enterprises best positioned for the future are those that consistently harness analytics to innovate, pivot quickly, and operate responsibly. Successful leaders recognize that advanced data capabilities are no longer optional luxuries but essentials that define market relevance. As AI-driven automation, IoT, blockchain, and real-time analytics continue to converge, they create fertile ground for disruption, growth, and, when navigated effectively, sustained commercial success.

3.9. Conclusion and Further Learning

Aligning data science with business strategy and ROI is a journey – one that transforms how an organization thinks, decides, and prospers. As this chapter has shown, it requires vision from the top, collaboration across silos, investment in people and technology, and a steadfast focus on value. The rewards are compelling: clearer insights, smarter decisions, streamlined operations, happier customers, and a competitive edge that others will struggle to match. Companies that master this alignment do not use data only occasionally; they weave it into their DNA, creating a culture where intuition and information work hand in hand.

If you’re a business leader or professional, you have the opportunity to be a champion of this transformation. Start with small steps – a pilot project here, a dashboard there – but tie them to big goals. Demonstrate a win, then another. Communicate, educate, and inspire your teams with what’s possible. By fostering a mindset that values curiosity over convention and evidence over ego, you build an organization that learns and adapts continuously. In a world of rapid change, that ability is perhaps the greatest strategic asset of all.

Remember, the path to a data-driven future is iterative. There will be challenges: a model might fail, a dataset might disappoint, stakeholders might be skeptical. But each challenge is surmountable with the principles and practices discussed in this chapter. Learn from setbacks, maintain good governance, and keep the lines of communication open. Over time, momentum will build, and data silos and gut-driven calls will give way to an orchestrated, insight-rich operation in which everyone from the boardroom to the front line embraces data as a partner.

You don’t need to be a data scientist to lead this charge – you need the strategic vision to see where data can propel your business and the leadership to bring the right people together to make it happen. As you apply the concepts from this chapter, you will not only achieve better ROI on individual projects, but also guide your organization to become more innovative, resilient, and customer-centric.

The future belongs to enterprises that can learn faster and execute smarter – and aligning data science with strategy is the key to unlocking that capability. You have the knowledge; now it’s time to put it into action. Encourage your teams to experiment and share insights, make data a visible part of every decision, and align every analytics effort with a strategic objective. Do this consistently, and you will cultivate a powerful synergy between data and strategy.

In closing, consider the leaders highlighted in this chapter and elsewhere who have said, in various ways, that data is the new currency of business. By investing it wisely and aligning it with purpose, you will ensure your organization not only survives in the data-driven era, but truly thrives. Here’s to your journey of turning data into competitive advantage – may it be rewarding, enlightening, and even transformative for your business. Good luck, and let the data light the way to your next success!

To explore this chapter’s concepts further, here are 20 advanced prompts and questions designed to stimulate deeper thinking and discussion:

Strategic Alignment via Balanced Scorecard: Choose a current data science project in your organization and map it to a Balanced Scorecard framework. Identify which BSC perspectives (Financial, Customer, Internal Process, Learning & Growth) the project impacts and suggest KPIs for each. How well balanced is the initiative across the perspectives?

OKRs for Data Teams: Design an OKR (one Objective and 2–3 Key Results) for a data science team aligned with a corporate objective. For example, if the corporate goal is improving customer retention, what OKR could the data team adopt? Explain why this alignment would drive focus and how you would track progress.

Impact vs. Feasibility Matrix in Practice: List five potential analytics projects in an industry (e.g., retail or healthcare) and plot them on an impact-feasibility matrix. Which projects come out as “quick wins” and which as “long-term bets”? Discuss how you would explain to stakeholders why some low-feasibility but high-impact projects (the “moonshots”) might still be worth pursuing for strategic reasons.

ROI Calculation Scenario: Imagine a bank wants to implement an AI-driven loan approval system. Outline how you would calculate the ROI for this project using NPV and payback period. What key benefits (e.g., faster processing, reduced defaults) and costs (IT investment, training, model risk) would you include? How would you handle qualitative benefits like improved customer experience in your justification?

Qualitative Benefits to Quantitative Metrics: Select a qualitative benefit from data science (such as “improved customer insight” or “better decision-making”) and propose a way to measure or estimate its business value. For instance, how might you quantify the value of insights gained from a new customer segmentation analysis? Discuss any assumptions or proxies used.

GDPR Compliance Plan: You are leading a customer analytics project that will use the personal data of EU customers. Draft a brief compliance plan ensuring GDPR adherence. What steps will you take regarding consent, anonymization, data access, and handling data subject rights? How will these compliance steps align with, and possibly enhance, the project’s credibility and success?

Ethical AI Dilemma: Your company’s pricing algorithm has learned to charge higher prices to a certain customer demographic, leading to complaints of unfairness. How would you address this ethically and strategically? Discuss steps like bias audit, algorithm adjustments, or transparency measures. What business risks does this scenario highlight and how would you mitigate them through governance?

Building Data Literacy: Propose a data literacy program for a mid-sized non-tech company. What topics would you cover for executives vs. middle managers vs. frontline employees? Suggest interactive formats (workshops, e-learning, hackathons) and how you’d measure the program’s impact on the company’s decision-making culture.

Cross-Functional Data Squad: Design a “data squad” for a specific problem, e.g., reducing supply chain delays. Identify the roles (data scientist, data engineer, business analyst, supply chain manager, IT rep, etc.) and how they would collaborate. What challenges might arise in communication and how would the squad overcome them?

Championing Data Culture from Middle Management: If you are a middle manager convinced of data-driven methods but your peers are not, how would you champion a data culture? Outline a plan that includes one-on-one persuasion, sharing small success stories, mentoring colleagues on using data, and possibly presenting to senior leadership about on-the-ground obstacles and needs.

Real-Time Analytics Use Case: Consider a retail chain that currently aggregates sales data weekly. Brainstorm a real-time analytics use case (e.g., real-time inventory alerts or in-store digital signage that changes with customer behavior) and discuss what infrastructure and organizational changes would be needed. How could real-time data improve a key metric such as conversion rate or stock-out frequency?

Generative AI in Your Business: Imagine applying generative AI (text or image generation) to your industry. Provide two innovative use-cases (for example, automated marketing content creation, or AI-generated product designs to test). What value could this create and what risks (quality, brand consistency, ethical concerns) would you need to manage as part of strategy?

Data Monetization Idea: Many companies sit on valuable data that could potentially be monetized. Propose a data product or service your company could offer externally. For instance, a logistics firm might sell supply chain insights as reports or via API. Evaluate how this aligns with your core strategy and what new capabilities (or partnerships) you’d need to make it happen.

Role of CDO vs. CIO: Debate the roles of the Chief Data Officer and Chief Information Officer. In a scenario where both exist, how should responsibilities be divided? For example, who should own data ethics policy, data architecture, advanced analytics development, and business intelligence? How can they collaborate to ensure both “defensive” and “offensive” data strategies are covered?

AI Governance Board Simulation: Your company is starting an AI governance board to review high-impact AI projects. List 5 key questions or criteria this board should apply when evaluating a project (e.g., “Does it comply with our AI ethics principles?”, “Is there a human-in-the-loop for critical decisions?”). Apply these criteria to a hypothetical project (say, an AI hiring tool) and determine if you’d approve or request modifications.

Scenario Planning – Data Disruption: Imagine a disruptive event, such as a new data privacy law that significantly limits the use of third-party consumer data. How would your organization adapt its data strategy? Discuss alternative approaches such as greater use of first-party data, investing in synthetic data, or shifting analytics toward less personal data. What does this scenario teach about flexibility in data strategy?

Continuous Learning Loop: Design a feedback loop for continuous learning in a deployed data product. For example, you launched a recommendation engine – how will you continually evaluate its performance and update it? Outline a cycle of data collection on outcomes, model retraining, A/B testing new versions, and deployment. How do you ensure this loop aligns with changing business objectives (perhaps the strategy shifts to promoting new categories)?

Data and Sustainability Goals: Environmental, Social, and Governance (ESG) goals are now part of many business strategies. Propose how data science can help achieve a sustainability target (e.g., reducing carbon footprint by X%). Candidate approaches include route optimization (such as UPS’s ORION system) or energy-usage analytics in facilities. What data would you use, and how would you tie the results to ROI, given that sustainability and efficiency often go hand in hand?

Next-Gen Customer Experience: Envision the customer experience in 5 years with AI everywhere. For a chosen industry (banking, retail, travel, etc.), describe how data-driven personalization, real-time interaction, and automation might make the experience different for the customer. Then identify what the company needs to do today data-wise to move toward that vision (such as integrating data silos to get a full customer view, or investing in certain AI capabilities).

Measuring Data Culture Maturity: How would you assess the maturity of your organization’s data-driven culture? Propose a set of indicators or a mini “audit.” These might include: percentage of decisions backed by data analysis, employee survey results on attitudes toward data, number of active users of analytics tools, quality of data documentation, etc. After assessing, what would be your top recommendation to mature the culture further?

Each of these prompts encourages exploring the chapter’s themes in greater depth or applying them in new ways. They can be used for self-reflection, team workshops, or discussions in executive meetings to drive deeper understanding and action-oriented insights.
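For the ROI Calculation Scenario prompt, the arithmetic behind NPV and payback period can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function names `npv` and `payback_period` are hypothetical helpers, the 10% discount rate and all cash-flow figures are invented for the example rather than drawn from any real bank, the payback period is the simple (undiscounted) version, and cash is assumed to arrive evenly within each year so the final year can be interpolated.

```python
from typing import Optional, Sequence


def npv(rate: float, cash_flows: Sequence[float]) -> float:
    """Net present value; cash_flows[0] occurs today (t=0) and is not discounted."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def payback_period(cash_flows: Sequence[float]) -> Optional[float]:
    """Years until cumulative (undiscounted) cash flow turns non-negative.

    Assumes cash arrives evenly within each year, so the recovery year is
    interpolated. Returns None if the investment is never recovered.
    """
    cumulative = cash_flows[0]
    if cumulative >= 0:
        return 0.0
    for year, cf in enumerate(cash_flows[1:], start=1):
        if cumulative + cf >= 0:
            # Fraction of this year needed to cover the remaining shortfall.
            return (year - 1) + (-cumulative / cf)
        cumulative += cf
    return None


if __name__ == "__main__":
    # Hypothetical figures for the AI loan-approval scenario (not real data):
    # year 0 = build, integration, and staff training costs;
    # years 1-4 = net benefits (fewer defaults, faster processing) minus run costs.
    flows = [-500_000, 150_000, 200_000, 200_000, 200_000]
    print(f"NPV at 10%: {npv(0.10, flows):,.0f}")                # NPV at 10%: 88,519
    print(f"Payback period: {payback_period(flows):.2f} years")  # Payback period: 2.75 years
```

A positive NPV at the chosen discount rate suggests the project clears that hurdle rate; rerunning the calculation with a higher rate or more conservative benefit estimates is a quick way to test how sensitive the business case is to its assumptions. Qualitative benefits such as improved customer experience sit outside these figures and would need to be argued separately or approximated with proxies.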

To help you put the chapter’s concepts into practice, here are five hands-on assignments. These are designed as structured tasks with guidance, so you and your team can apply what you’ve learned to your own organizational context.

🛠️ Assignments

📝 Assignment 1: Strategy Alignment Workshop

🎯 Objective:

Ensure a data project is tightly aligned with business objectives using the Balanced Scorecard and OKR frameworks.

📋 Tasks:

| Aspect | Details |
|---|---|
| 1. Select a Data Initiative: | Pick one current or upcoming data science project in your organization (for example, a customer churn prediction model or inventory optimization tool). Gather the project team and relevant business stakeholders for a workshop session. |